Conversation Analysts

Overview

Conversation Analysts enable you to generate AI-powered metrics for both AI Agent and human agent conversations in Quiq.

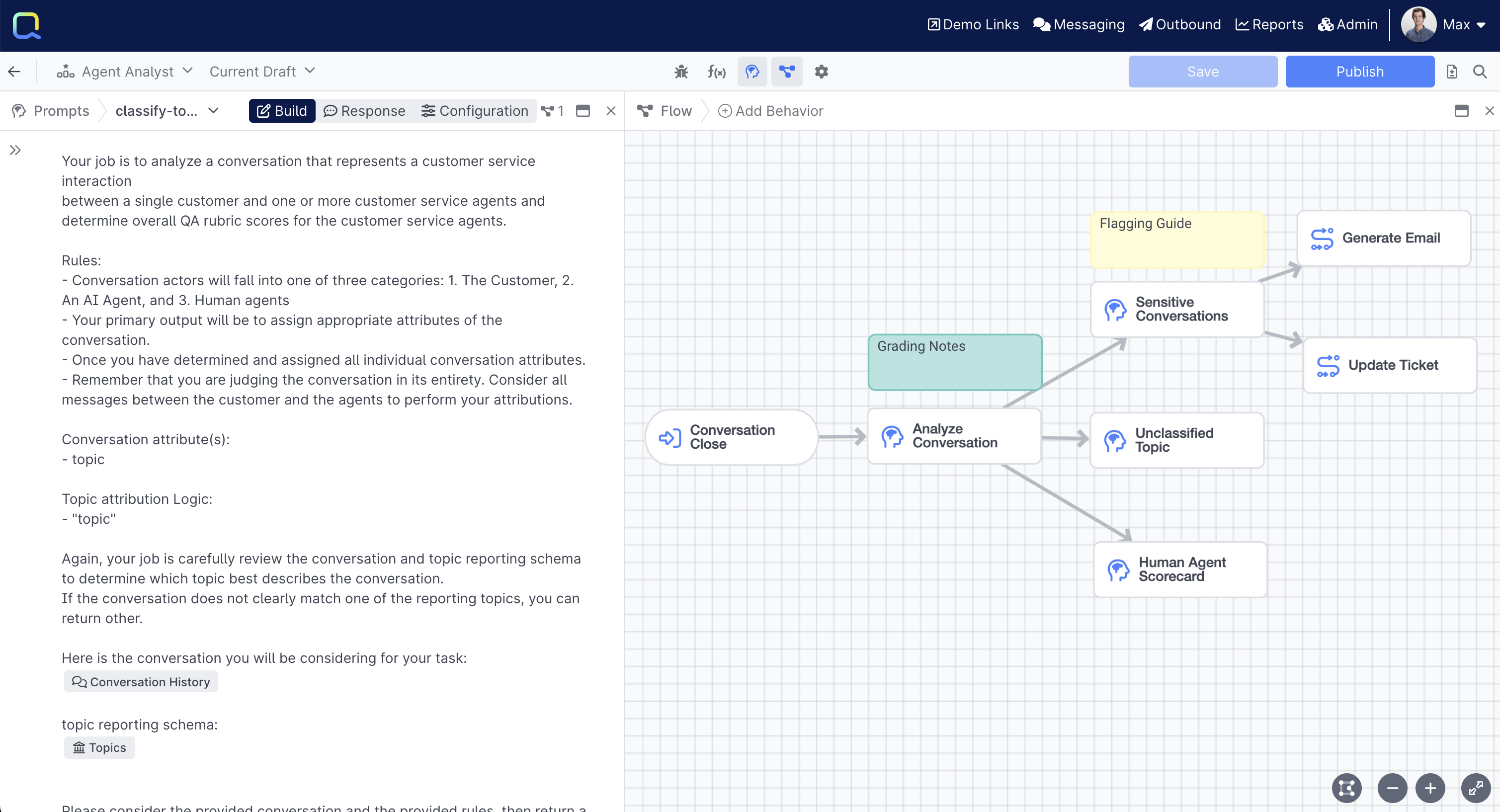

Conversation Analysts are integrated into your experience via Conversation Rules, and run once a conversation closes.

Getting Started

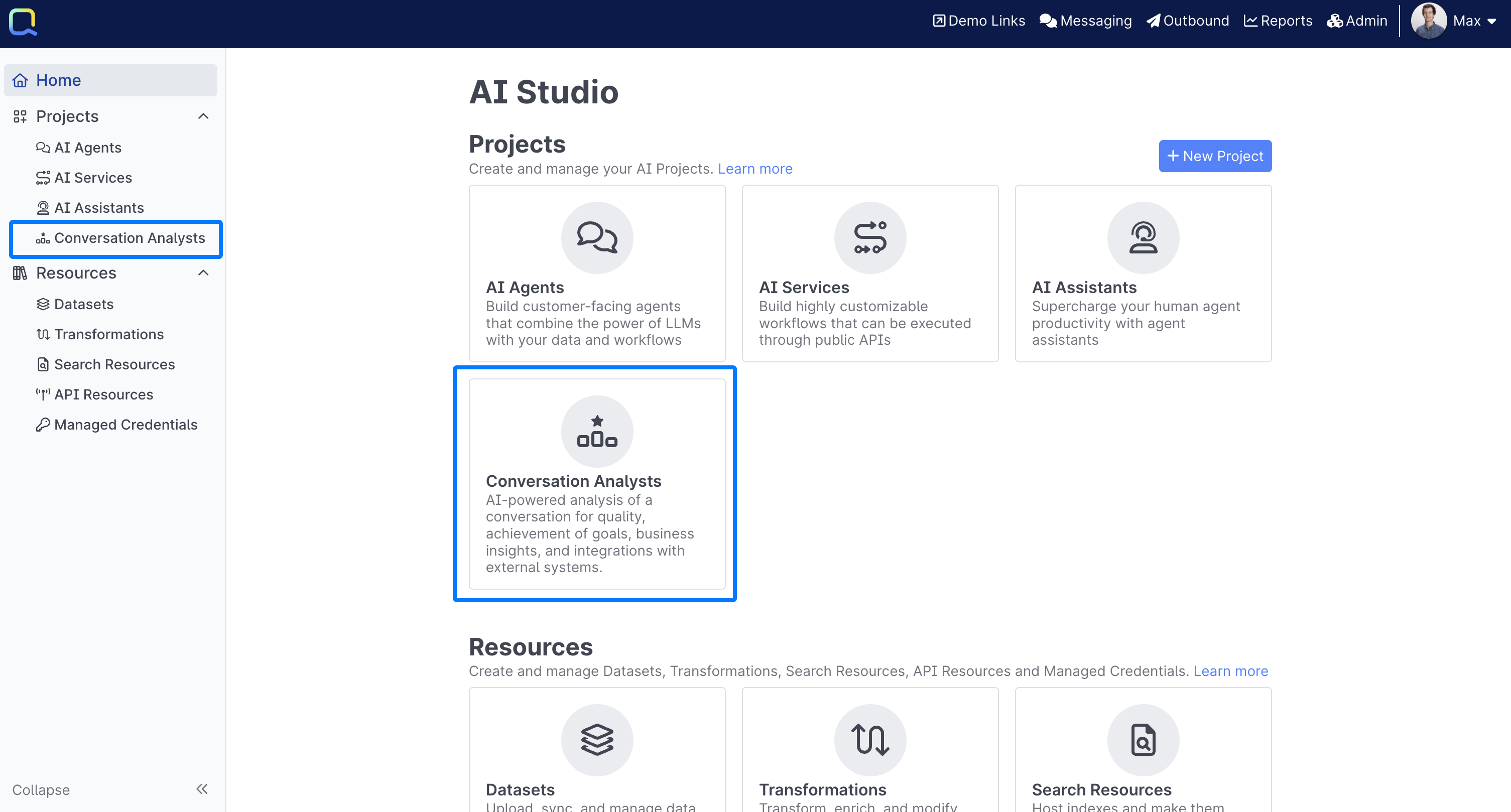

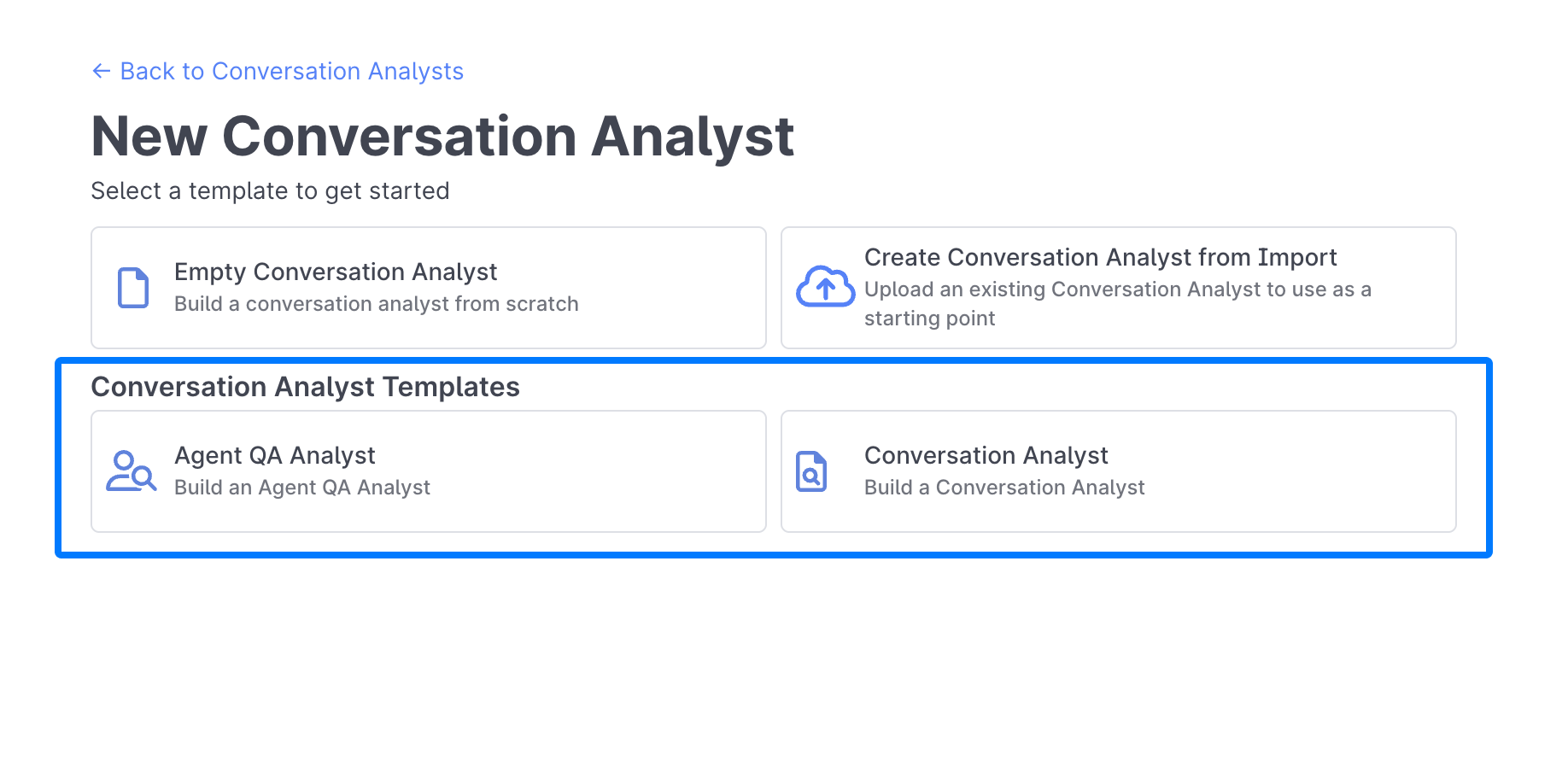

Conversation Analysts are created and managed like other AI Studio Projects, and can be created using the New Project button, or via the Conversation Analysts tab:

Starting with a Template

Conversation Analysts come with a series of templates you can use to get started. Learn more about the available Conversation Analyst Templates.

Starting from Scratch

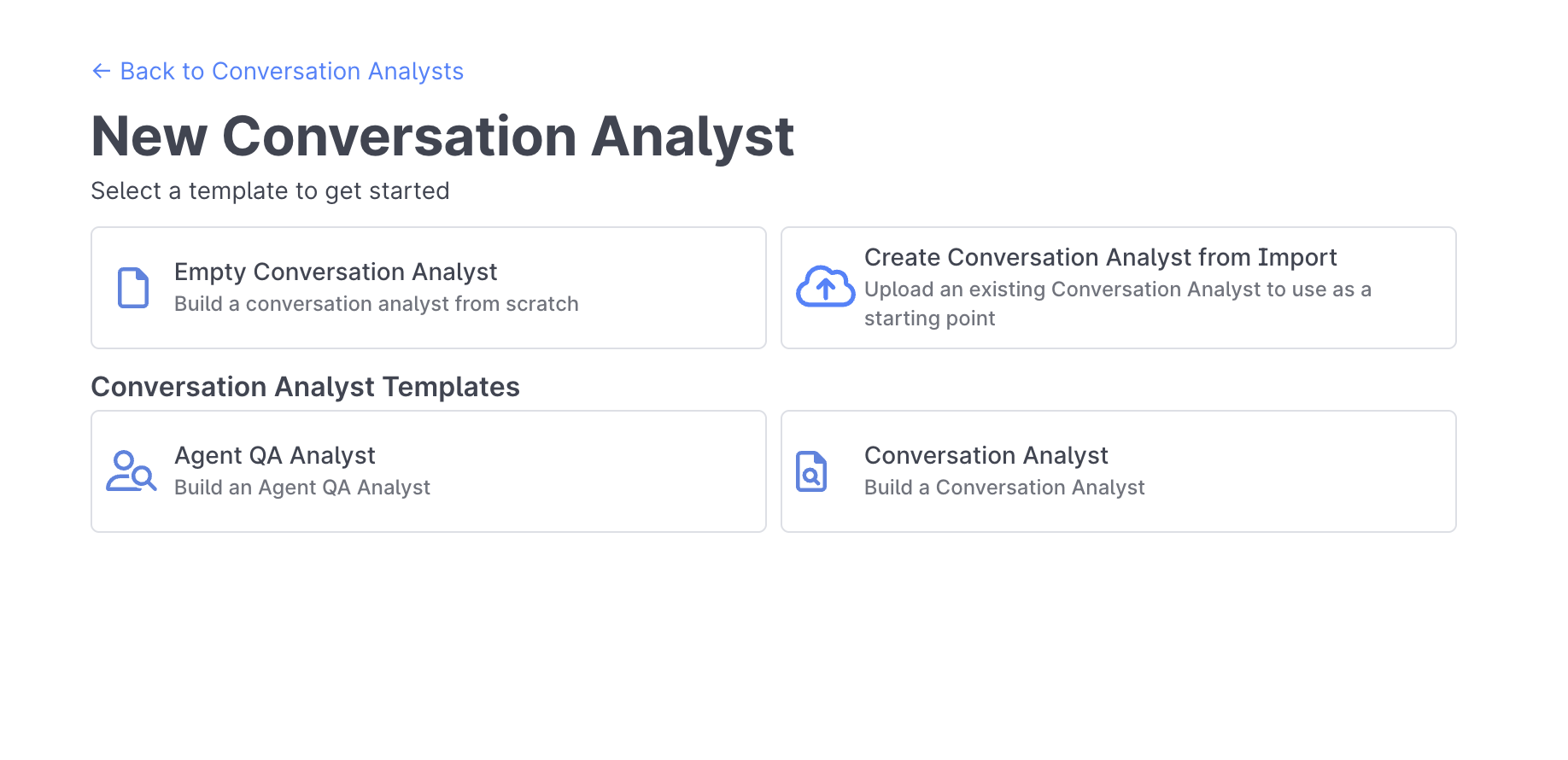

Select the Empty Conversation Analyst option if you'd like to build one from scratch:

Building a Conversation Analyst

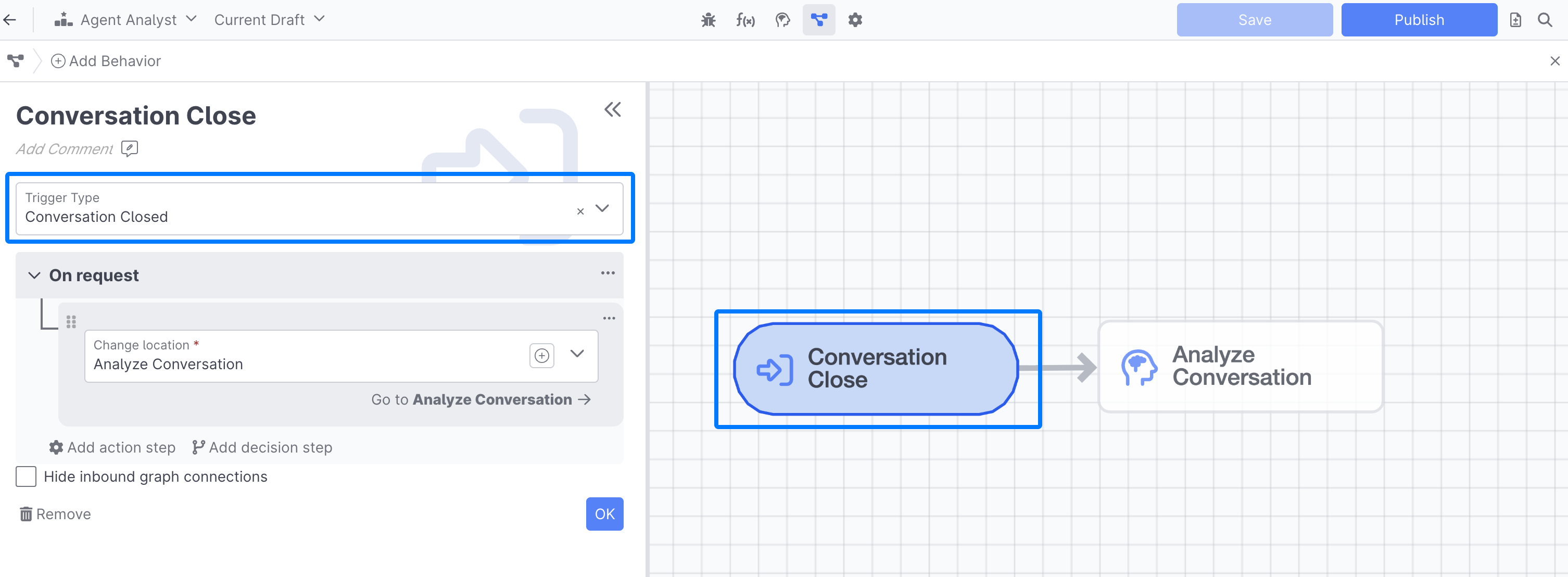

Trigger

Conversation Analysts, like all other AI Studio projects, start with a Trigger Behavior that initiates the Conversation Analyst. Currently, Conversation Closed is the only Trigger Type the Trigger Behavior supports:

You can add conditional logic or other actions to this Behavior, but often what you'll want to do is transition to a Prompt Behavior.

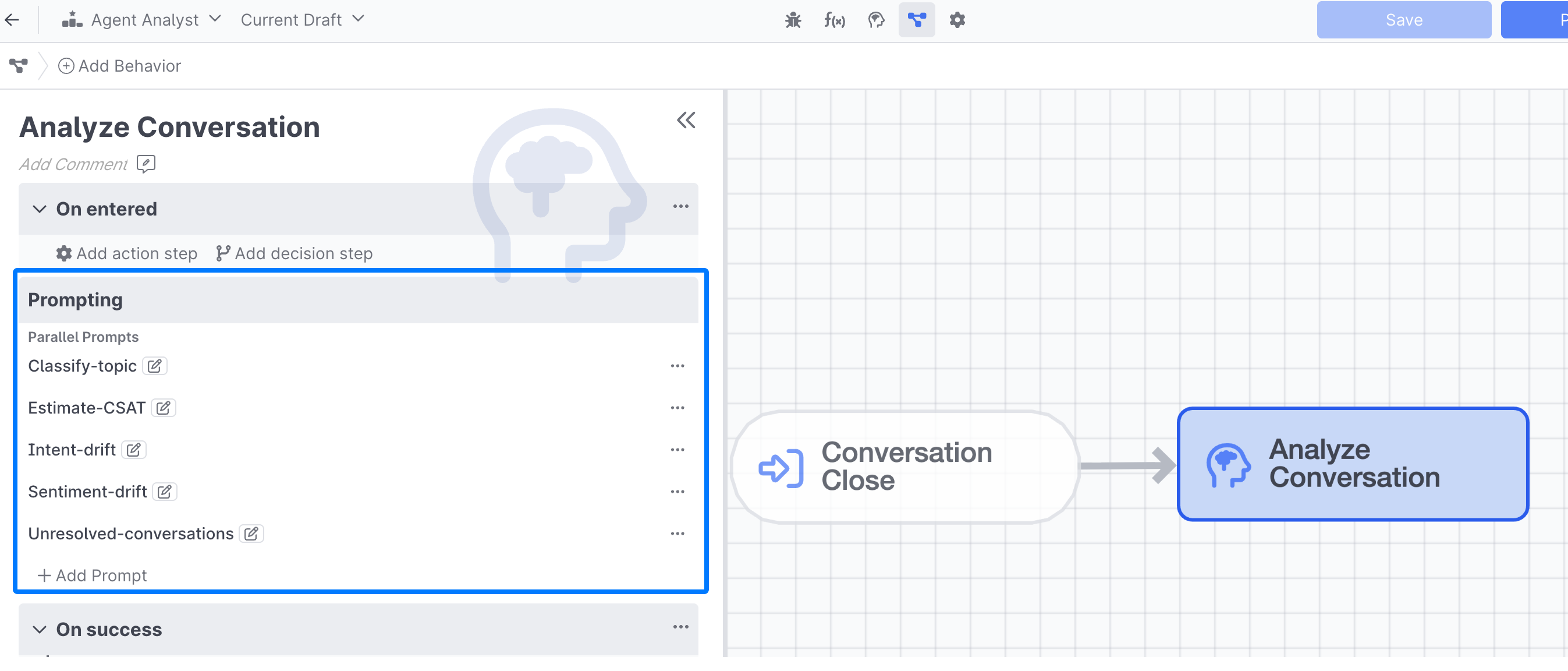

Building your Prompts

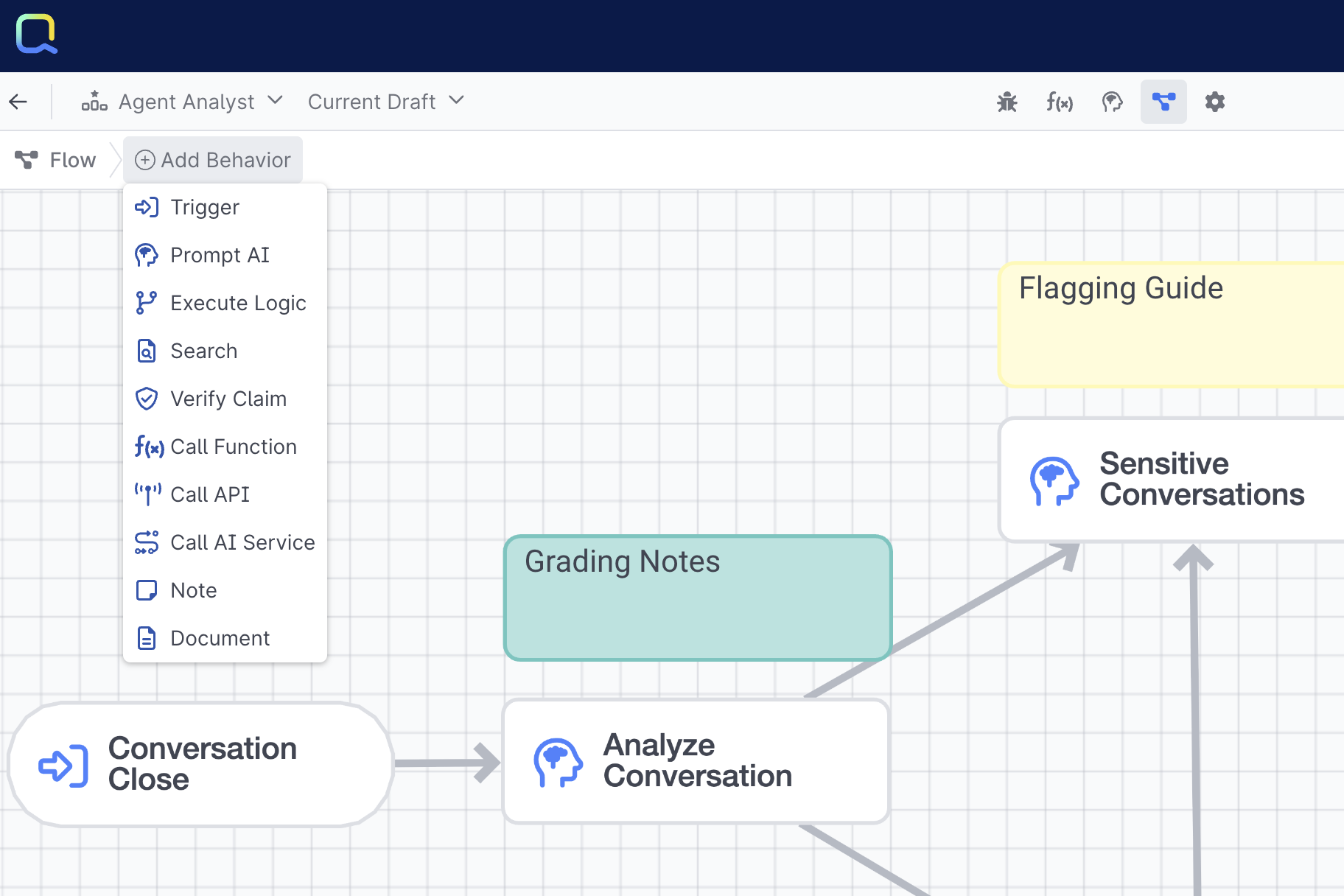

Once you add your Prompt Behavior, either via the Add Behavior button, or directly from the Trigger behavior, you can begin building your prompts.

Because Quiq enables you to run multiple prompts in parallel in a single Prompt Behavior, you can create prompts for all of the metrics you're interested in tracking in a single step:

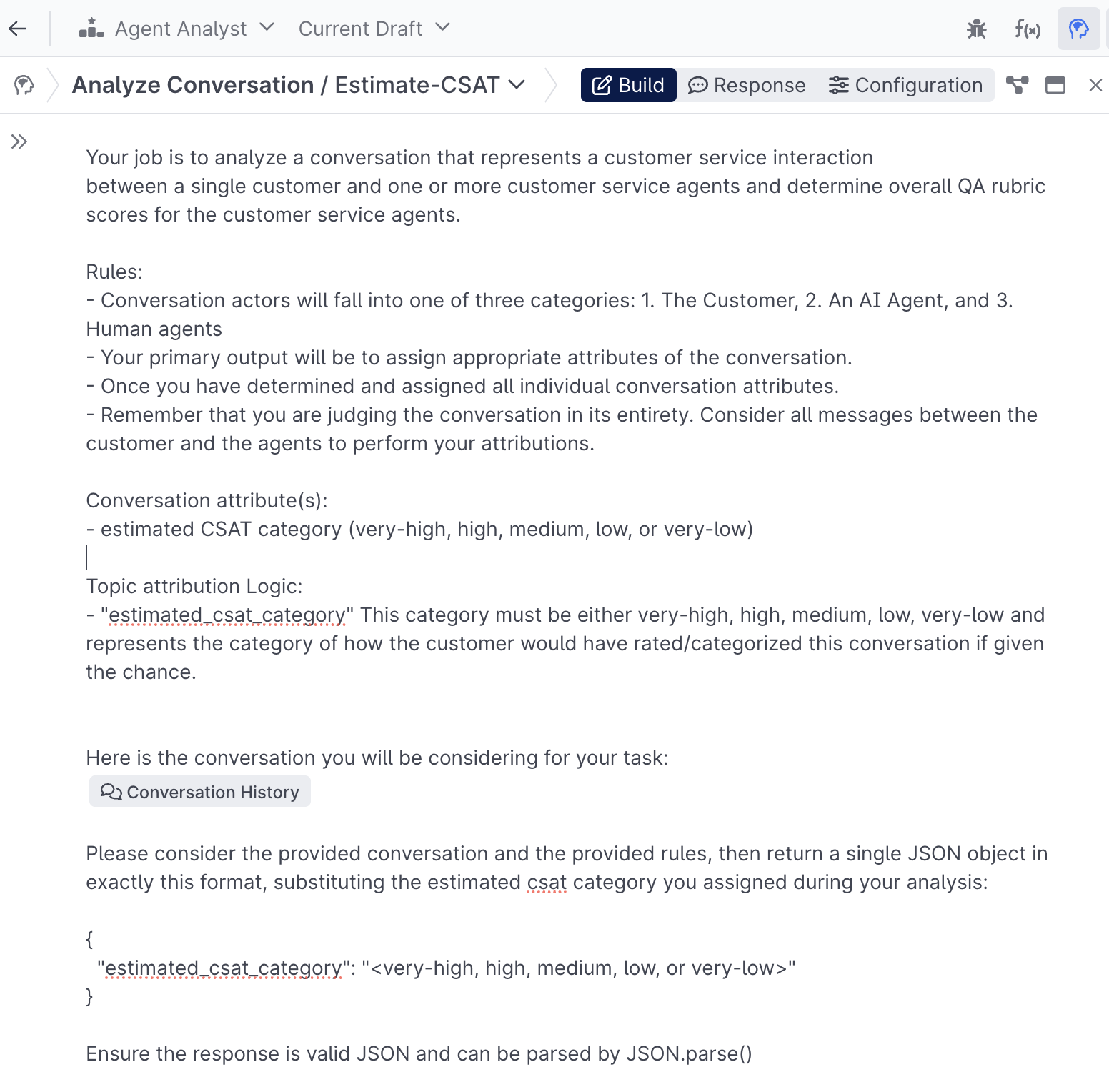
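As a conceptual illustration only, the sketch below mimics the idea of several metric prompts running side by side over one closed conversation. It is not Quiq code: `run_prompt`, the metric names, and the prompt texts are hypothetical stand-ins, and in AI Studio you configure this in the Prompt Behavior rather than writing it yourself.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical metric prompts; in AI Studio each would be one prompt in a Prompt Behavior.
PROMPTS = {
    "sentiment": "Rate the customer's overall sentiment as positive, neutral, or negative.",
    "resolved": "Answer yes or no: was the customer's issue resolved?",
    "topic": "Name the main topic of the conversation in a few words.",
}


def run_prompt(metric: str, prompt: str, transcript: str) -> str:
    """Stand-in for a model call; returns a placeholder completion."""
    return f"<completion for {metric}>"


def analyze(transcript: str) -> dict[str, str]:
    """Run every metric prompt concurrently and collect one completion per metric."""
    with ThreadPoolExecutor() as pool:
        futures = {
            metric: pool.submit(run_prompt, metric, prompt, transcript)
            for metric, prompt in PROMPTS.items()
        }
        return {metric: future.result() for metric, future in futures.items()}


results = analyze("Customer: My order is late. Agent: I'm sorry, let me check.")
```

Each key in `results` corresponds to one metric, which is the shape you would then map onto Fields.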

Your prompts can be written in natural language and should describe in detail how you'd like your Analyst to score the conversation:

Example Prompts: To see real prompts, create a Conversation Analyst from one of the available Conversation Analyst Templates.

You can also view a host of example prompts you can copy and paste, as well as learn some more best practices, in the Sample Conversation Analyst Prompts section.

Formatting prompt responses

You can read more about the different prompt response formats here.

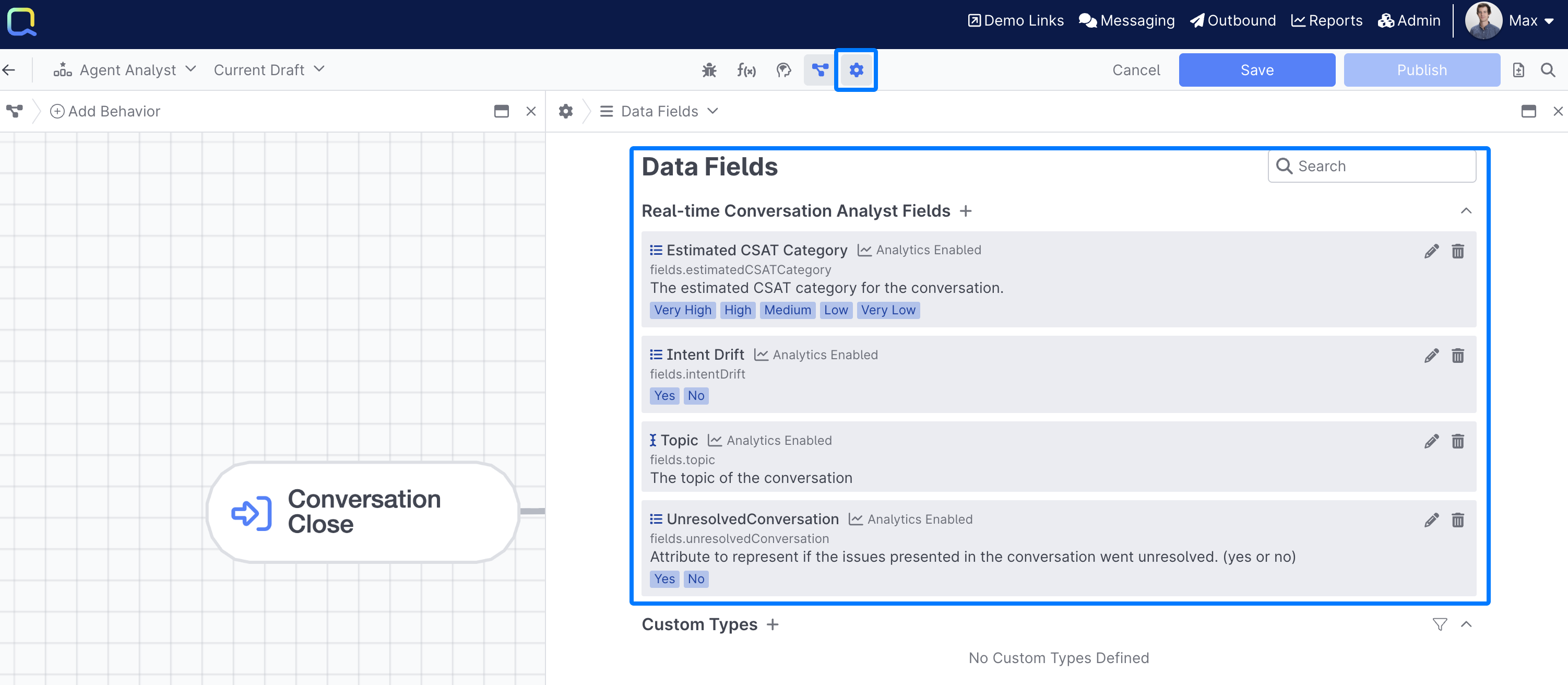

Creating Fields

Once you've got your prompts created, you'll need a way to store your prompt completions. You can do this either by creating new Conversation Analyst specific fields via the Fields option in the Configuration Panel, or by leveraging new or existing Conversation Custom Fields, that map to each of the prompts you're creating:

If you do create Data Fields within your Conversation Analyst, ensure you've selected the Include In Analytics option. By default, Data Fields created within a particular AI Studio Project are not available in analytics.

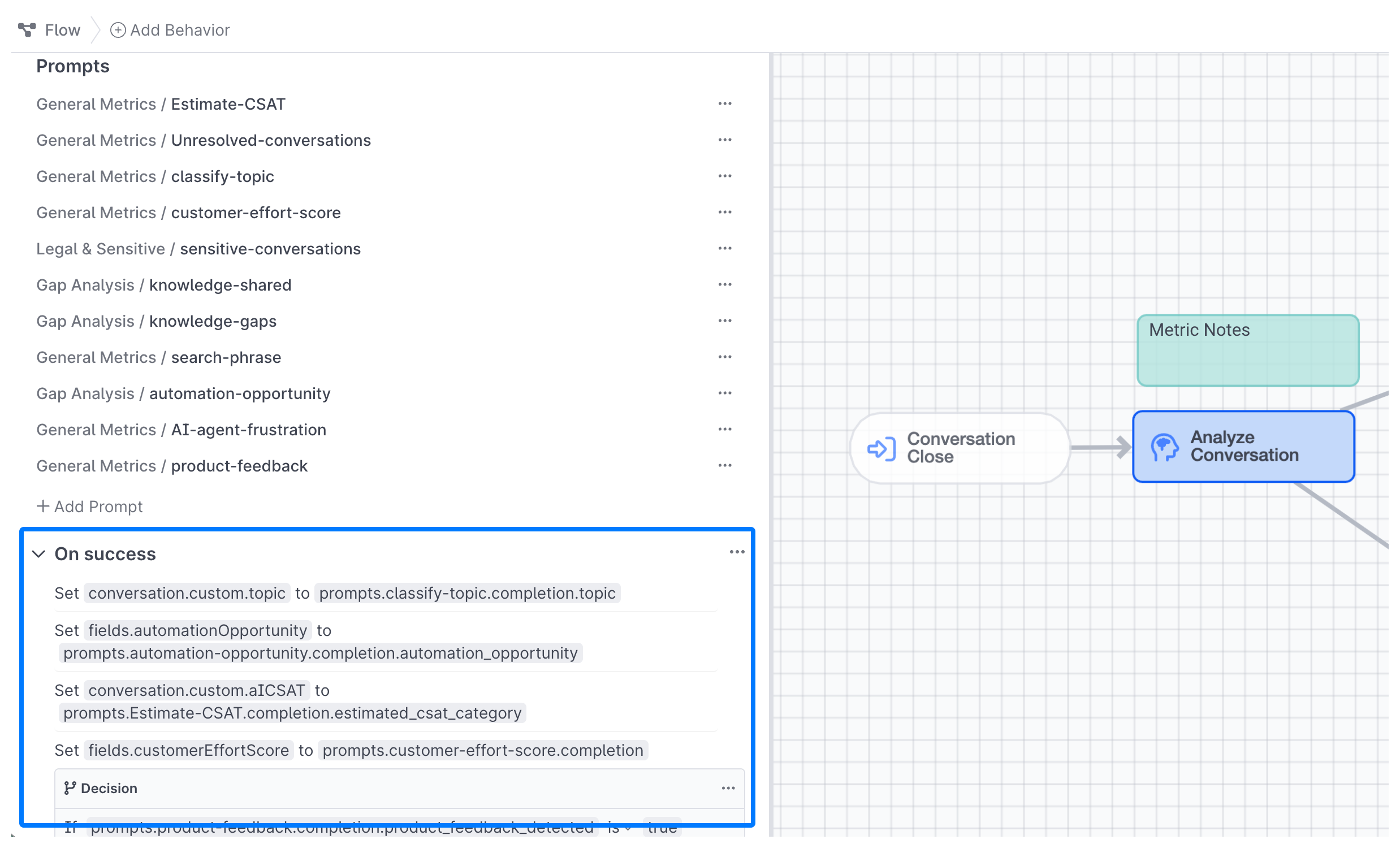

Setting Fields

Once you've got your Fields created, you can set them either in the On Success portion of your Prompt Behavior:

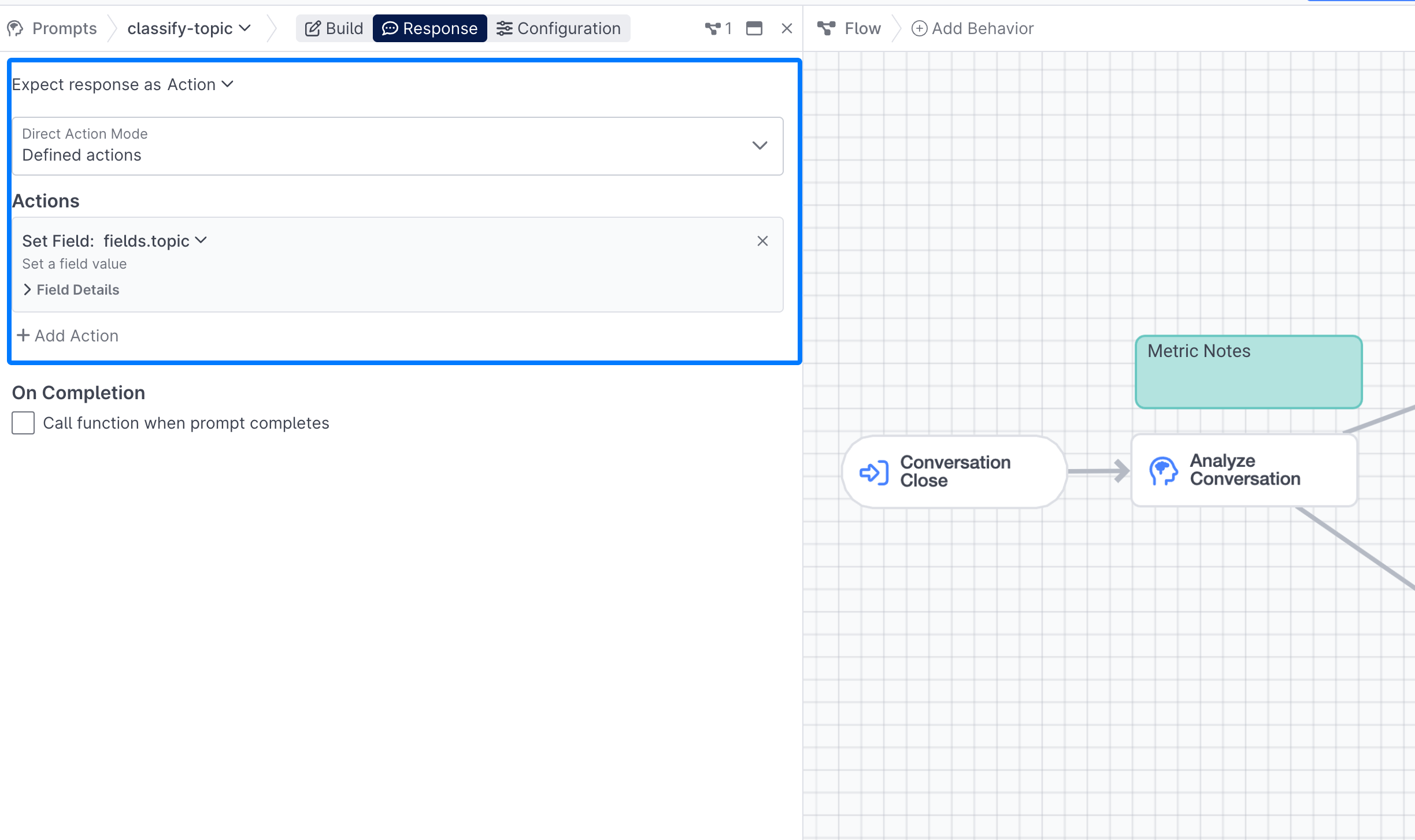

or in the Response tab of a Prompt, by selecting **Action** for Expect response as, selecting Defined Actions, and then adding a Set Field action:

Generating Human Agent Metrics

In addition to conversation-level metrics that can be integrated into other Insights reports, your Conversation Analyst can create metrics that integrate into your Agent Insights reports. These prompts can be written in the same natural language as your conversation-level prompts, but they require a slightly different setup if you'd like them to appear in Agent Insights.

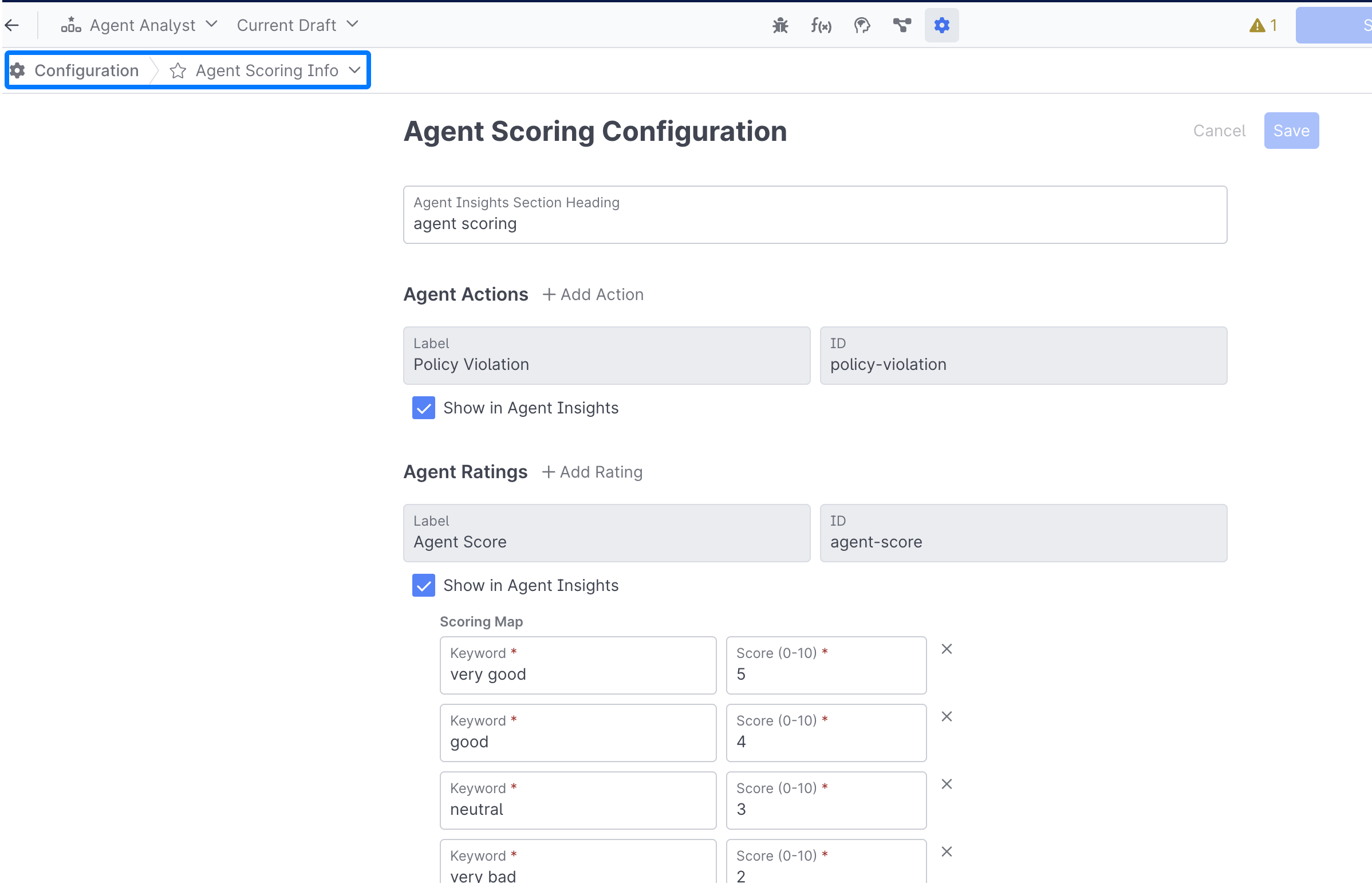

To get started, navigate to Agent Scoring Info in the Configuration Panel:

From here, you can create a name for the Agent Insights section that your AI generated metrics will appear under:

Types of Human Agent Metrics

There are two types of Human Agent Metrics your Analyst can generate:

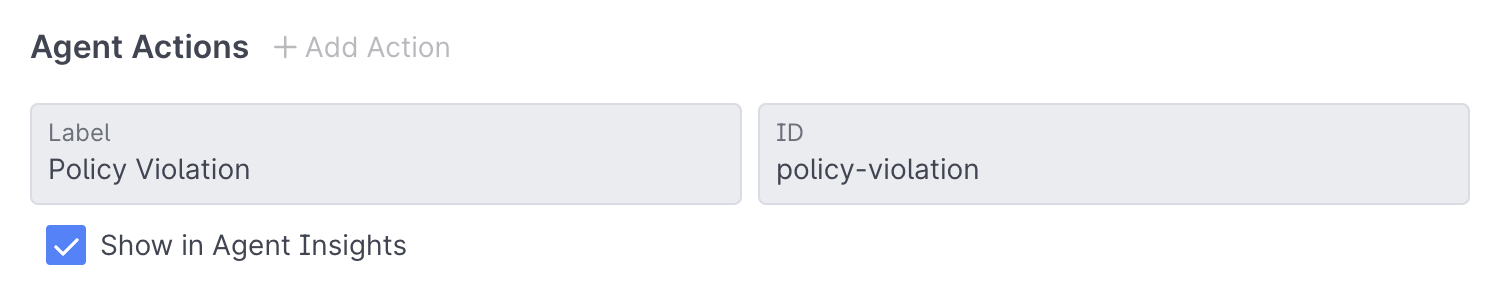

Agent Actions: Boolean metrics that are great for confirming whether or not a particular action was performed by the agent (proper greeting, gave inaccurate information, etc.).

Agent Actions consist of a Label, an ID, and a checkbox for whether or not you want that action to appear in Agent Insights:

It might seem strange to not include a metric in Agent Insights. However, because your Conversation Analyst can take other actions, like calling APIs or AI Services, you may want to respond in some other way when you notice an agent violating a policy, such as generating an email or sending a Slack or Microsoft Teams message.

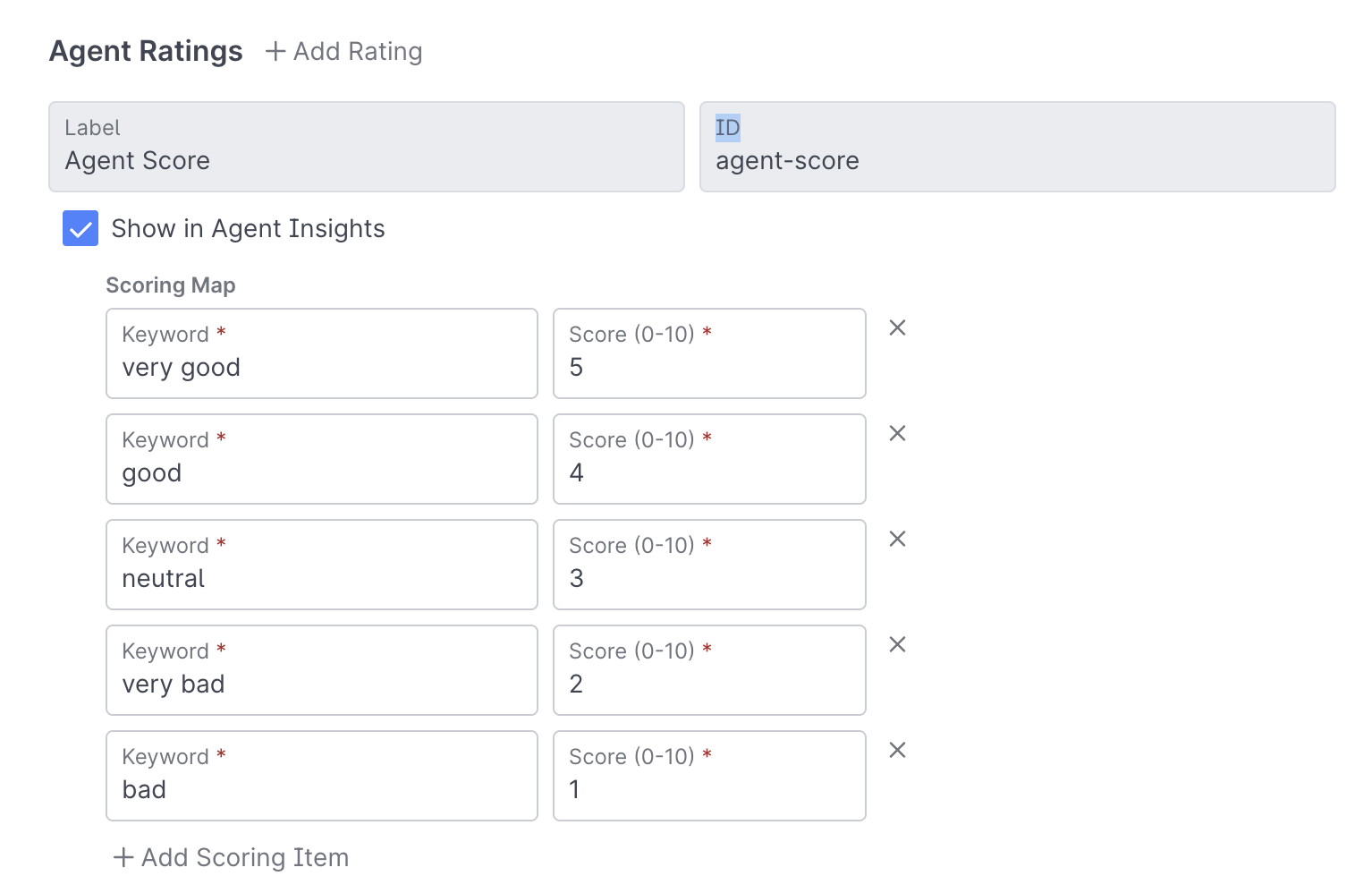

Agent Ratings: Scores from 0 to 10 that are great for grading agents on your specific criteria, like whether they used the proper greeting, how closely they adhered to a given policy, and much more:

Agent Ratings consist of both a keyword and a score.
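To make the two metric types concrete, here is a minimal sketch of how they could be modeled. The class and attribute names are hypothetical and not part of Quiq, and the reading that each rating keyword stands for one 0 to 10 score is an assumption based on the description above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentAction:
    """A boolean metric: did the agent perform this action?"""
    label: str
    action_id: str
    show_in_agent_insights: bool = True


@dataclass(frozen=True)
class AgentRating:
    """One rating option: a keyword and the 0-10 score it is assumed to stand for."""
    keyword: str
    score: int

    def __post_init__(self) -> None:
        if not 0 <= self.score <= 10:
            raise ValueError(f"score must be between 0 and 10, got {self.score}")


# An action kept out of Agent Insights, for example one that only drives a notification.
violated_policy = AgentAction("Violated policy", "violated-policy", show_in_agent_insights=False)

# Hypothetical rating options for a single rating category.
overall_rating = [AgentRating("poor", 2), AgentRating("good", 8)]
score_by_keyword = {option.keyword: option.score for option in overall_rating}
```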

Configuring your Prompts for Human Agent Metrics

To properly attribute a score or action to a particular agent, you'll need to configure a couple of things within your prompt:

Conversation History Block

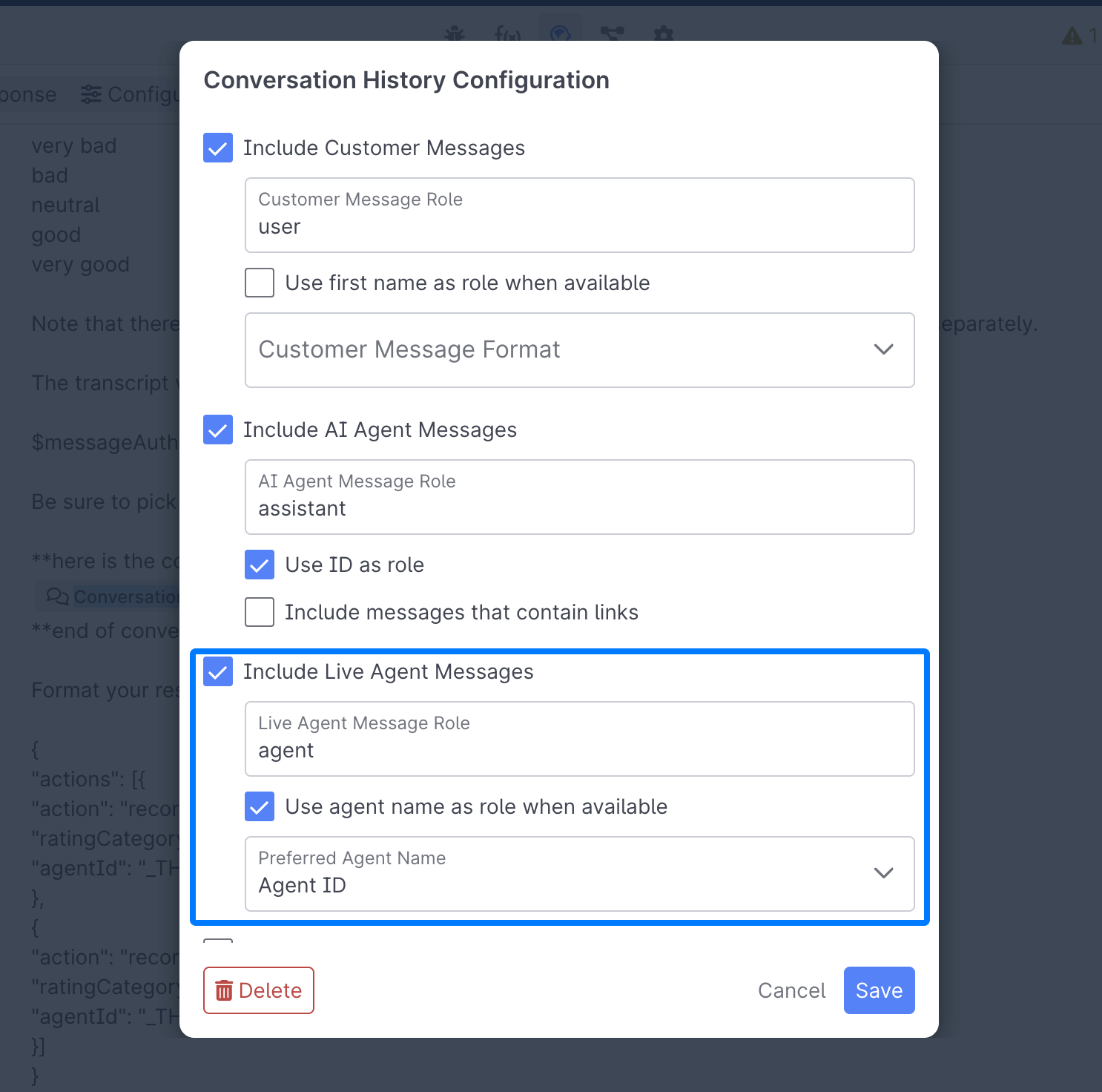

When generating Agent Insights metrics, you'll need to ensure that live agent messages are included and that you're using the Agent ID. This ensures your Analyst can properly attribute a score to each agent:

Check the "Include Live Agent Messages" Checkbox, and ensure that you use the Agent ID as the preferred agent name.
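The sketch below illustrates why the Agent ID matters: when each agent line in the history is labeled with an ID, the model can echo that ID back in its response. The `Message` type and `render_history` function are hypothetical and only approximate what a conversation history block might contain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    author_role: str                 # "customer" or "agent" (assumed values)
    text: str
    agent_id: Optional[str] = None   # set only for live agent messages


def render_history(messages: list[Message]) -> str:
    """Label each agent line with its agent ID so scores can be tied to a specific agent."""
    lines = []
    for message in messages:
        if message.author_role == "agent" and message.agent_id:
            speaker = message.agent_id
        else:
            speaker = message.author_role.capitalize()
        lines.append(f"{speaker}: {message.text}")
    return "\n".join(lines)


history = render_history([
    Message("customer", "I need my account balance."),
    Message("agent", "Sure, here it is.", agent_id="AGENT_002"),
])
```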

Formatting your Prompt Response

At the bottom of your Prompt, you'll need to define how your prompt response should be formatted. You'll want to do something similar to the following, with your particular agent rating, or agent action, replacing the ratingCategory, rating, and agentAction sections:

Format your response in our proprietary json action declaration exactly like this:

    {
      "actions": [
        {
          "action": "recordAgentRating",
          "agentId": "AGENT_001",
          "ratingCategory": "overall-rating",
          "rating": "good"
        },
        {
          "action": "recordAgentAction",
          "agentId": "AGENT_002",
          "agentAction": "violated-policy",
          "reason": "Failed to verify customer identity before providing account details"
        }
      ]
    }

Prompt Responses: You can see more examples of different prompt responses in the Sample Conversation Analyst Prompts section.
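When iterating on a prompt, it can help to check by hand whether a completion matches the format shown above. The following is a minimal sketch of such a check, not a Quiq utility: `check_response` is a hypothetical helper, and treating `reason` as optional is an assumption, since the example only shows it on the agent action.

```python
import json

# Keys assumed to be required per action type, based on the example response format.
REQUIRED_KEYS = {
    "recordAgentRating": {"agentId", "ratingCategory", "rating"},
    "recordAgentAction": {"agentId", "agentAction"},
}


def check_response(raw: str) -> list[str]:
    """Return the problems found in a completion; an empty list means it looks well-formed."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError as error:
        return [f"not valid JSON: {error.msg}"]

    actions = payload.get("actions") if isinstance(payload, dict) else None
    if not isinstance(actions, list):
        return ['missing top-level "actions" list']

    problems = []
    for index, action in enumerate(actions):
        kind = action.get("action") if isinstance(action, dict) else None
        if kind not in REQUIRED_KEYS:
            problems.append(f"actions[{index}]: unknown action {kind!r}")
            continue
        missing = REQUIRED_KEYS[kind] - set(action)
        if missing:
            problems.append(f"actions[{index}]: missing {sorted(missing)}")
    return problems


good = ('{"actions": [{"action": "recordAgentRating", "agentId": "AGENT_001", '
        '"ratingCategory": "overall-rating", "rating": "good"}]}')
bad = '{"actions": [{"action": "recordAgentAction", "agentId": "AGENT_002"}]}'
```

Here `check_response(good)` returns an empty list, while `check_response(bad)` reports that `agentAction` is missing from the first action.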

Handling your Prompt Response

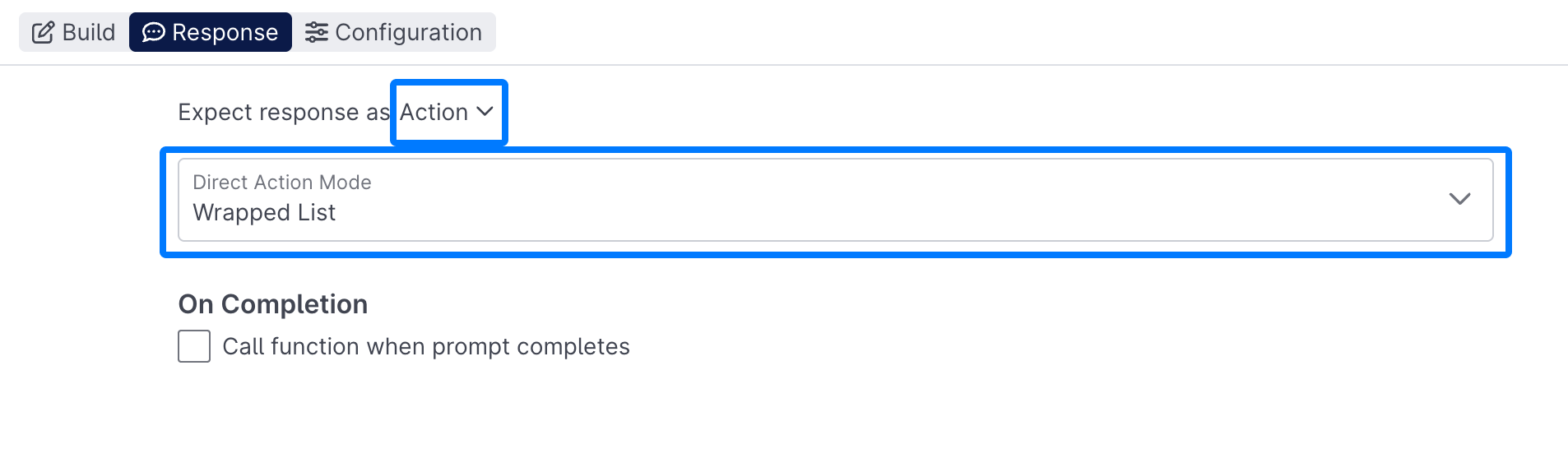

Under the Response tab, you'll want to select Action for the expected response, and Wrapped List for the mode:

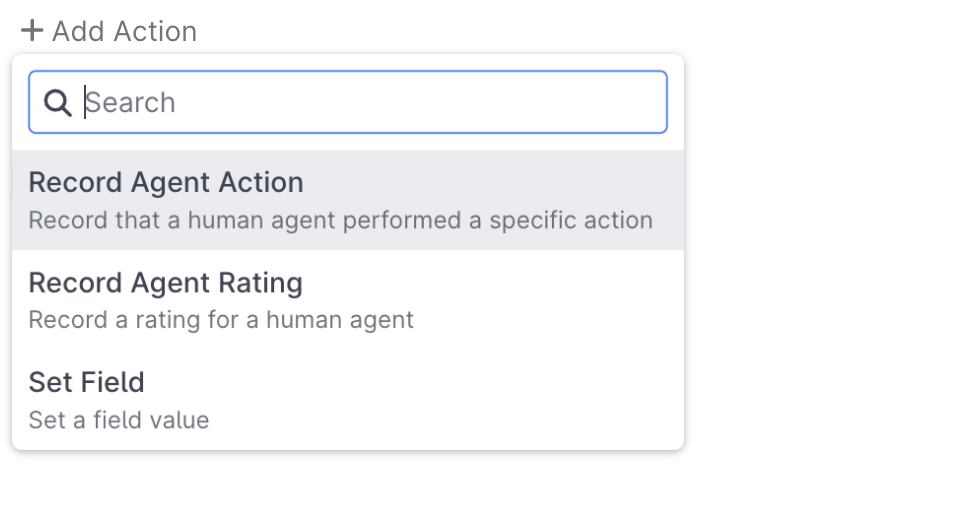

Next select the Add Action button and select either Record Agent Action or Record Agent Rating from the dropdown:

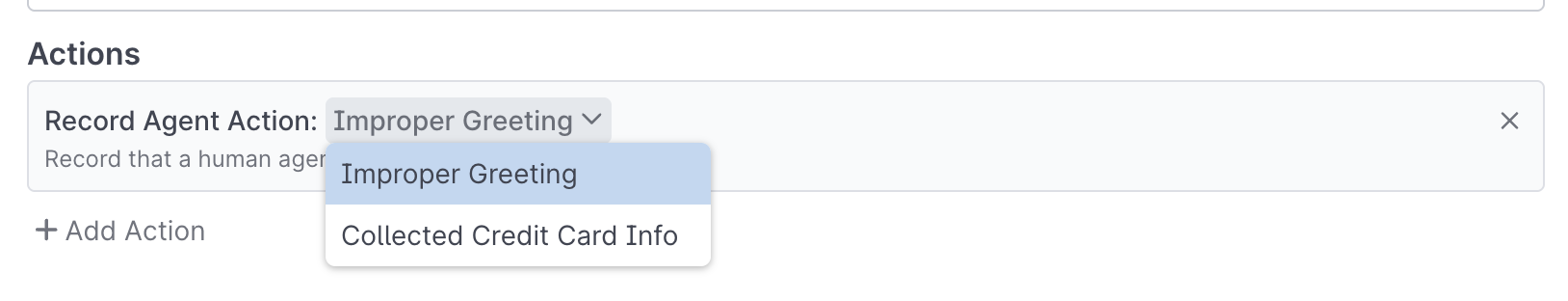

Then select the action or rating you'd like to record:

Other Actions

Because they're built in AI Studio like all other project types, Conversation Analysts aren't limited to generating metrics. They also have access to a range of other important behaviors:

Search Behavior: Enables them to leverage AI Resources just like other project types to identify knowledge that could have been shared, or to look for gaps in existing knowledge.

Execute Logic: Enables the Analyst to conditionally generate certain metrics or take certain actions, for example only using an open-ended topic prompt if the default Topic prompt couldn't find a match.

Verify Claim: Allows your Analyst to corroborate claims made by an AI Agent or human agent, or corroborate its own Search results and findings.

Call API: Calls out to an external API when specific conditions are met, whether that's updating a record or case in an external system, or notifying your compliance team of a conversation where a particular policy was discussed.

Call AI Service: Enables the Conversation Analyst to tap into an existing or new AI Service to perform some workflow, like triggering an email or Slack message.
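The Execute Logic example above, falling back to an open-ended topic prompt only when the default Topic prompt finds no match, can be sketched as follows. This is plain Python for illustration, not AI Studio logic; both prompt functions and the topic list are hypothetical stand-ins.

```python
from typing import Callable, Optional


def choose_topic(
    match_known_topic: Callable[[str], Optional[str]],
    describe_topic_freely: Callable[[str], str],
    transcript: str,
) -> str:
    """Use the default topic prompt first; fall back to the open-ended one only on no match."""
    topic = match_known_topic(transcript)
    if topic is not None:
        return topic
    return describe_topic_freely(transcript)


# Hypothetical stand-ins for the two prompts.
KNOWN_TOPICS = ("billing", "shipping", "returns")


def match_known_topic(transcript: str) -> Optional[str]:
    """Pretend default Topic prompt: return the first known topic mentioned, if any."""
    lowered = transcript.lower()
    return next((topic for topic in KNOWN_TOPICS if topic in lowered), None)


def describe_topic_freely(transcript: str) -> str:
    """Pretend open-ended prompt: summarize with the first 30 characters."""
    return "other: " + transcript[:30]
```

Because the open-ended prompt runs only when needed, most conversations would produce a topic from the fixed list, which keeps reporting categories consistent.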

Testing & Debugging

Learn how to test your Conversation Analyst, analyze and tweak prompts, and create reusable tests.

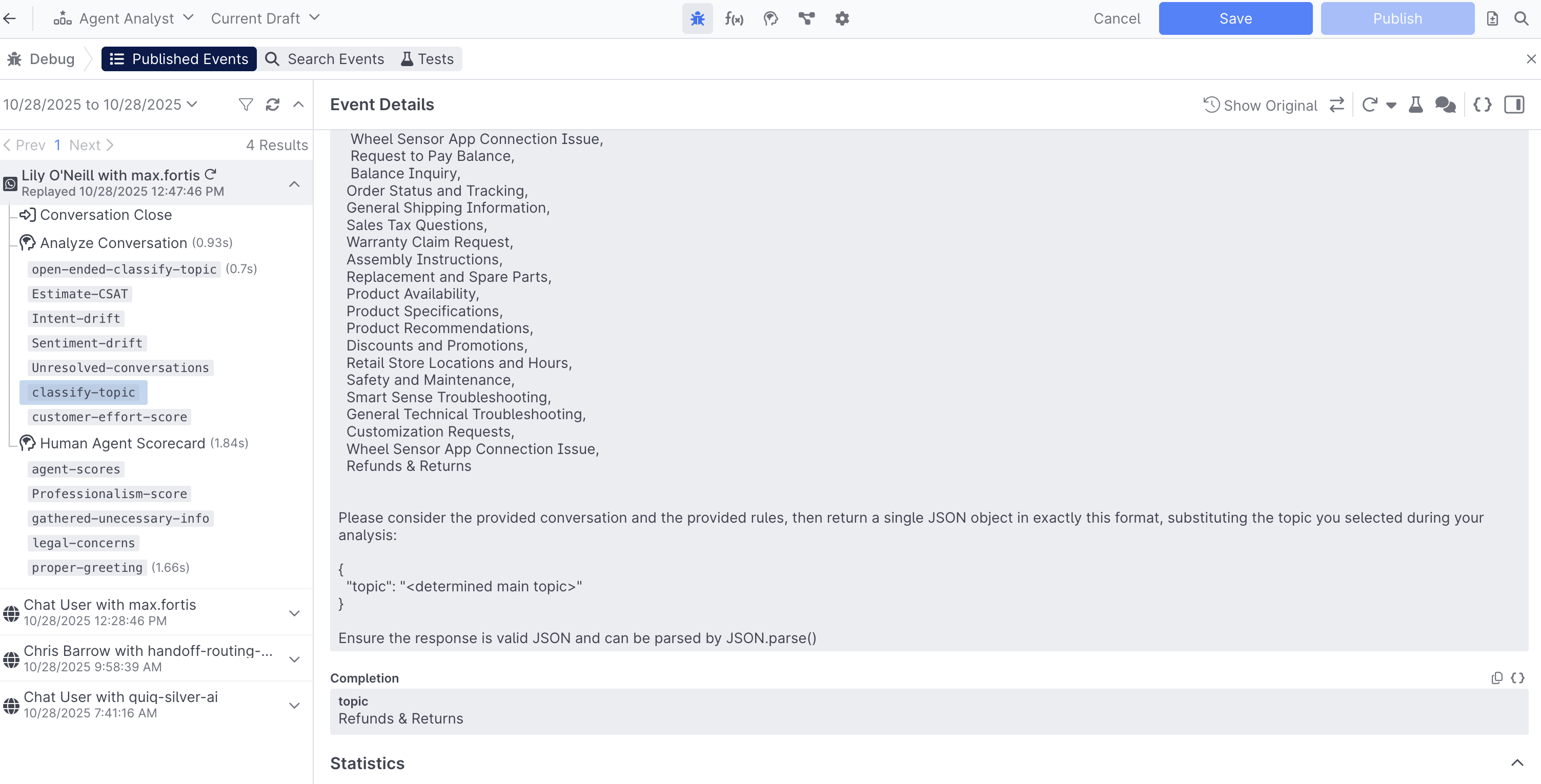

Search Events

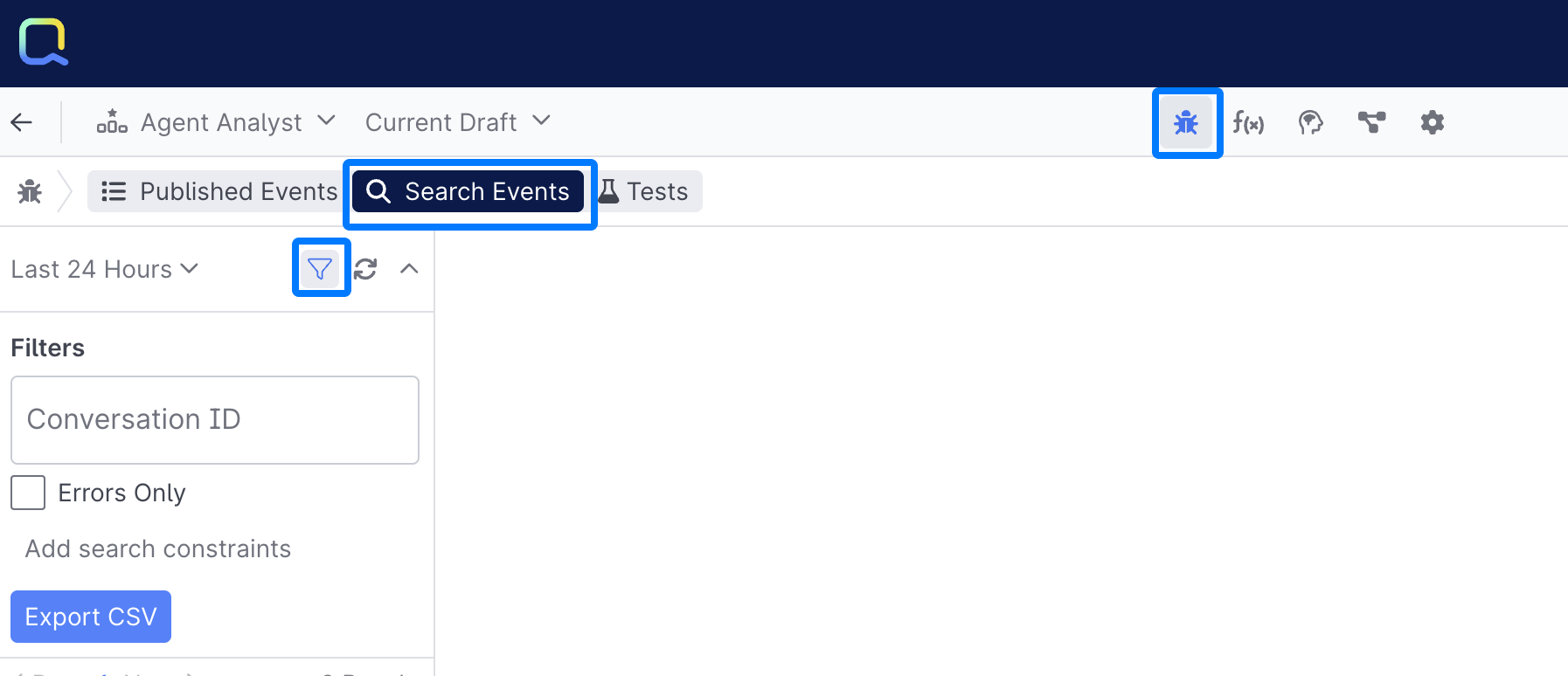

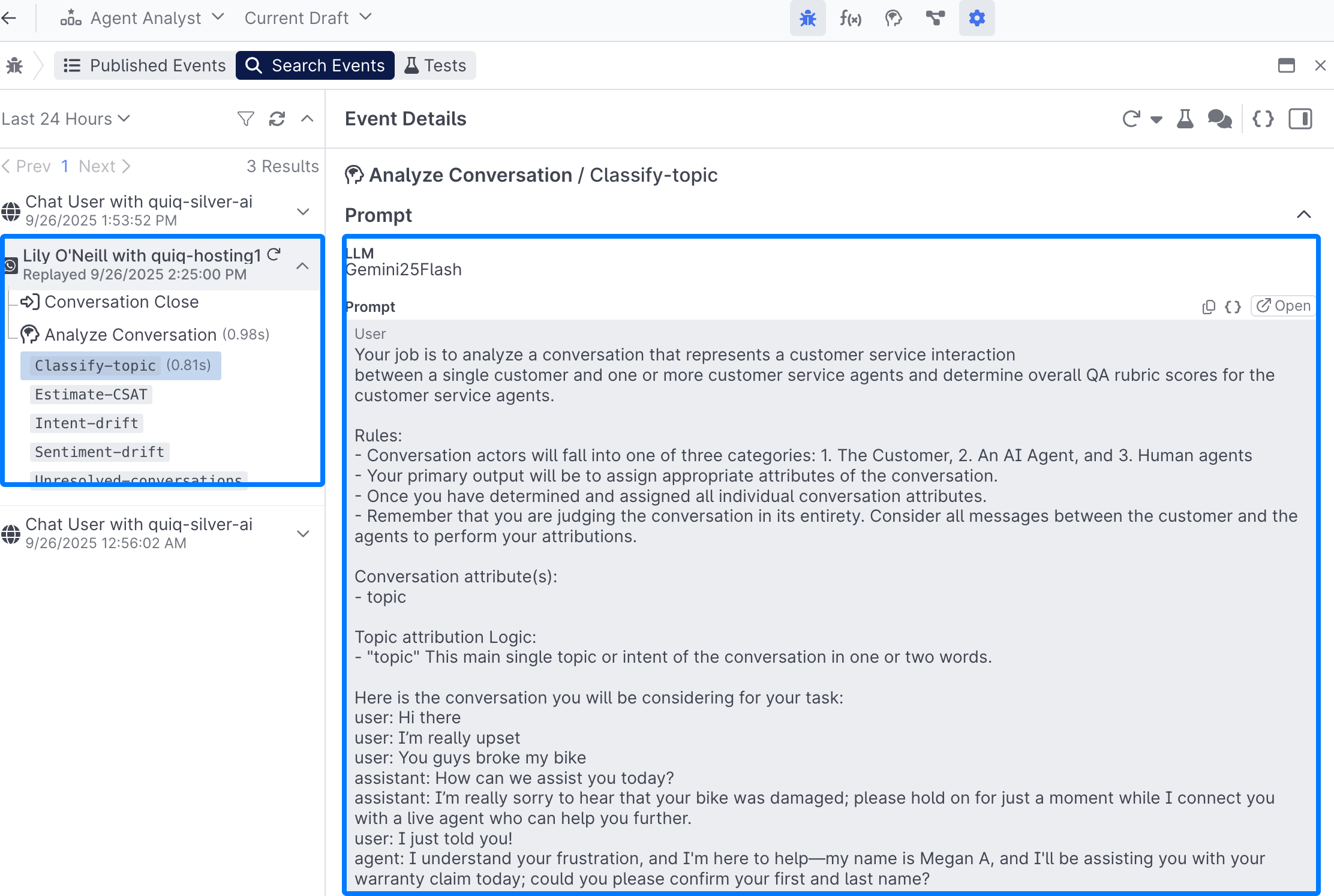

Now that you've got your Conversation Analyst configured, you'll want to run it against real conversations to see how it analyzes them. Open the Debug Workbench and click Search Events, then click the Filter icon to search by a Conversation ID (CID):

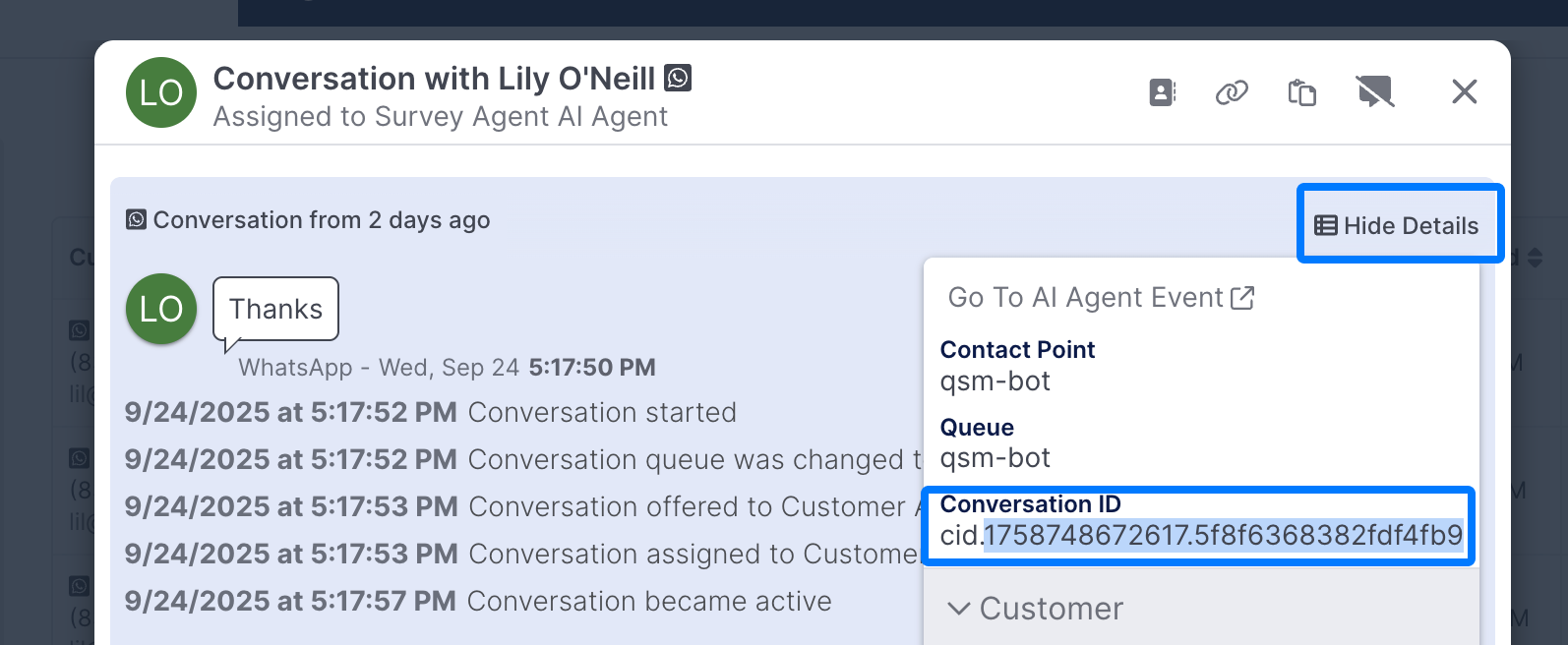

Finding a Conversation ID: You can grab CIDs by opening a Transcript and selecting the View Details button next to a conversation:

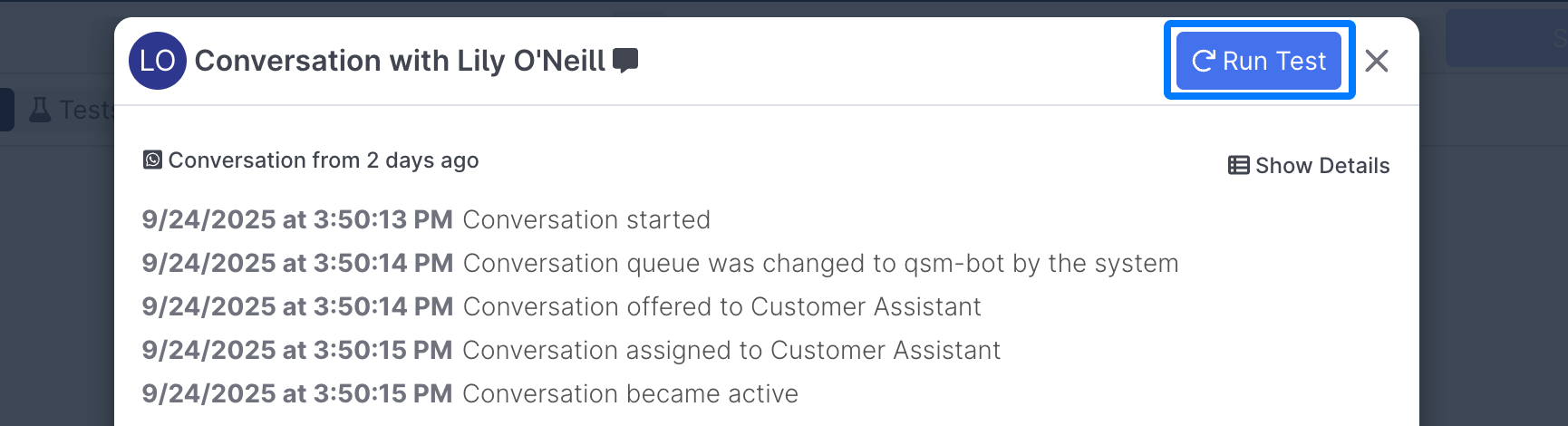

Once you've done that, the conversation will show up in the event list. Open it and click the Run Test button:

Now you can see how your Analyst is behaving on real conversations without having to set it live:

Debug

Once your Conversation Analyst is live, you'll see all real conversations show up, and you can review each prompt and prompt completion to ensure your Analyst is behaving as expected. Learn more about Debug Workbench.

Test

Test sets aren't currently supported.

Deploy

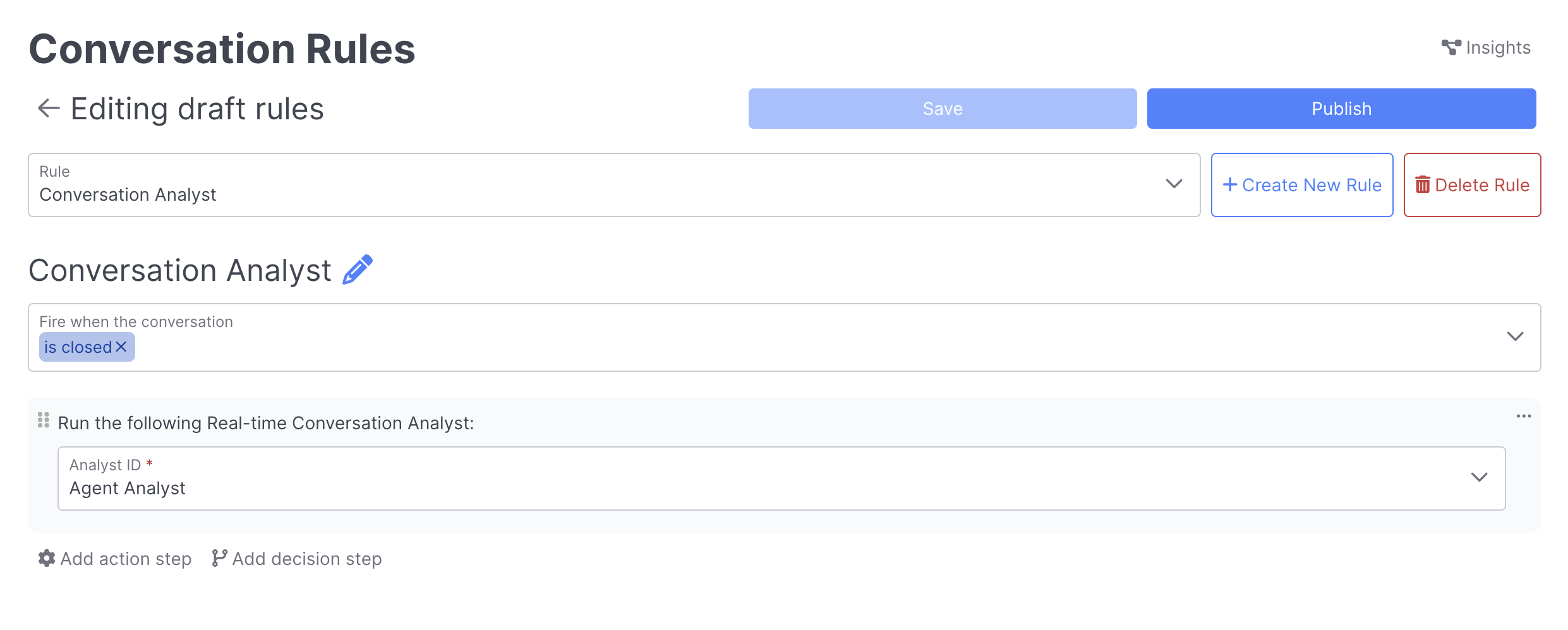

Once you've saved and published your Conversation Analyst, you can set it up within Conversation Rules. Conversation Analysts run when a conversation closes, and you can add additional logic or criteria to your rule:

Currently, a Quiq employee must add the Run Conversation Analyst action.

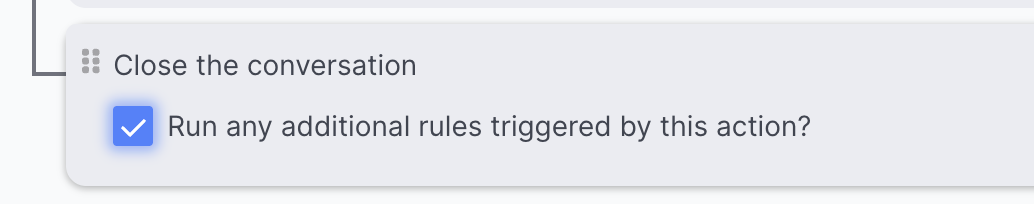

If you notice your Conversation Analysts aren't running, you may need to audit your Conversation Rules and confirm that everywhere you use the Close the conversation action, you've checked the Run any additional rules triggered by this action checkbox.

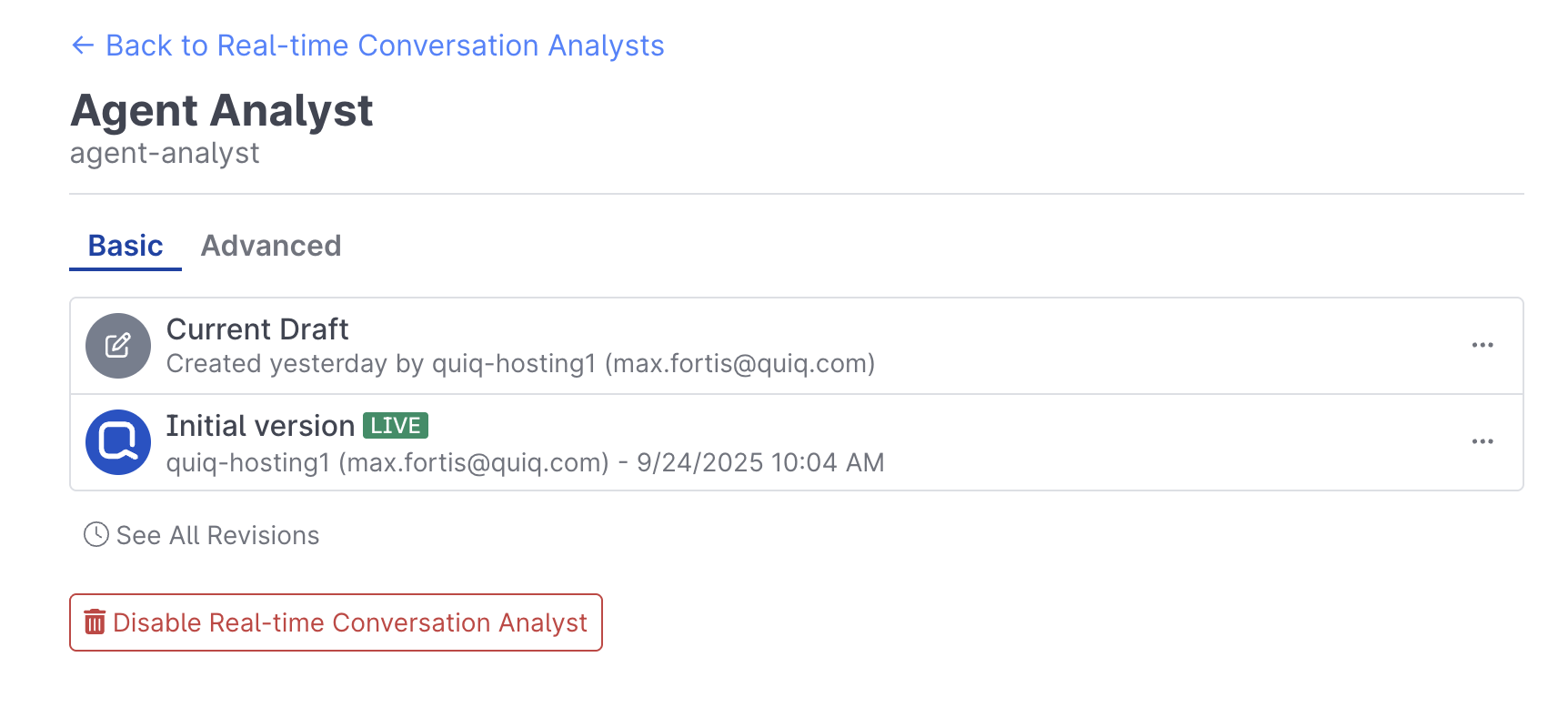

Publishing and Versioning

Analysts are published and versioned just like other project types, and can be exported and imported as desired:

Measure

Once you've got your Conversation Analyst up and running, you can leverage the metrics it generates across Quiq Insights.

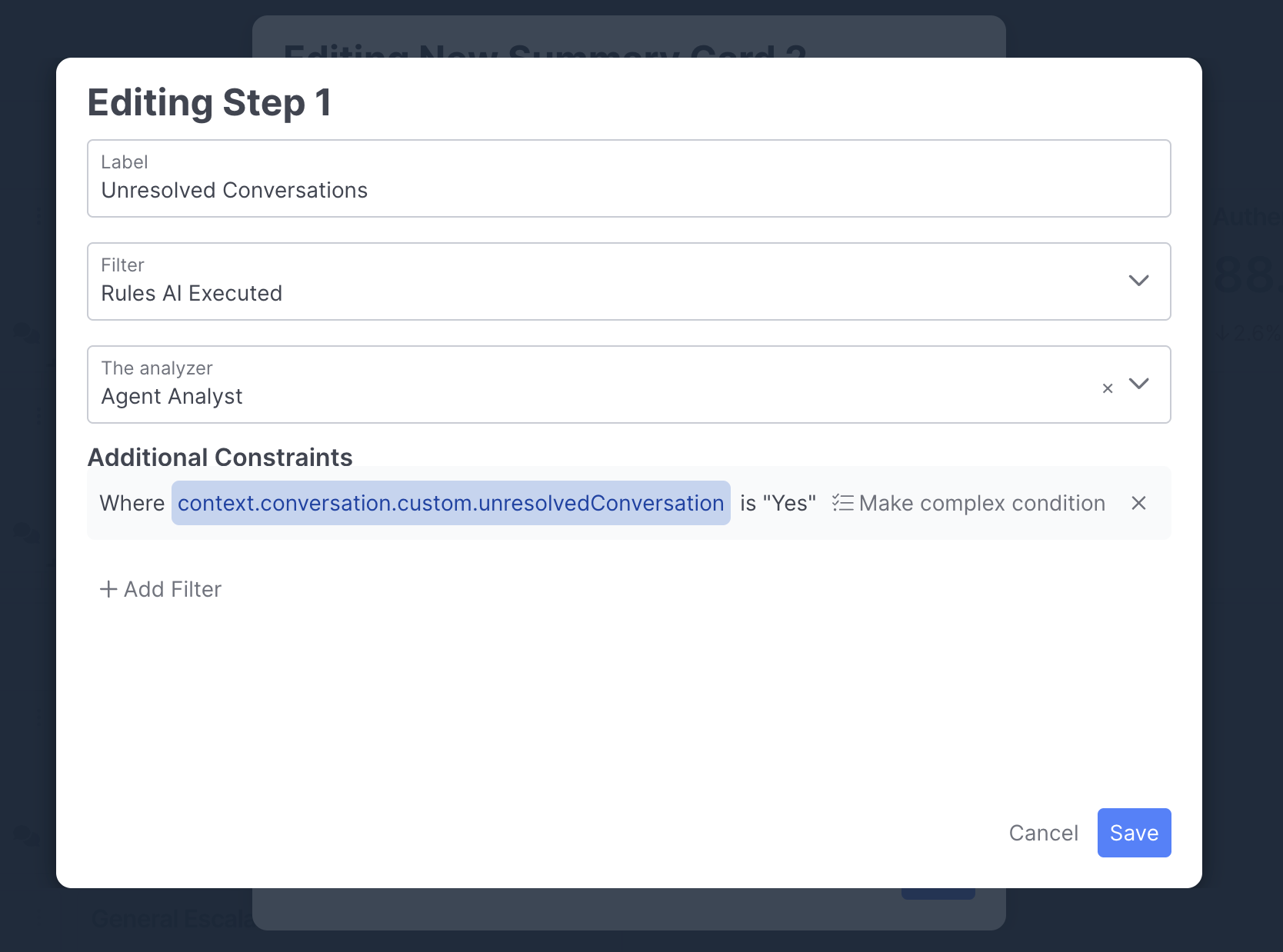

The fields that you've set can be integrated into your Cards, Funnels, and more. Simply filter based on Rules AI Executed, select the Real Time Conversation Analyst, and then filter based on the Field you'd like to report on:

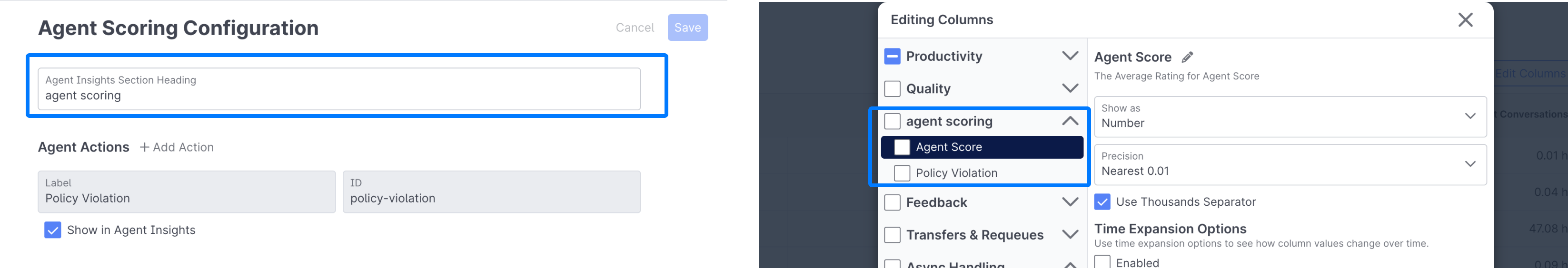

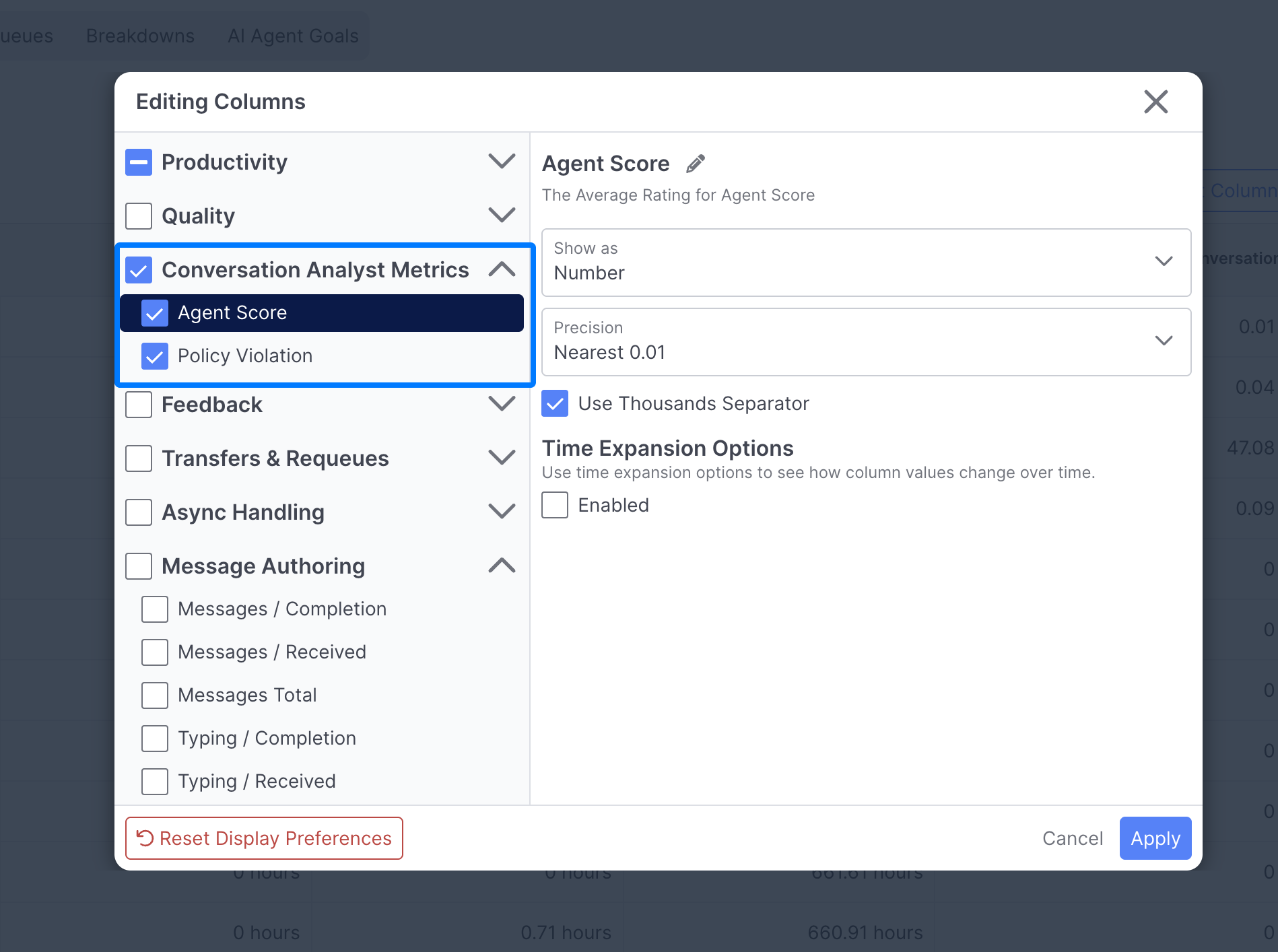

To integrate your scores into Agent Insights, ensure you've followed the steps outlined in the Generating Human Agent Metrics section. Once configured correctly, they will appear as columns in your Agent Insights views:
