Lesson 4: Knowledge Assistant

Overview

This assistant builds on Lesson 3 by adding more pre- and post-generation guardrail prompts (message-sensitive, answer-tone) and an essential Claim Verification (fact-checking) step that ensures generated content is substantiated by retrieved data.

This assistant is grounded in QuiqSilver Bikes, a fictitious retailer of Cannondale bikes. The AI assistant on the QuiqSilver website uses flows and techniques similar to those covered in the Academy lessons.

Video Walkthrough

What You'll Learn

This lesson presents a more fully fleshed-out knowledge assistant within AI Studio. If you haven't completed Lesson 3, please complete it first.

In this lesson you'll learn how to:

- Understand post-generation guardrails and how to use a range of techniques to check a message for accuracy and tone before sending it

- Understand the Verify Claim behavior and how it helps ensure accurate responses

In this section you'll find a written guide you can follow along with. You can view walkthroughs of all the available lessons here.

For help getting started and creating a template, refer to the intro guide.

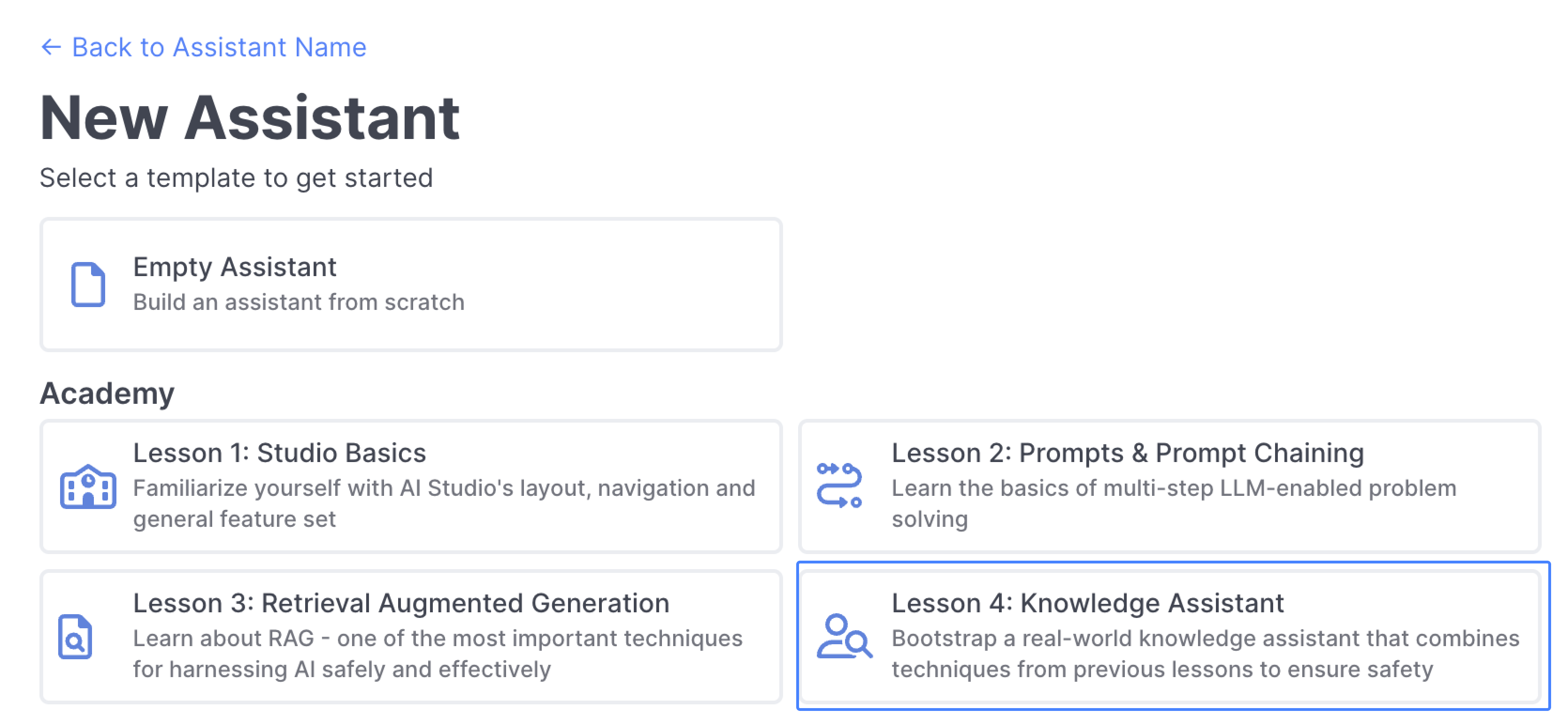

Select the Template

Select Lesson 4: Knowledge Assistant to get started:

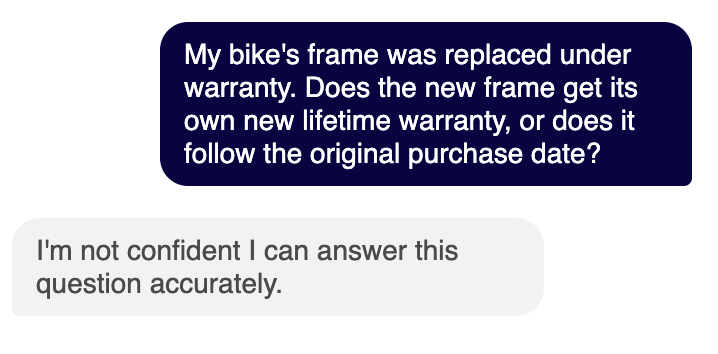

Testing your Assistant

Enter the same question from Lesson 3 that the assistant generated an answer for: "My bike's frame was replaced under warranty. Does the new frame get its own new lifetime warranty, or does it follow the original purchase date?" This time, your assistant does not answer the question the way it did in Lesson 3:

Answer Variance

You may find your assistant sends back a response like "I'm unsure, please reach out to your local QuiqSilver bikes location for more info". This sort of reply will often make it past the Verify Claim step because it doesn't make any claim that the referenced content fails to support.

The Verify Claim step is designed to keep the assistant from responding with plausible-sounding answers that are not grounded in the data it has access to.

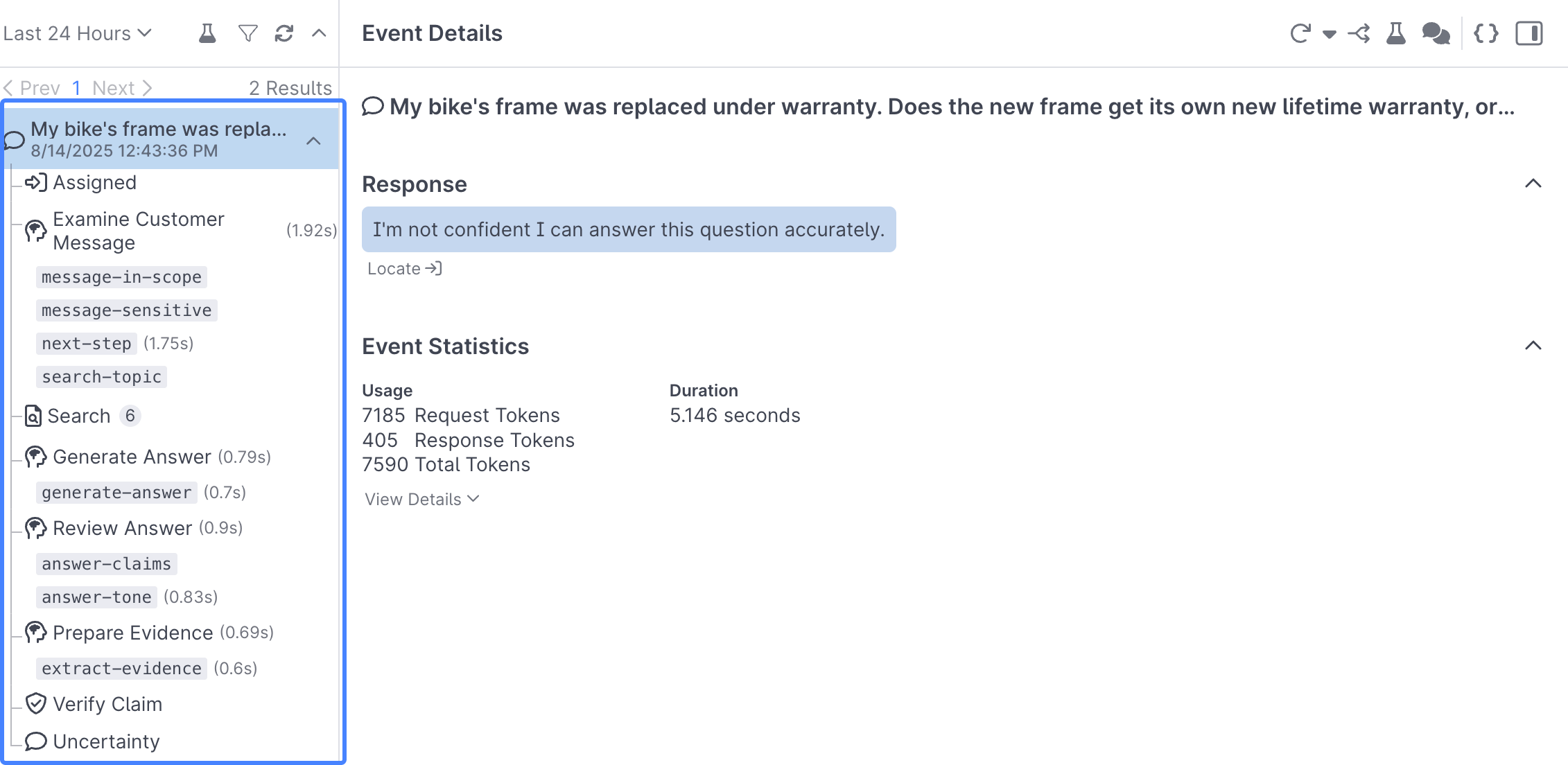

Now open the Debug Workbench and select your phrase. You'll notice there's a lot more going on around answer generation than in Lesson 3:

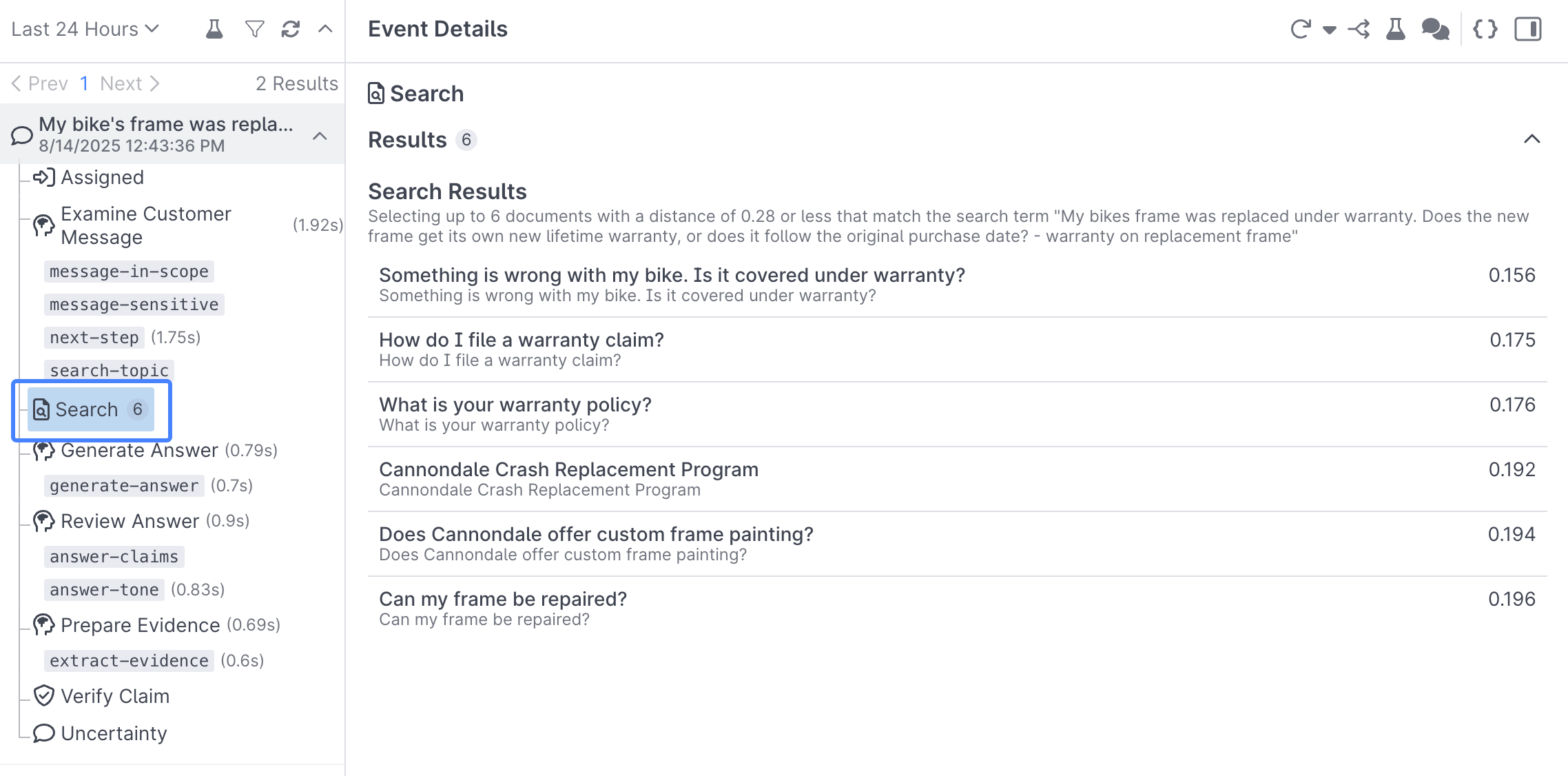

If you click into either Search or Generate Answer, you'll see similar documents being used and roughly the same completion the assistant sent you in Lesson 3.

Post Generation Guardrails

In Lesson 3 we created a message-sensitive prompt, and tied it to our message-in-scope prompt to ensure we didn't respond to inquiries we didn't want our assistant to answer.

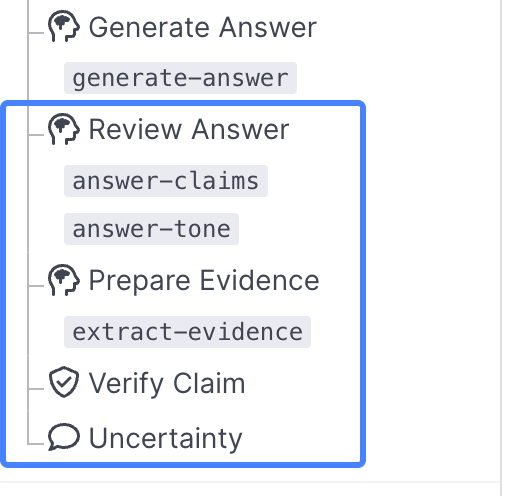

Now we can see a series of behaviors after the Generate Answer step that are designed to make sure we only send accurate, brand-approved information:

answer-claims & answer-tone

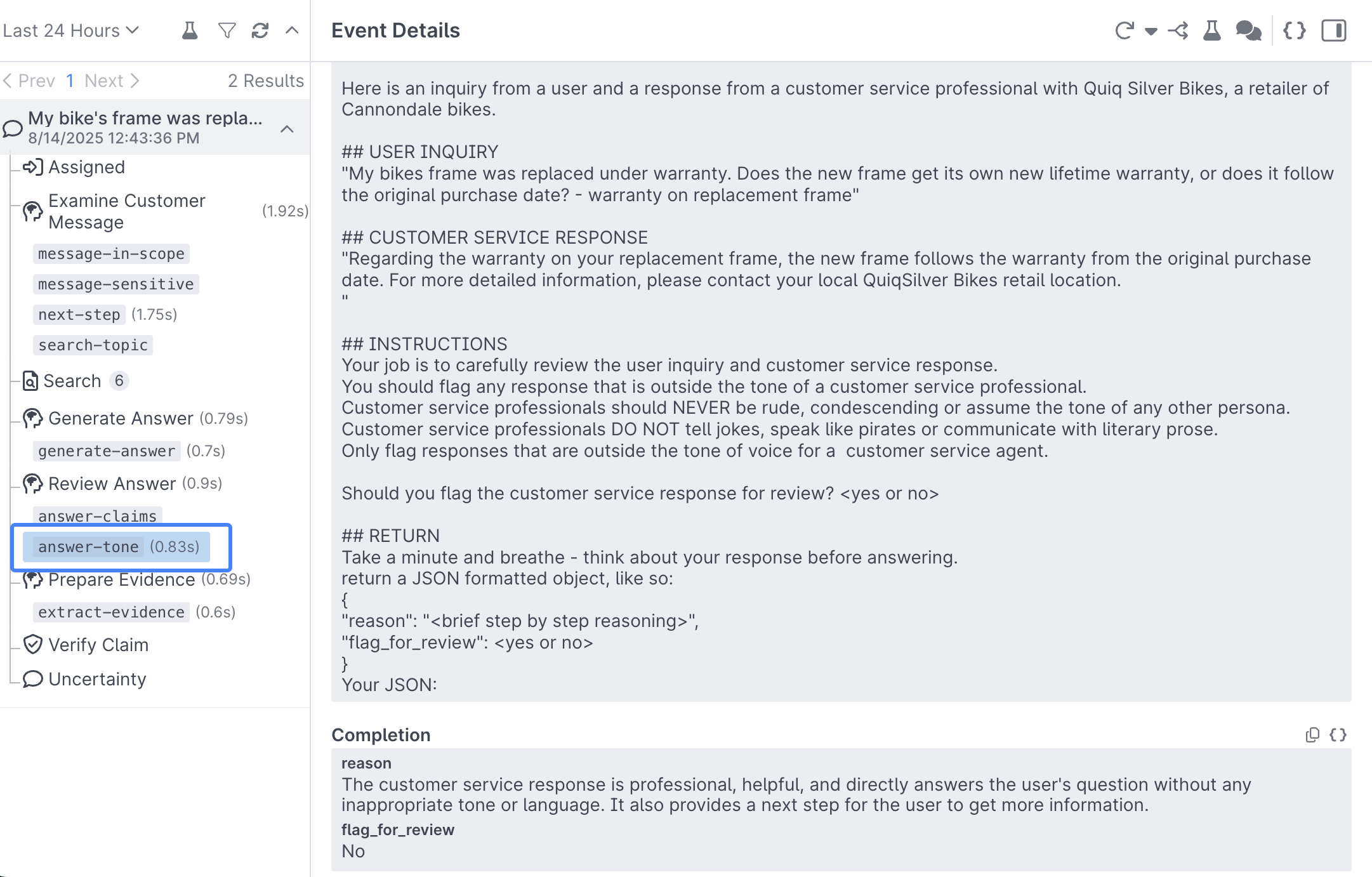

Select the answer-claims prompt and the answer-tone prompt and take note of what each one is trying to do.

answer-claims uses an LLM to extract any factual claims from the Generate Answer output, and answer-tone ensures that the assistant answers the question in a professional manner befitting QuiqSilver Mountain Bikes.
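To make the hand-off between these prompts concrete, here is a minimal sketch of how a flow might consume their results. It assumes, hypothetically, that answer-claims returns JSON like `{"claims": [...]}` and answer-tone returns `{"professional": true}`; these formats, and the names `ReviewResult` and `parse_review`, are illustrative assumptions rather than AI Studio's actual output or API.

```python
import json
from dataclasses import dataclass


@dataclass
class ReviewResult:
    """Hypothetical combined result of the answer-claims and answer-tone prompts."""

    claims: list[str]  # factual claims extracted from the generated answer
    tone_ok: bool  # True if the answer reads as professional and on-brand


def parse_review(claims_json: str, tone_json: str) -> ReviewResult:
    # Assumed prompt outputs: {"claims": [...]} and {"professional": true|false}.
    raw_claims = json.loads(claims_json).get("claims", [])
    professional = json.loads(tone_json).get("professional", False)
    # Drop blanks and non-strings so the evidence step only sees real claims.
    claims = [c.strip() for c in raw_claims if isinstance(c, str) and c.strip()]
    return ReviewResult(claims=claims, tone_ok=bool(professional))


# Example: one claim extracted from a generated answer, tone judged acceptable.
result = parse_review(
    '{"claims": ["A replacement frame starts a new lifetime warranty.", "  "]}',
    '{"professional": true}',
)
print(result.claims)   # ['A replacement frame starts a new lifetime warranty.']
print(result.tone_ok)  # True
```

Keeping the claims as a plain list makes it easy for a later step to look up supporting evidence claim by claim.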

Preparing Evidence and Verifying Claims

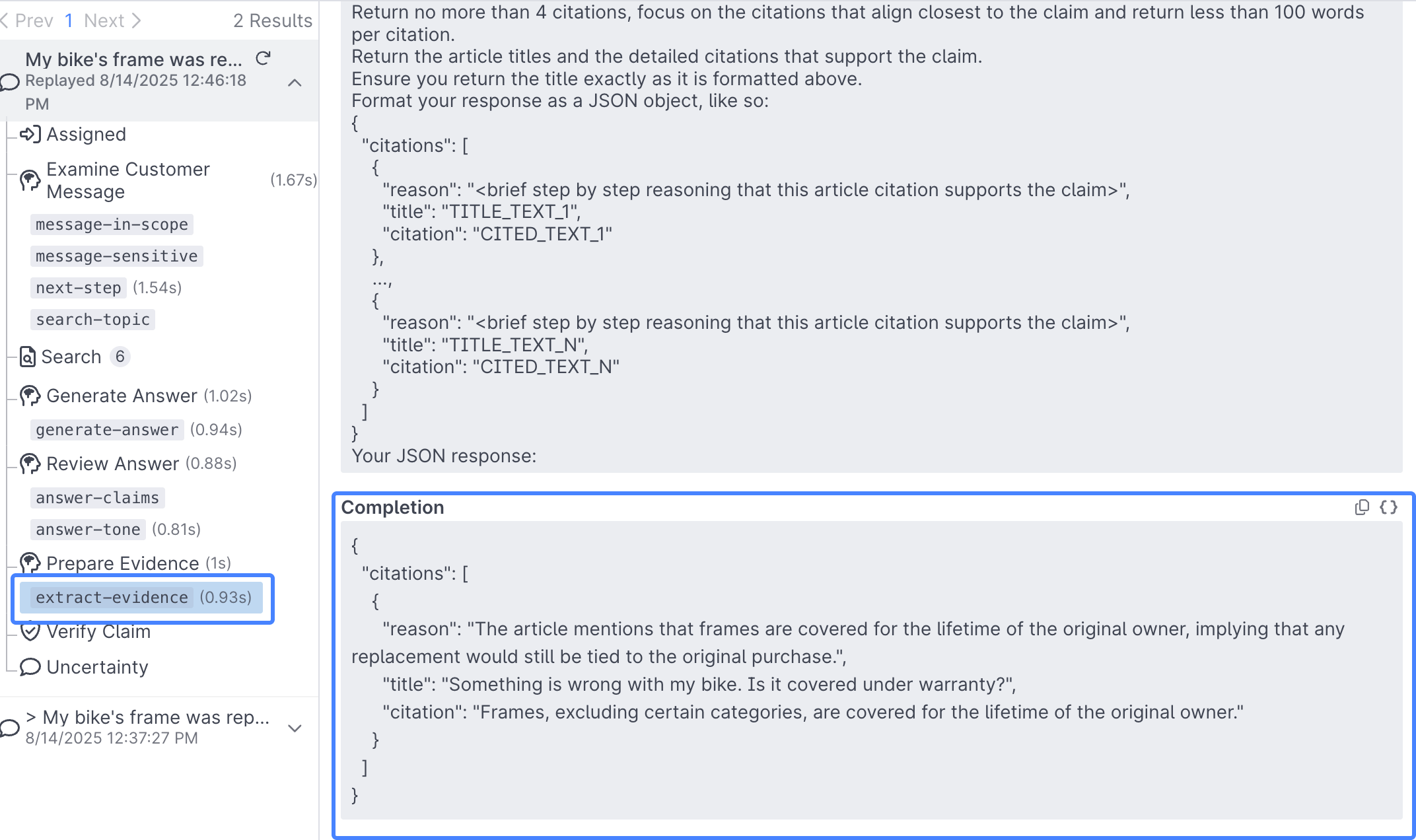

Next, click into the Prepare Evidence behavior and read through the prompt. It's designed to identify any evidence within the articles found during Search that supports the assistant's claims, and to prepare that evidence as citations:

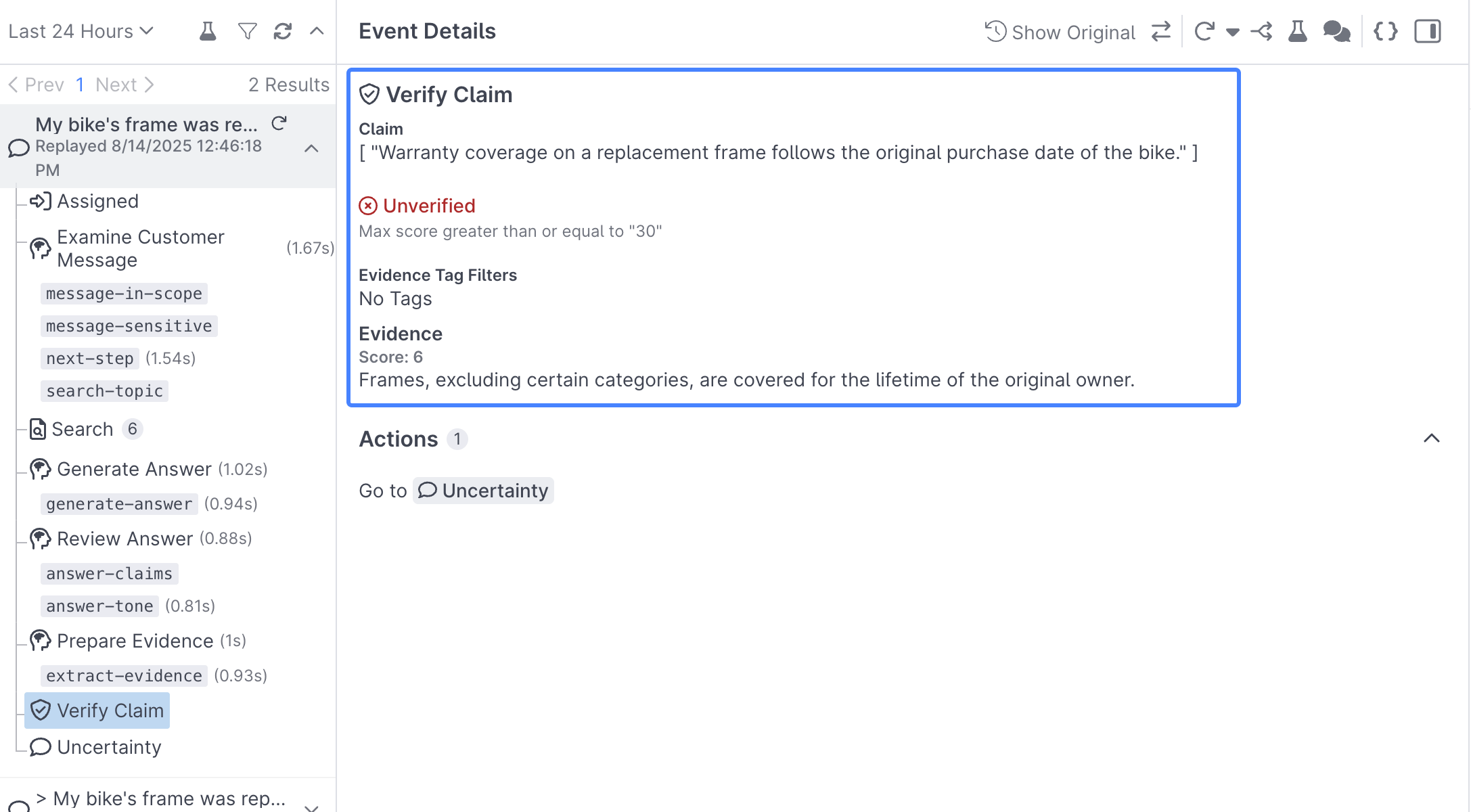

Next, select the Verify Claim behavior. This behavior looks at the citations provided in the previous step and determines whether the assistant has enough evidence to back up the answer it generated:

The Claim, Score, and Evidence provided by the Assistant. Yours will vary slightly from what's shown here.

In this case, you can see that there is not enough evidence to support the claim made by the assistant, so the generated answer is not sent and the assistant sends the Uncertainty response instead.

In a real-world scenario you may want to offer the option to speak to a human agent. The important takeaway is that you have full control over the conditions under which a claim is considered verified, and over what happens when a generated answer cannot be substantiated by the information provided.
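The decision described above can be sketched as a small gate. This is not how AI Studio implements Verify Claim: the support scores are simply taken as inputs, and the 0.0 to 1.0 scale, the 0.5 threshold, the fallback texts, and the names `ScoredClaim` and `choose_reply` are all hypothetical. It shows the two knobs the lesson mentions, when a claim counts as verified and what to send when it isn't.

```python
from dataclasses import dataclass, field

# Hypothetical placeholder texts; configure your own responses in the flow.
UNCERTAINTY_RESPONSE = "Sorry, I couldn't confirm that. Please contact your local QuiqSilver Bikes location."
HANDOFF_RESPONSE = "Let me connect you with a member of our team."


@dataclass
class ScoredClaim:
    text: str
    score: float  # support score, assumed to be on a 0.0-1.0 scale
    evidence: list[str] = field(default_factory=list)  # citations from Prepare Evidence


def choose_reply(
    answer: str,
    claims: list[ScoredClaim],
    threshold: float = 0.5,  # arbitrary example cut-off, not a product default
    offer_handoff: bool = False,
) -> str:
    # A claim is verified only if it has at least one citation and clears the threshold.
    unsupported = [c for c in claims if not c.evidence or c.score < threshold]
    if not unsupported:
        # Also true when no claims were extracted, which is why an
        # "I'm unsure" style answer can pass verification.
        return answer
    # Otherwise withhold the generated answer and fall back.
    return HANDOFF_RESPONSE if offer_handoff else UNCERTAINTY_RESPONSE


claim = ScoredClaim("A replacement frame starts a new lifetime warranty.", score=0.12)
print(choose_reply("Yes, the new frame gets its own lifetime warranty.", [claim]))
```

Because `all`-or-nothing gating is used here, a single unsupported claim withholds the whole answer; a stricter or looser policy is just a different condition in `unsupported`.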

The Verify Claim behavior doesn't use an LLM to generate the verification score; it uses a purpose-built model designed specifically to detect hallucinations.

Key Concepts

Pre Answer Generation Guardrails

- Making upfront decisions about a customer's inquiry is a powerful technique for constraining the AI. Throughout the lessons, you've learned to leverage a range of different prompts in the Examine Customer Message behavior to route the conversation to the appropriate place in your flow.
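As a rough illustration of that routing, the sketch below assumes the message-in-scope and message-sensitive prompts each yield a boolean. The `Route` values and what each route does (declining, escalating) are hypothetical choices for this example, not AI Studio behavior.

```python
from enum import Enum


class Route(Enum):
    ANSWER = "answer"      # continue to Search and Generate Answer
    DECLINE = "decline"    # out of scope: politely decline
    ESCALATE = "escalate"  # sensitive: canned reply or human handoff


def route_message(in_scope: bool, sensitive: bool) -> Route:
    # Check sensitivity first so a sensitive message never reaches generation,
    # even if it is otherwise in scope.
    if sensitive:
        return Route.ESCALATE
    if not in_scope:
        return Route.DECLINE
    return Route.ANSWER


print(route_message(in_scope=True, sensitive=False))  # Route.ANSWER
```

Ordering the checks this way is a design choice: it means the most restrictive guardrail wins whenever two prompts disagree.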

Post Answer Generation Guardrails

- Ensuring the answer you send to your users is accurate, on tone, and helpful is as important as determining what inquiries to respond to in the first place. Post Answer Generation Guardrails, like those in the Review Answer and Prepare Evidence behaviors, are great ways to ensure that you're sending the right response to your users.

Verify Claim

- The Verify Claim behavior leverages a purpose-built model (not an LLM) designed to detect hallucinations. Combined with the pre- and post-answer-generation guardrails discussed above, and the ability to search for answers in AI Resources, it gives you a strong foundation for generating accurate, on-brand, and helpful answers for your users.

What's Next

Congratulations, you finished the fourth lesson! You should now have a good understanding of how to use AI Studio to create prompts that help ensure you generate accurate, on-brand answers from a data source.

Learn how to upload and transform a document in AI Resources.

Learn how to build your own prompt chain and generate answers from a knowledge base.
