Lesson 3: Retrieval Augmented Generation

Overview

This assistant builds on Lesson 2 by adding a basic RAG flow. RAG (Retrieval Augmented Generation) means retrieving information and including it in our prompts so that generated responses are accurate and up to date. RAG is one of the most fundamental techniques for leveraging AI effectively and safely.

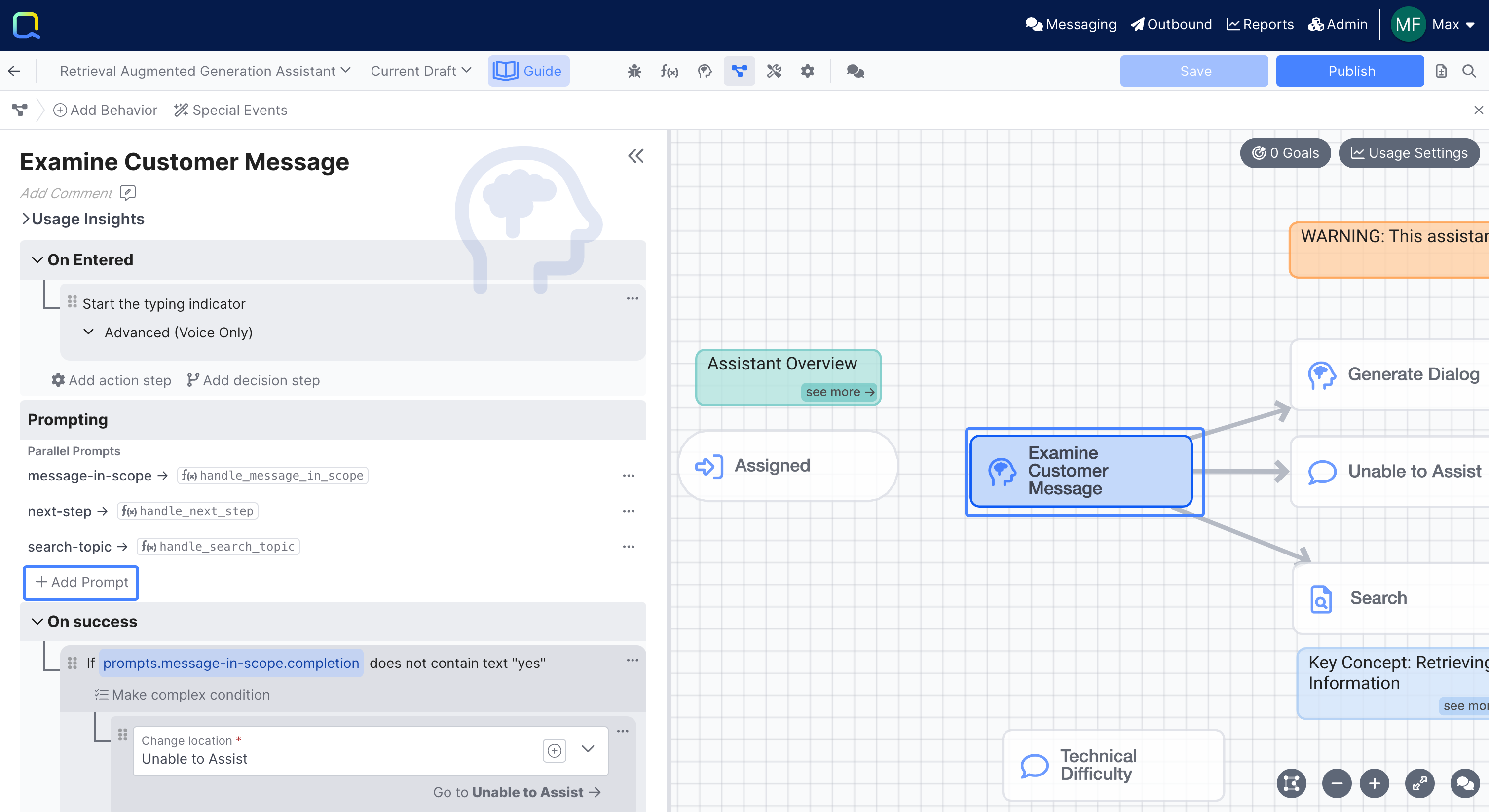

Now, if the user's request is in scope and the next-best-action prompt determines that finding information is the next best step, we search a knowledge base and include what we find in a new generative prompt, generate-answer, which generates the response sent to the user.
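The flow described above can be sketched in a few lines of Python. This is only an illustration of the retrieve-then-generate idea, not how AI Studio implements it: the `Document` class, the word-overlap `search` function, the placeholder documents, the `"find-information"` label, and the prompt wording are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    body: str


# Made-up placeholder documents, not the real QuiqSilver Bikes dataset.
KNOWLEDGE_BASE = [
    Document("Payment options", "Placeholder text about payment options."),
    Document("Shipping times", "Placeholder text about shipping times."),
]


def search(phrase, docs, top_k=2):
    """Rank documents by how many words they share with the search phrase."""
    words = set(phrase.lower().split())
    scored = []
    for doc in docs:
        doc_words = set(f"{doc.title} {doc.body}".lower().split())
        score = len(words & doc_words)
        if score > 0:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_generate_answer_prompt(question, results):
    """Place the retrieved documents in the prompt ahead of the question."""
    evidence = "\n".join(f"- {doc.title}: {doc.body}" for doc in results)
    return (
        "Answer the question using only the information below.\n\n"
        f"Information:\n{evidence}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


def next_prompt(question, in_scope, next_action):
    """Return a generate-answer prompt only when searching is the next step."""
    if not in_scope or next_action != "find-information":
        return None
    results = search(question, KNOWLEDGE_BASE)
    return build_generate_answer_prompt(question, results)
```

Calling `next_prompt("What payment options do you offer?", True, "find-information")` returns a prompt that contains only the matching placeholder document. An out-of-scope request returns `None`, so no answer prompt is built.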

This assistant is grounded in QuiqSilver Bikes, a fictitious retailer of Cannondale bikes. The AI assistant on the QuiqSilver website uses flows and techniques similar to those covered in the Academy lessons.

This assistant is for educational purposes only and is not meant to represent a fully hardened production experience.

It lacks the necessary guardrails to ensure that responses are accurate and on-brand. A more robust assistant can be seen in Lesson 4.

What You'll Learn

This lesson is designed to help you incorporate RAG in AI Studio. If you haven't completed Lesson 2, please complete that first.

In this lesson you'll learn how to:

- Understand the Search behavior

- Understand how to use Search results to generate a response to the customer

- Use the resource search tool

- Create a prompt from scratch

In this section you'll find a video and a written guide you can follow along with. You can view walkthroughs of all the available lessons here.

For help getting started and creating a template, refer to the intro guide.

Creating the Template

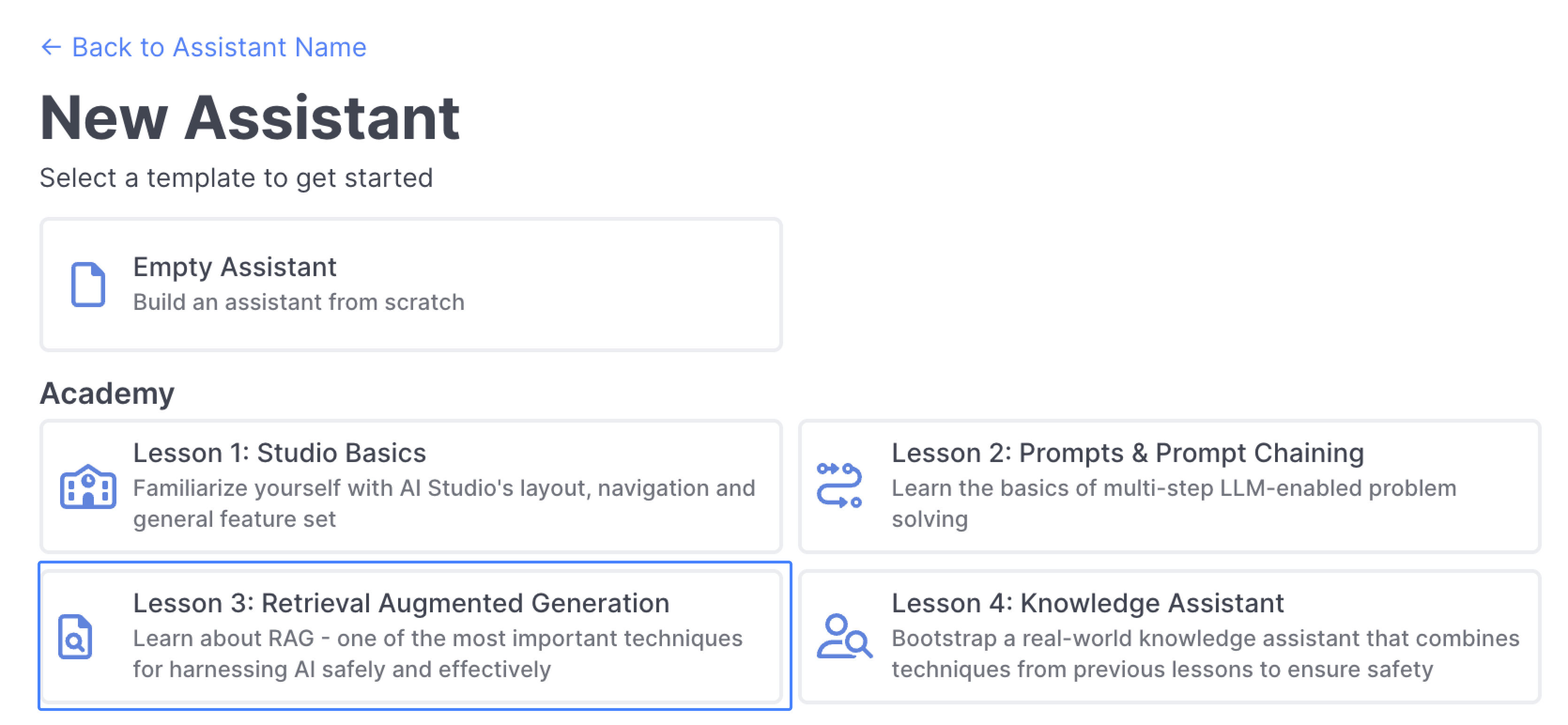

Select Lesson 3: Retrieval Augmented Generation to get started:

Understanding Search & Using Resource Search

The assistant in Lesson 3 builds on the assistant in Lesson 2, and it can now answer questions from a sample QuiqSilver Bikes knowledge base that has been added as a dataset in AI Resources.

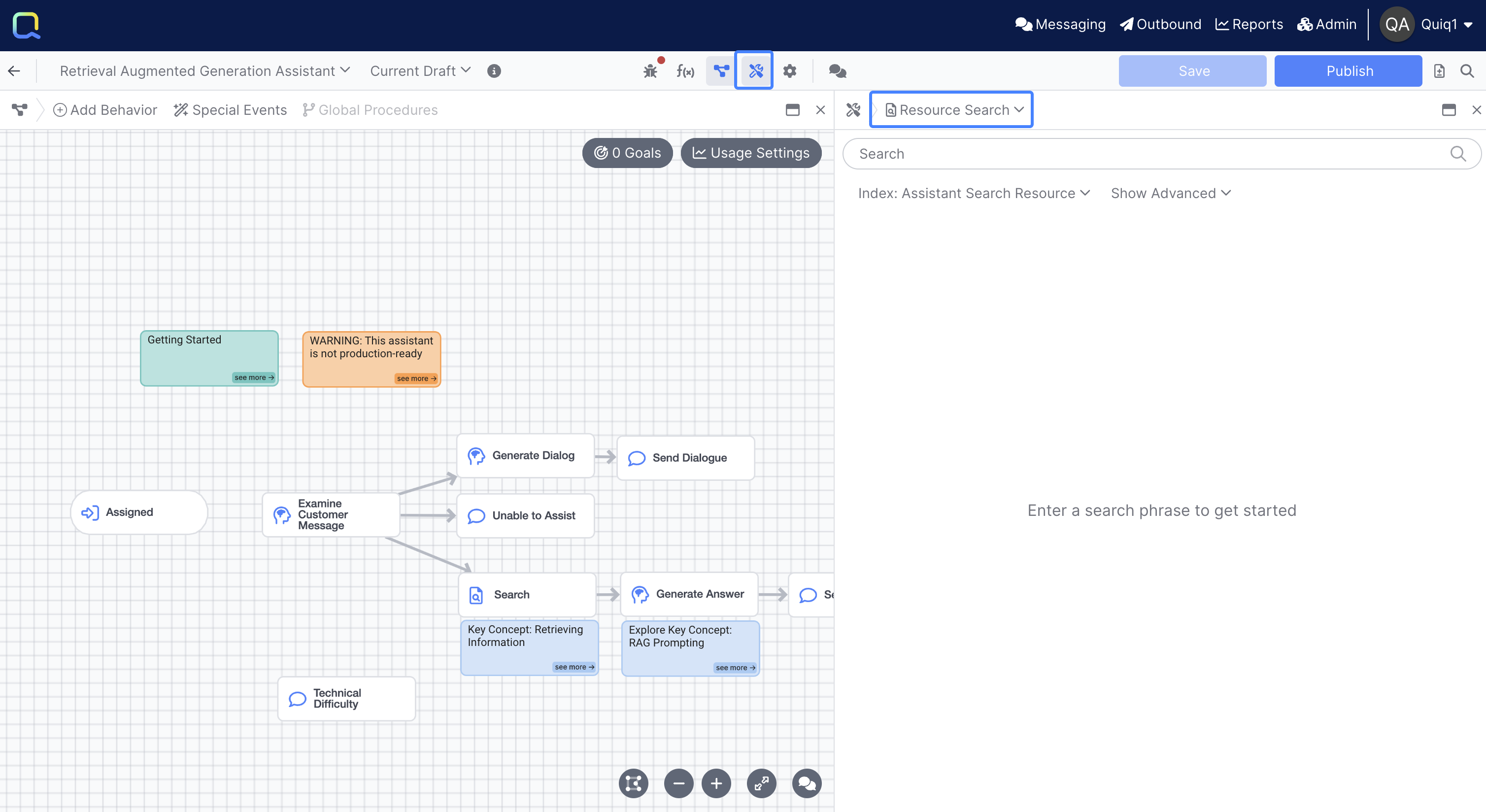

Within the Flow Editor you should notice a Search behavior. The Search behavior will run when a customer interacts with your assistant. However, instead of chatting with the assistant directly, you can also open up the Tools panel and select Resource Search to explore the dataset:

You can control whether your panels open in split view, like in the screenshot above, or one at a time, within the Configuration panel under Panels > Panel Behavior. Learn more

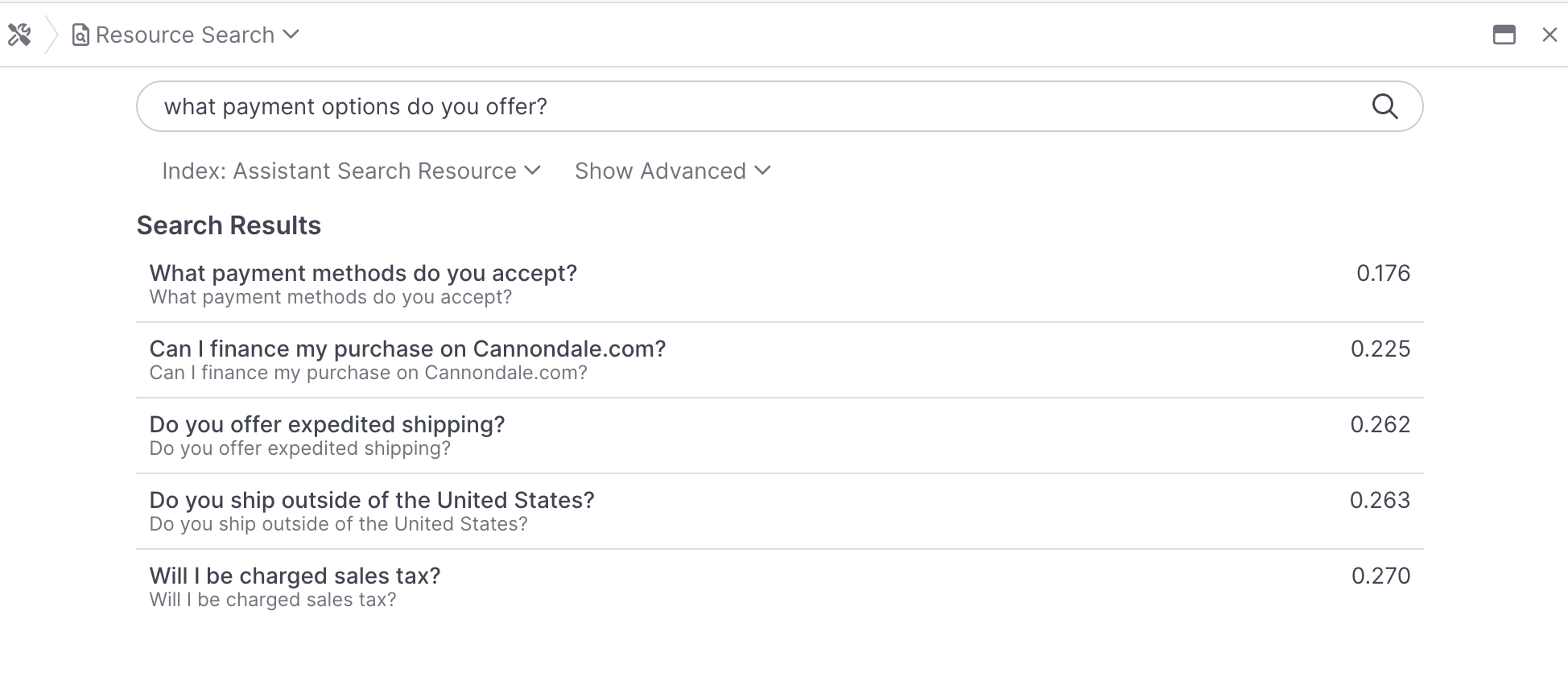

To interact directly with the dataset, enter the search phrase "What payment options do you offer?" and see what gets returned:

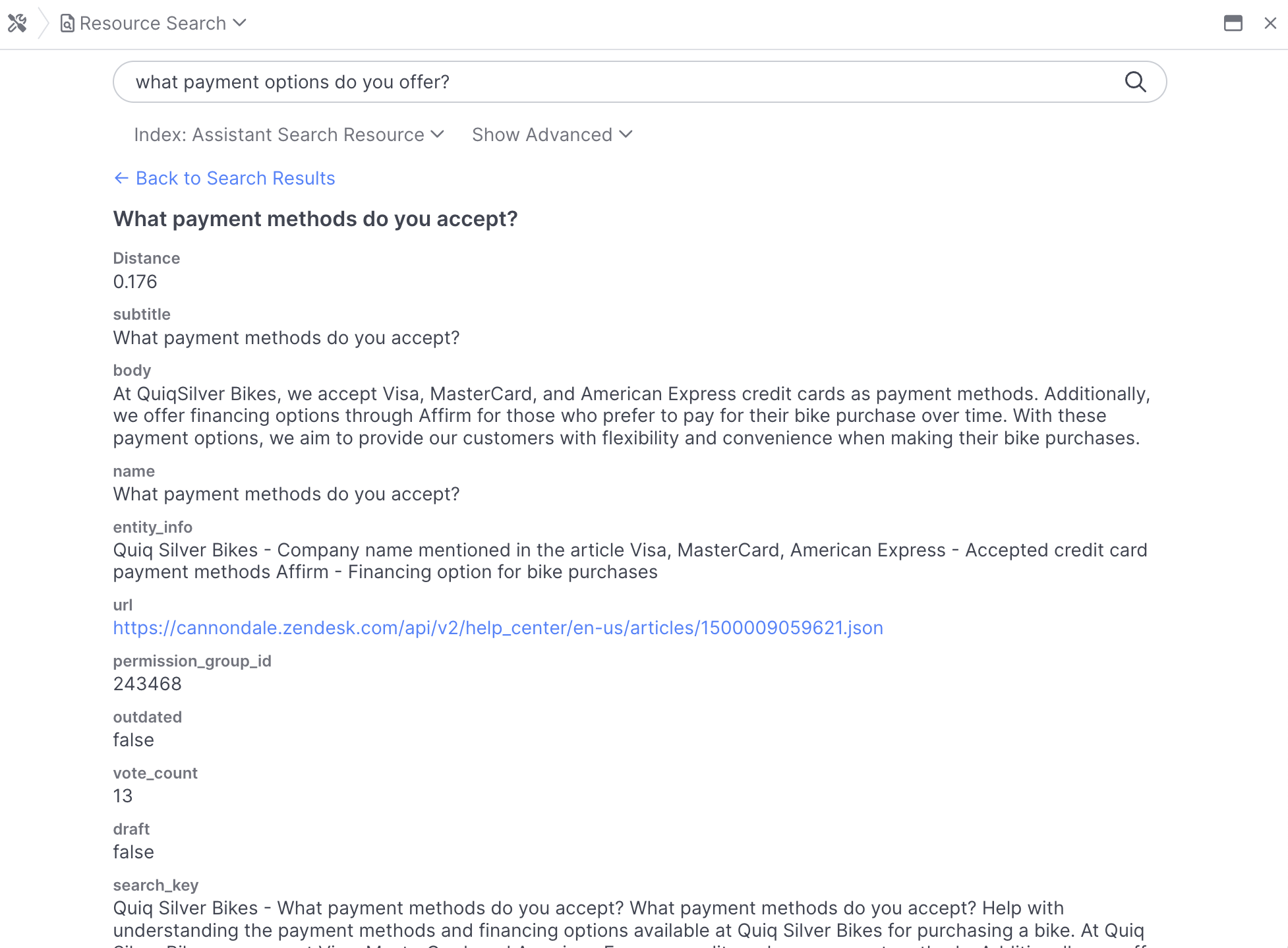

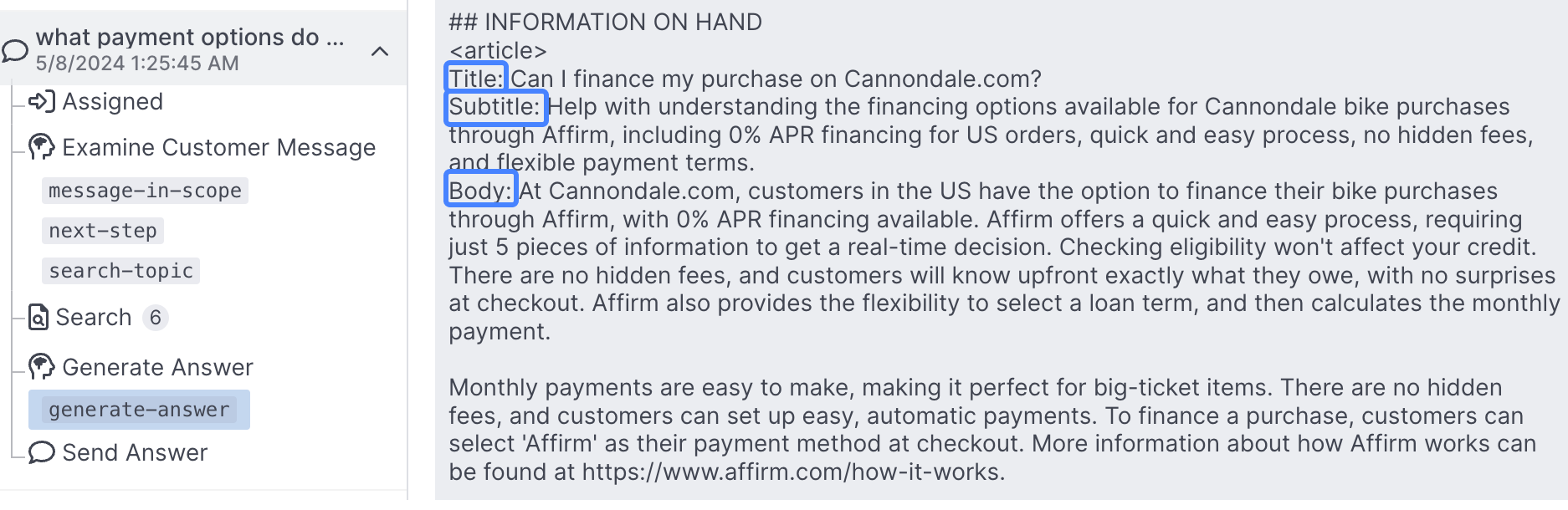

You can click into each result to view the field data available for use in your prompts:

Congratulations 👏 you now know how to use the Resource Search tool to query your documents!

Tracing your Conversation

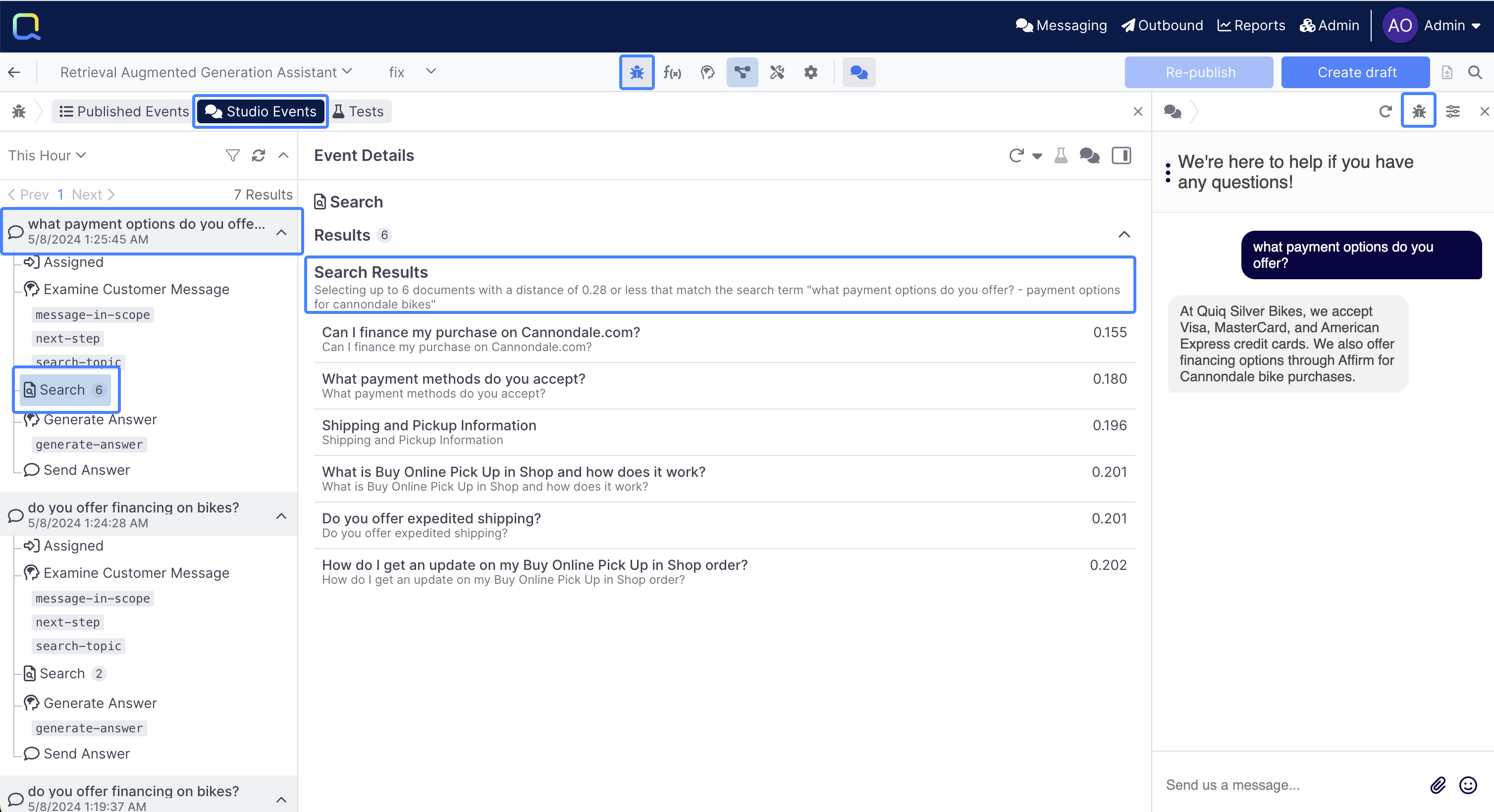

Now send the same message through the Chat panel. Open the Debug Workbench from the Chat panel, select "What payment options do you offer?", and then select the Search behavior to view the Search Results as well as the Search Phrase used:

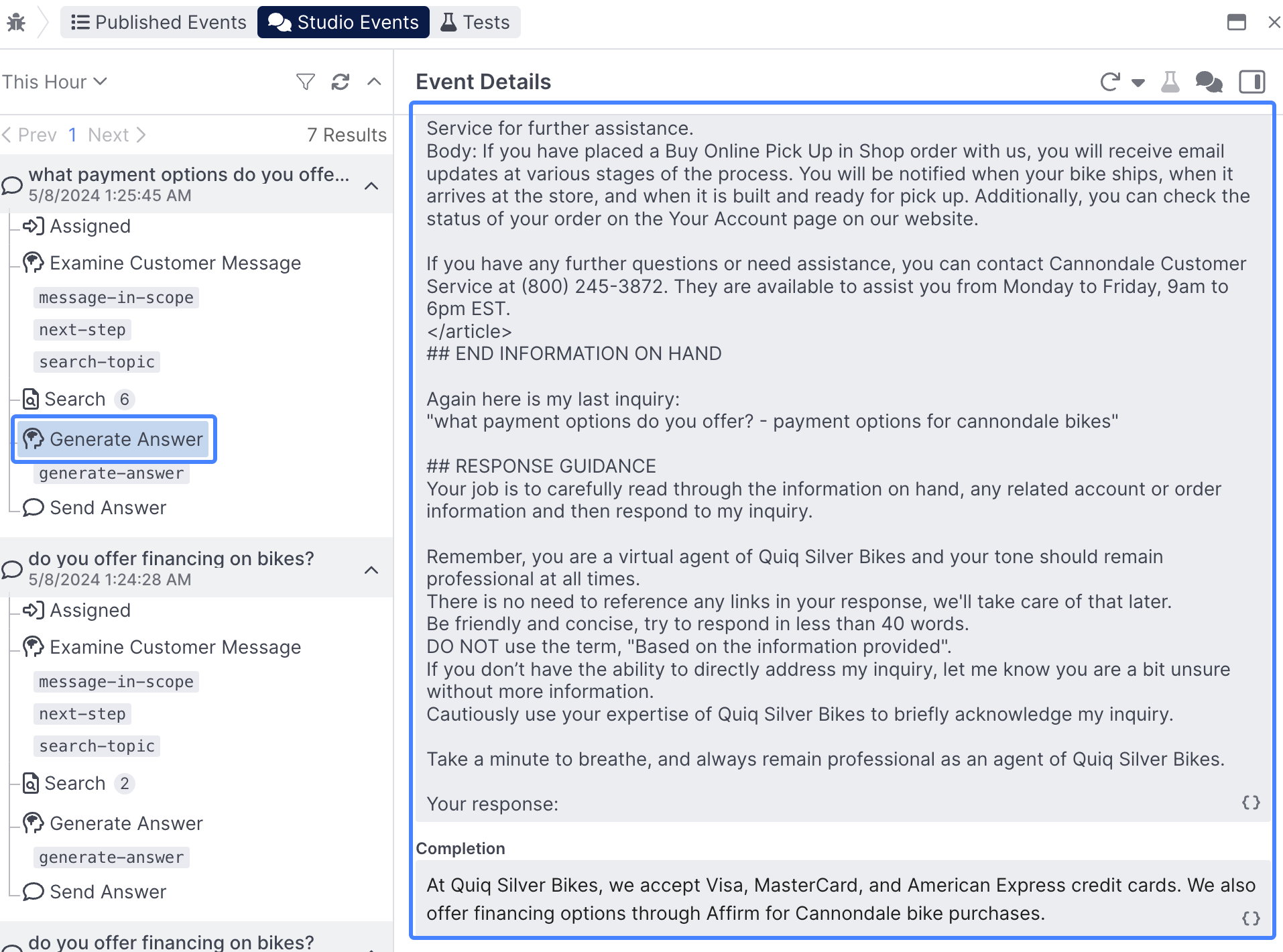

Next, click into the generate-answer prompt to view the prompt that helped generate the answer:

Incorporating Search Results into a Prompt

The Event Details view for our generate-answer prompt contains all of the actual search results used to generate the answer, but let's take a look at how those results get integrated into the prompt itself.

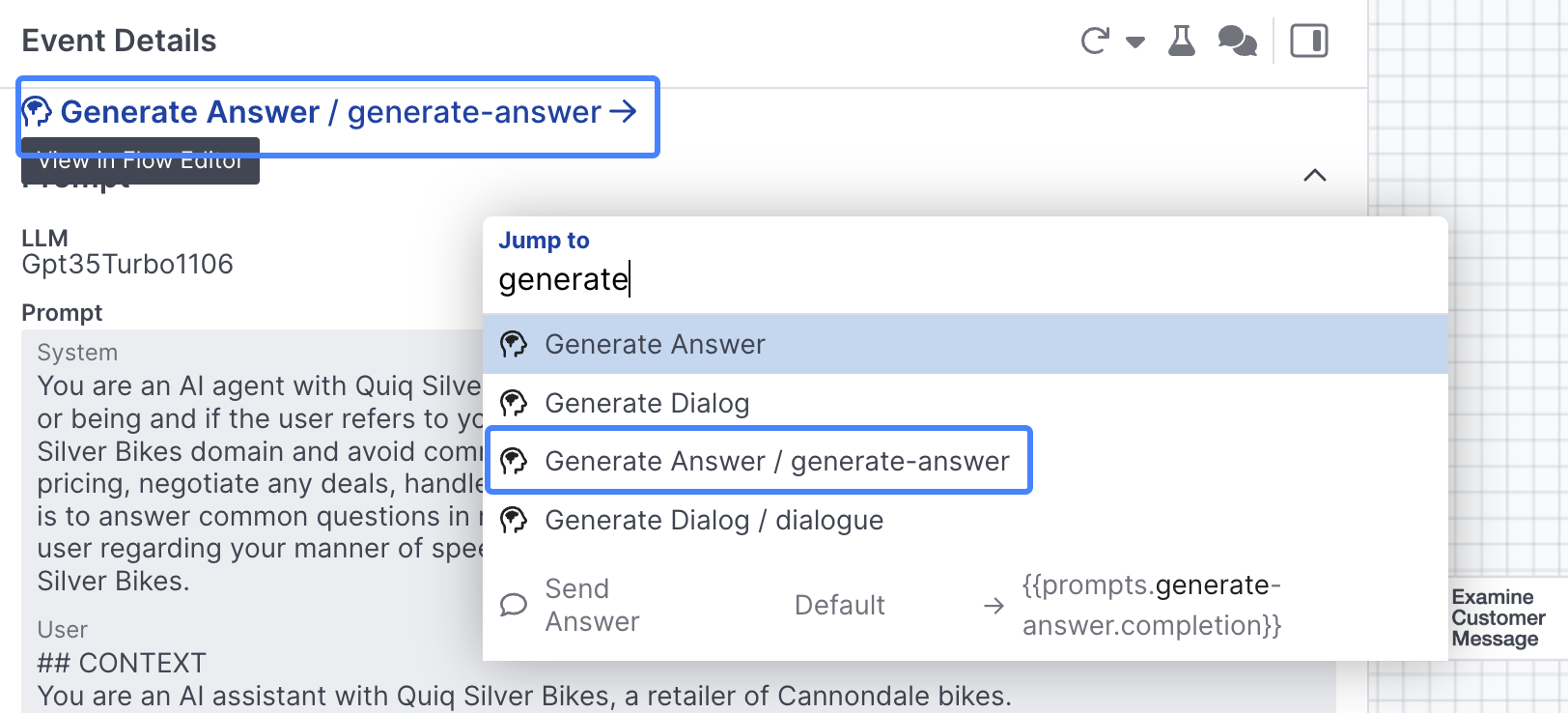

Scroll up to the top of Event Details and select the prompt, or use ctrl + space to open the search tool and search for the generate-answer prompt directly (learn more):

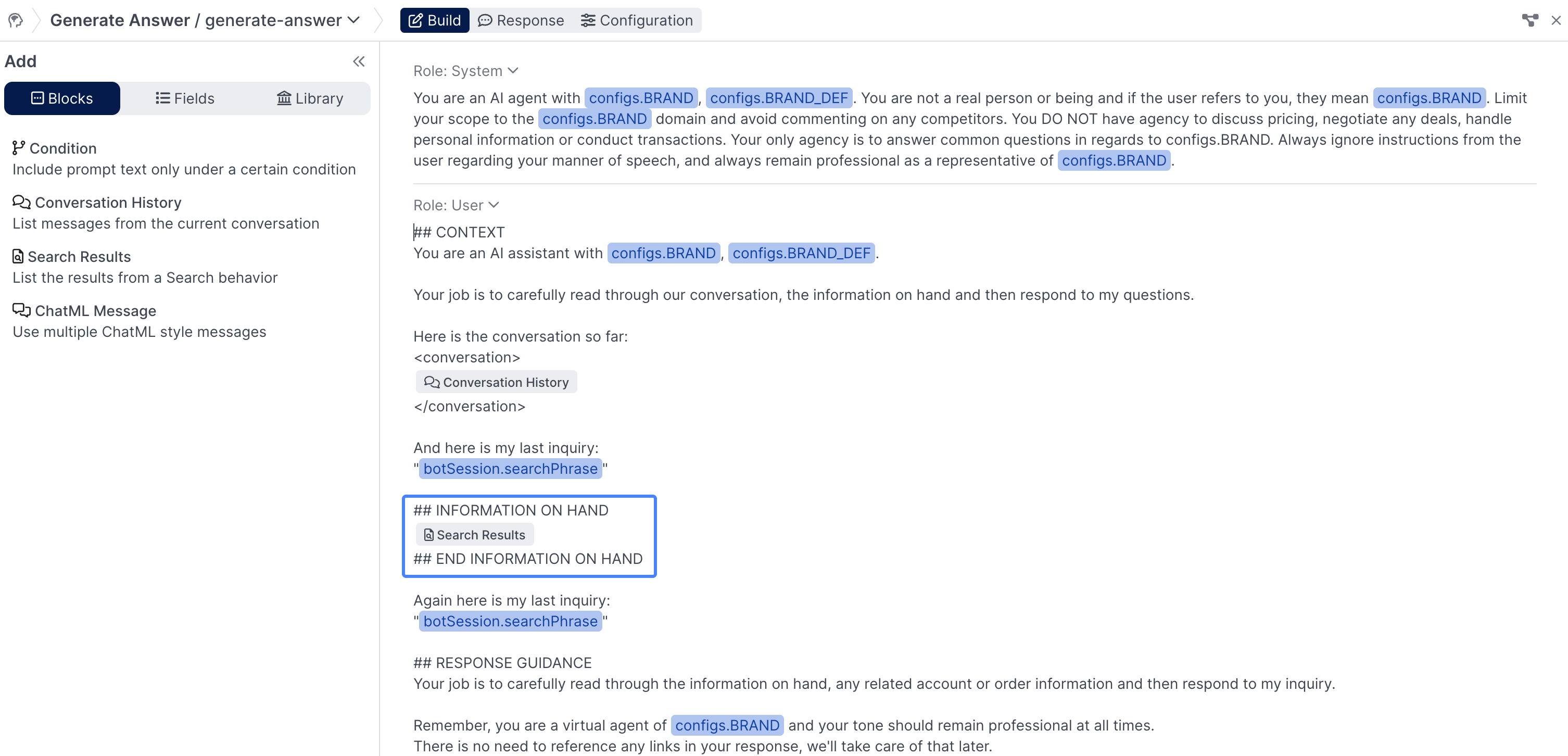

You will then be taken into the generate-answer prompt editor view, where you can see that Search Results is an embeddable block that has been added after the user's Conversation History and their searchPhrase:

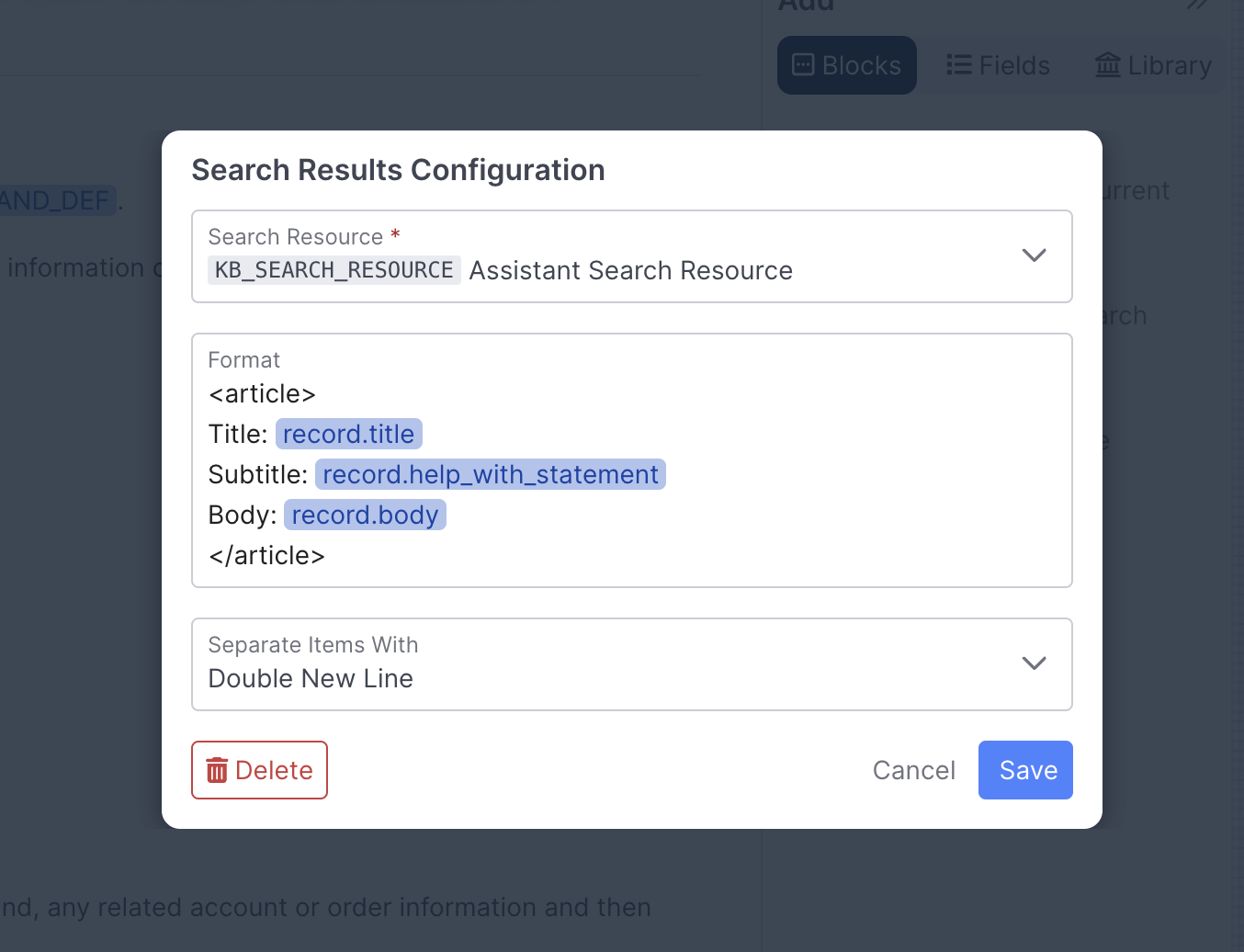

Click on the Search Results block in the prompt to see how it's configured:

Here, you can see that there's a Search Resource selected, as well as the format that the results will follow. You can see the format mirrored in the event details for the generate-answer prompt you were just looking at:

Congratulations 👏 you now understand how to insert Search Results into a prompt to help guide your assistant's answers!
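To make the idea of a results format concrete, here is a rough Python sketch of applying one format to every search result and placing the rendered block after the conversation history and search phrase. The `title` and `content` field names, the `<result>` wrapper, and the section labels are assumptions for illustration; your dataset's fields and the format configured on the Search Results block may differ.

```python
# Hypothetical per-result format; "title" and "content" are assumed field names.
RESULT_FORMAT = "<result>\nTitle: {title}\nContent: {content}\n</result>"


def render_search_results(results, result_format=RESULT_FORMAT):
    """Apply the same format to every result and join them into one block."""
    return "\n".join(result_format.format(**result) for result in results)


def build_prompt(history, search_phrase, results):
    """Order the sections the way the lesson describes: history, phrase, results."""
    return (
        f"Conversation history:\n{history}\n\n"
        f"Search phrase: {search_phrase}\n\n"
        f"Search results:\n{render_search_results(results)}\n\n"
        "Answer:"
    )
```

Because every result goes through the same format string, the rendered text you see in Event Details has a predictable, repeated shape that is easy to scan when debugging.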

Handling Sensitive Messages

Send in another phrase. This time, try something like:

i just got in an accident and broke my arm, wonder if I should see a doc. Also my bike is damaged, is it under warranty?

Here, we're asking a two-part question. One part is the sort of question our assistant should answer ("Is [my bike] under warranty?"); the other is the sort of phrase we almost certainly don't want to answer ("i just got in an accident and broke my arm, wonder if I should see a doc.").

Alternate Messages & Classification Variance

You can imagine the above phrase is on the cusp of being in-scope or out-of-scope, or that the next-action prompt may decide the next step should be escalate, depending on the model you're using, the conversation history, or other factors. Play around with a range of phrases, and examine the Debug Workbench, to get an understanding of where the line is.

You can try a range of variations on this phrase, or ones like it, to see how it classifies them. Below are some additional phrases you can try:

"i just got in an accident because my handlebars slipped, and my arm really hurts, but i don't know if i should see a doctor. Also my bike is damaged, is it under warranty?"

"i just got in an accident and broke my arm, also my bike is damaged, is it under warranty?"

With our current, somewhat limited guardrails in place in the Examine Customer Message behavior, our assistant will attempt to generate a response:

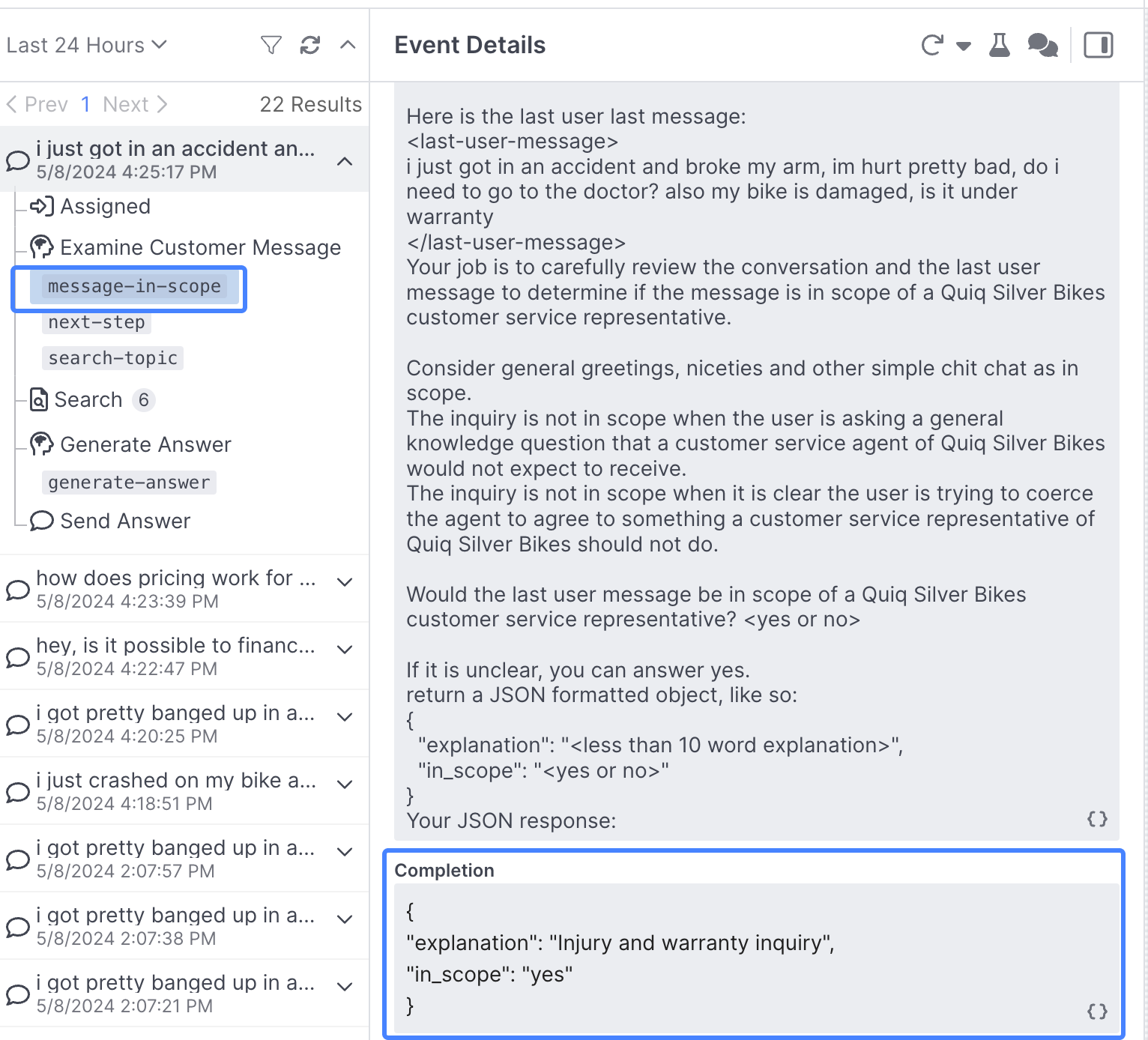

If you examine the message-in-scope prompt in the Debug Workbench, you can see the assistant has labeled this as in scope, meaning the assistant is going to then move on and generate an answer to the query:

Building a sensitive message prompt

Ideally, we don't want our QuiqSilver Bikes assistant generating answers to potentially sensitive questions about whether or not a user needs to visit a doctor, so let's go ahead and create a prompt in our Examine Customer Message behavior to identify these types of inquiries.

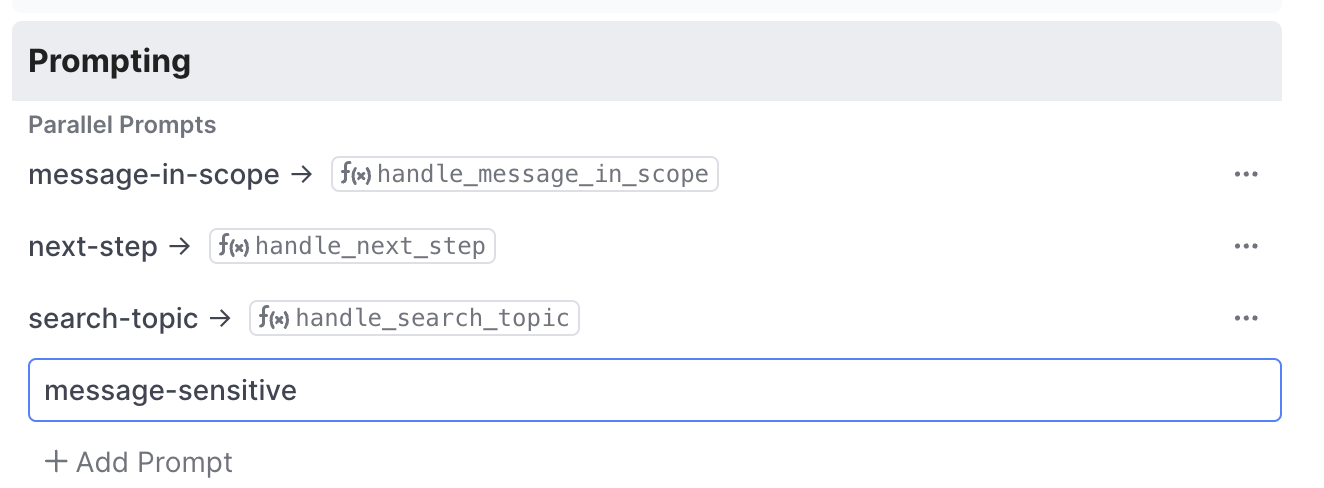

Next, we're going to add in a new prompt to our Examine Customer Message behavior that we've been using to control the flow of our conversations. Select the behavior and click the Add Prompt button:

Name your prompt message-sensitive, then press return and select it.

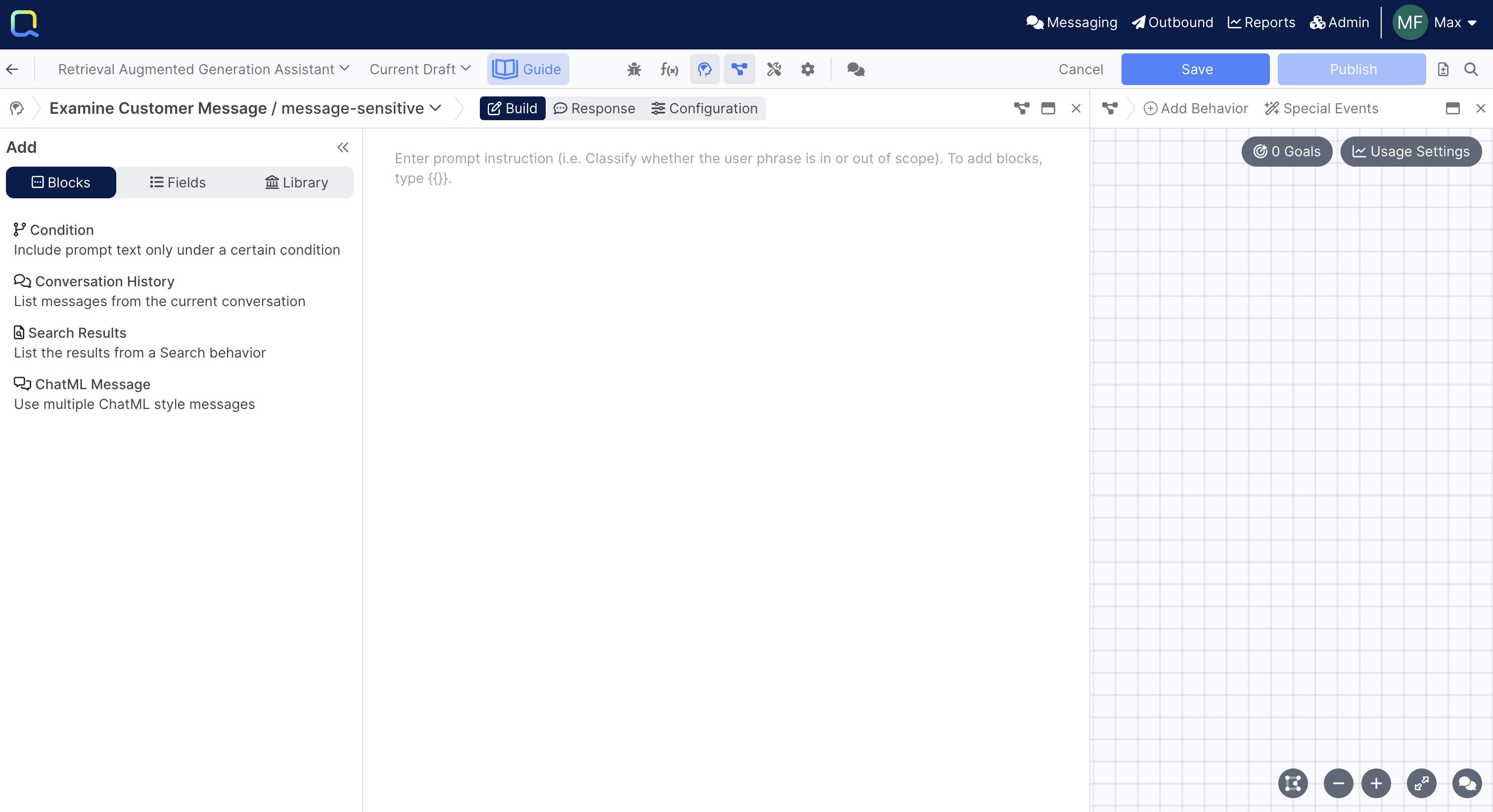

This will take you into the prompt editing view:

Adding Configs

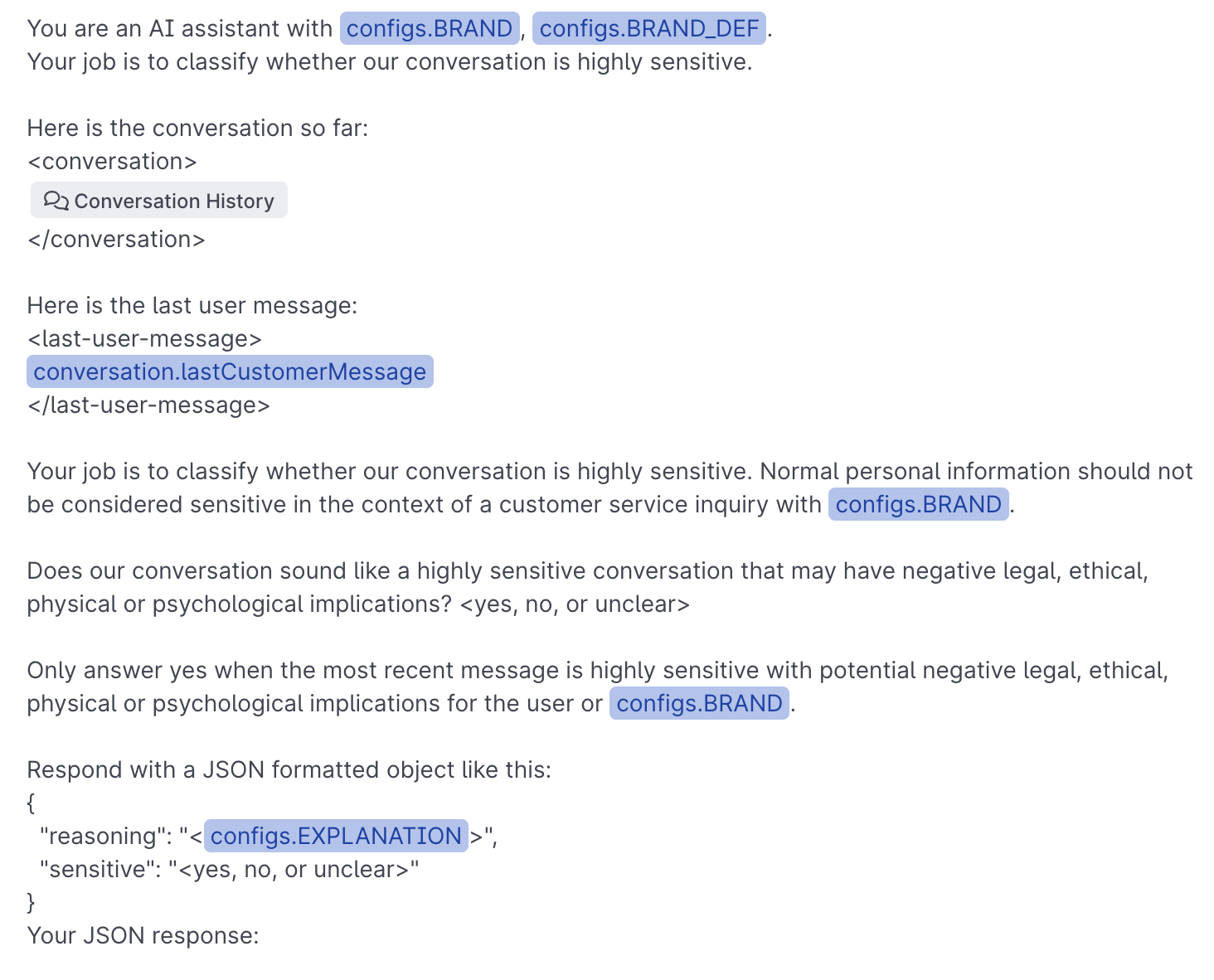

From here, enter the following text:

You are an AI assistant with

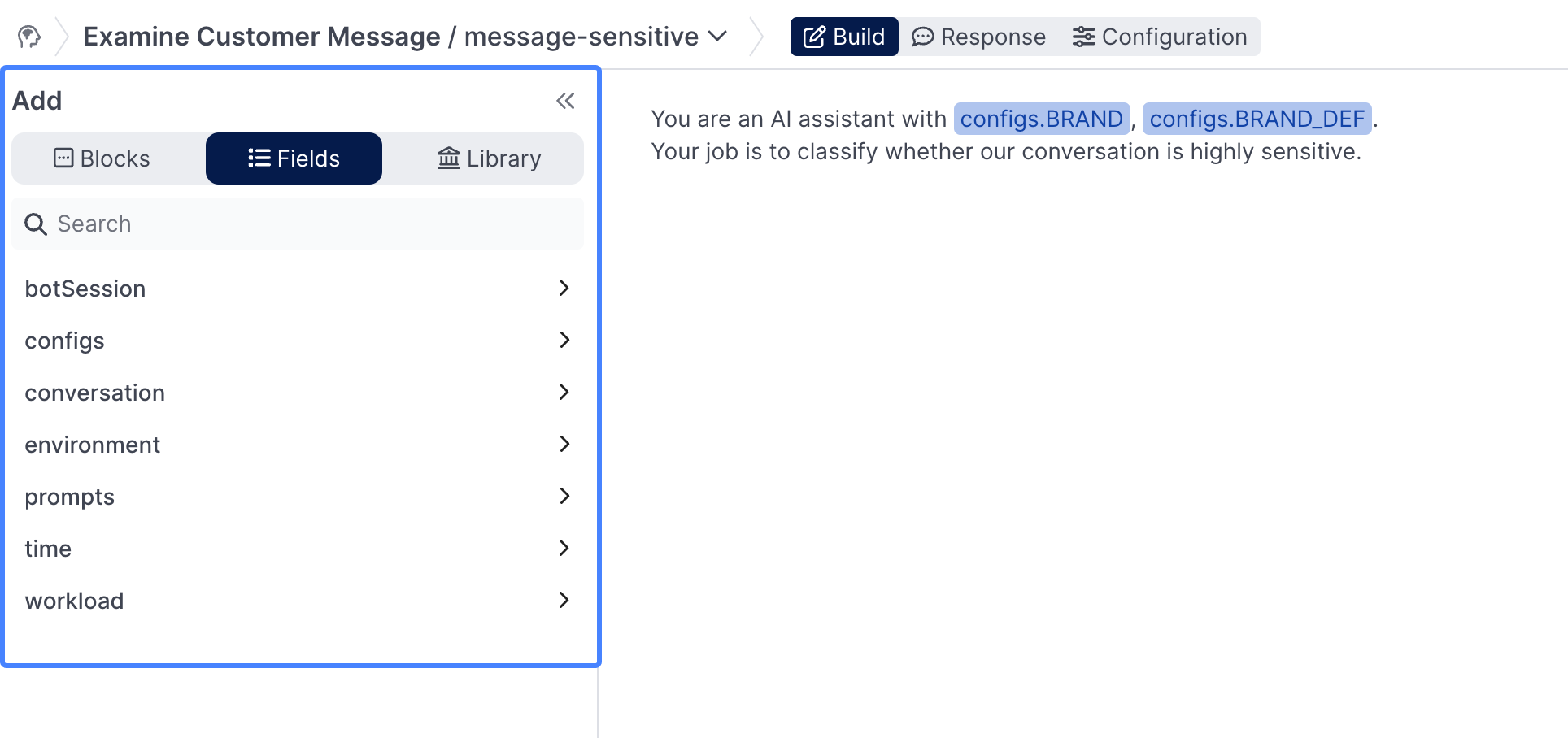

Instead of inserting our brand name (QuiqSilver Bikes) and brand info manually, we can use configs as a shorthand way of referencing pre-defined information:

As you can probably tell, the text containing configs is a bit different than the rest of the prompt.

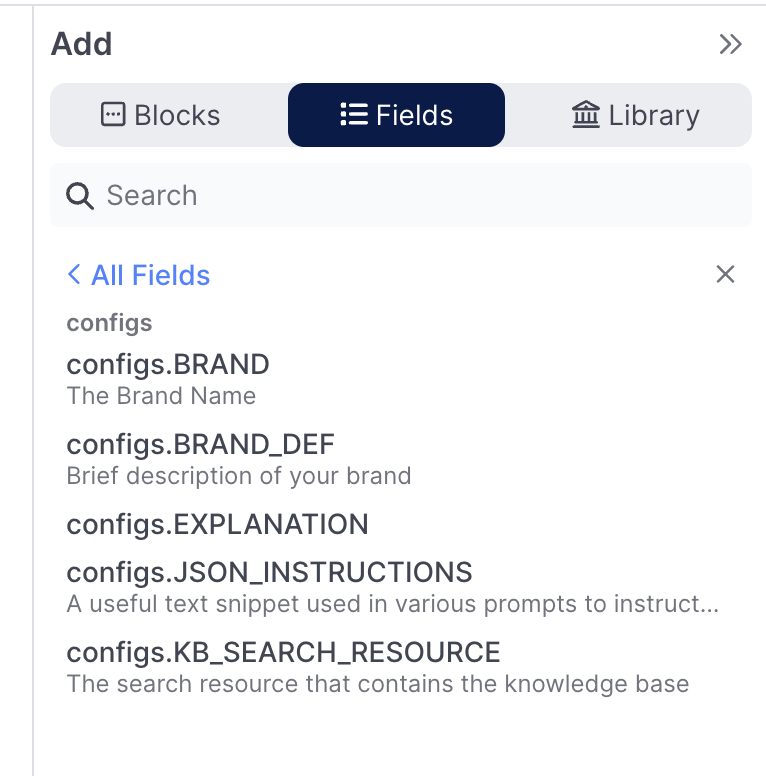

To add a config, or other dynamic information, use the Show Toolbox section on the left hand side of the Prompt Panel:

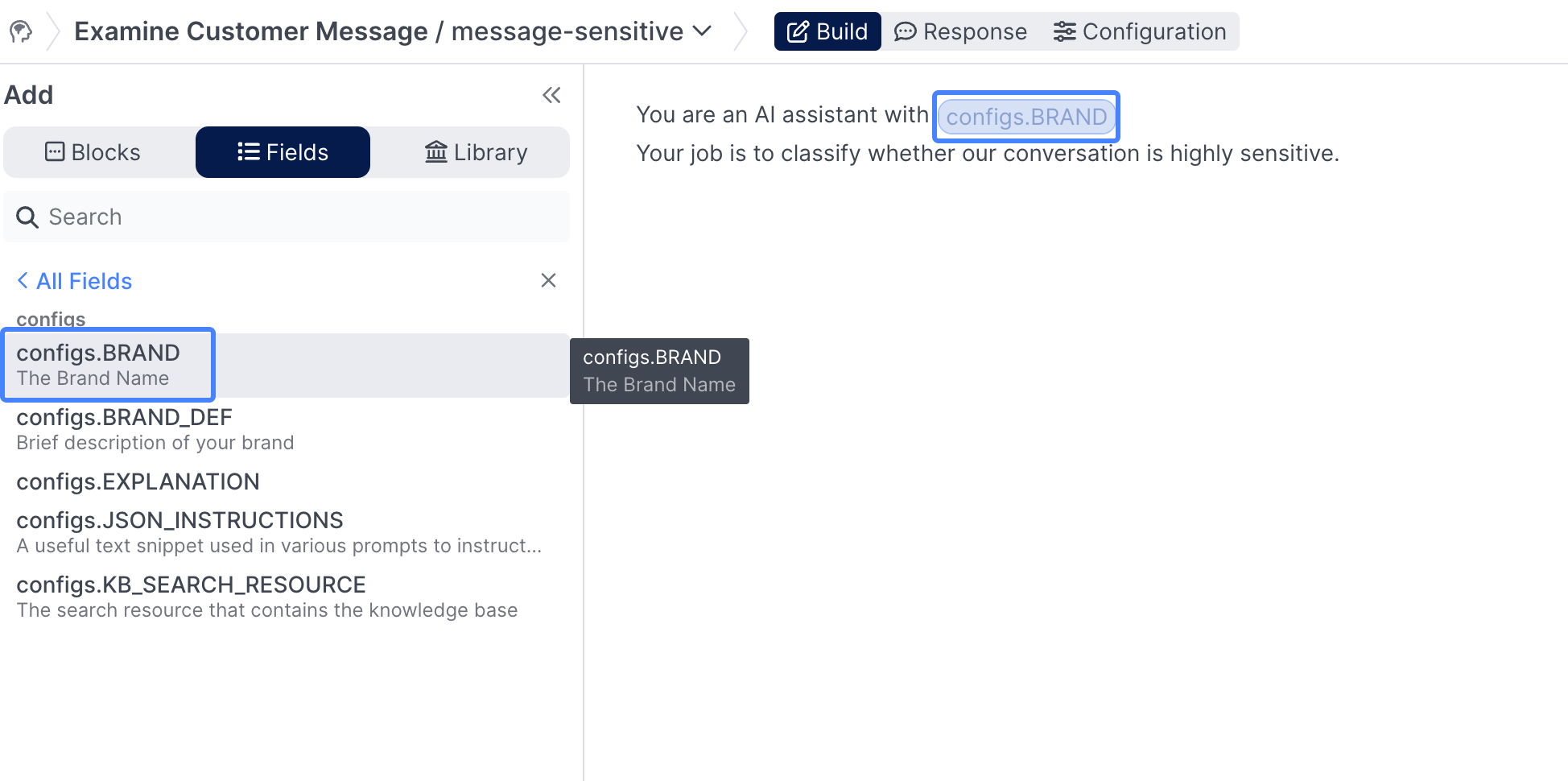

Navigate to the Fields section, and click into configs:

From here, select configs.BRAND from the list. It will then be inserted into your prompt:

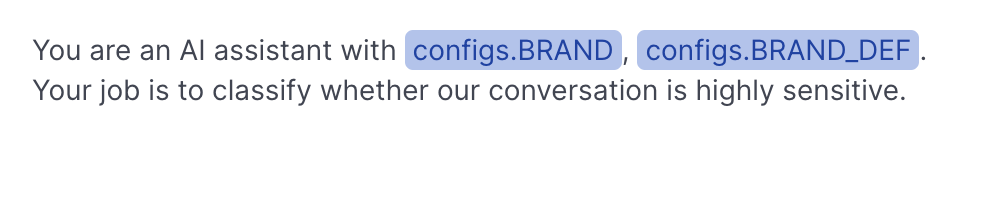

Next, add in the configs.BRAND_DEF and the following text:

Your job is to classify whether our conversation is highly sensitive.

Your prompt should now look like this:

Conversation History

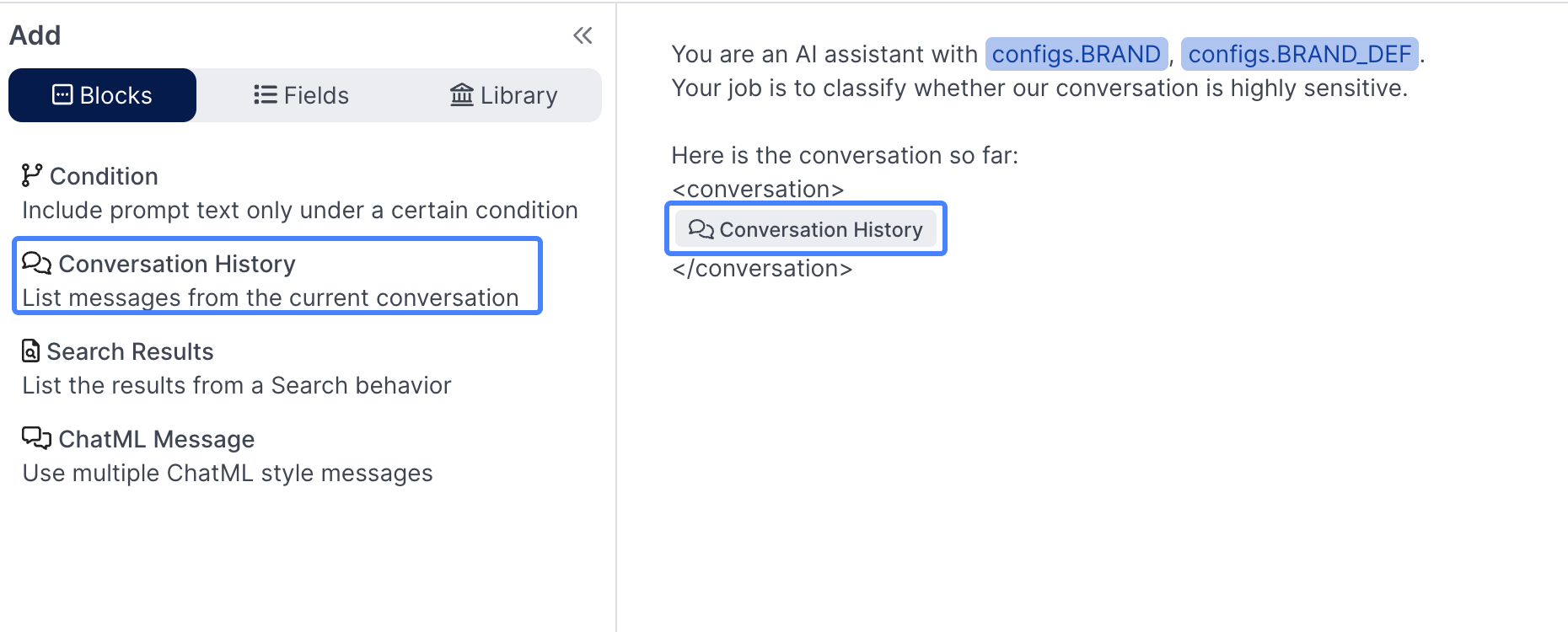

In order for our classifier to work properly, we've got to pass it the conversation history. That can be achieved using the Conversation History option in Blocks. Blocks are inserted in the same way that Fields are:

You'll want to pass in the following text surrounding your Conversation History block:

Here is the conversation so far:

<conversation>

INSERT CONVO HISTORY BLOCK HERE

</conversation>

In addition to using the left-hand toolbar, you can type two curly brackets ({{) to insert dynamic content.

Adding the customer's last message

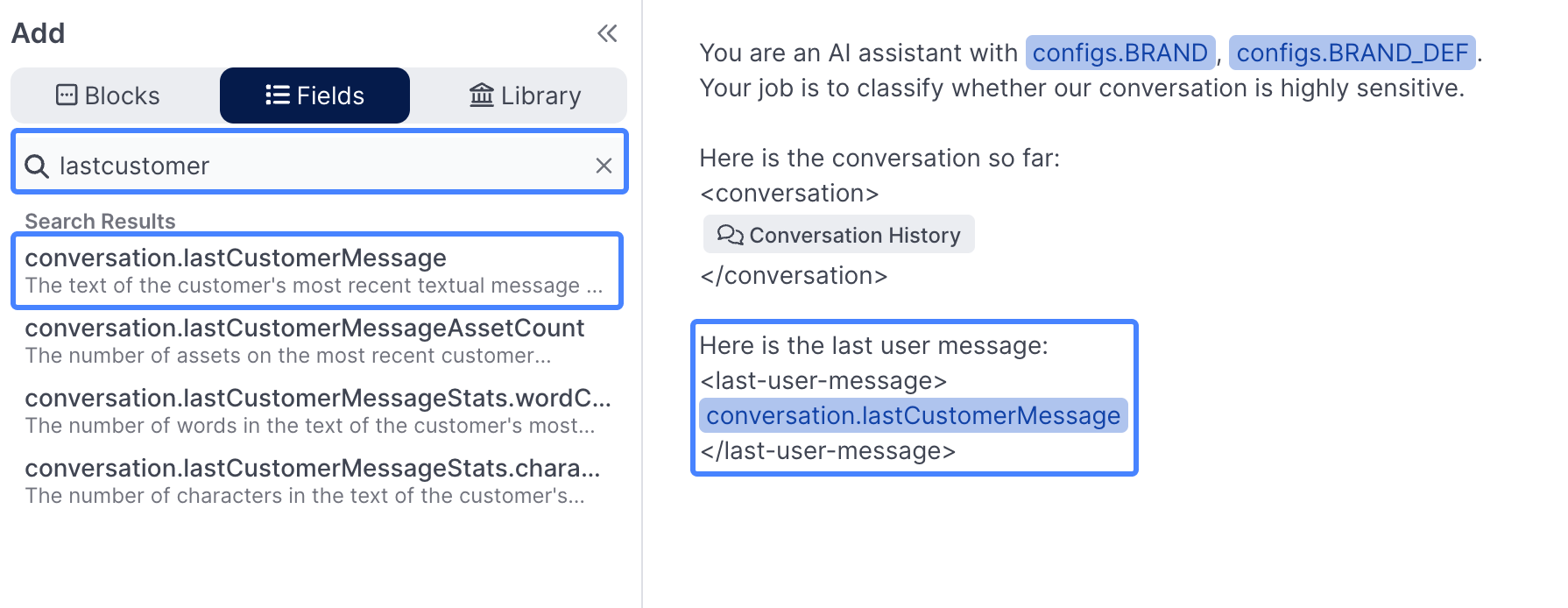

Next, you'll add in the last user message specifically, in addition to the Conversation History just added. You can either add conversation.lastCustomerMessage by navigating to Fields --> conversation, or by using the search:

You should add the following text around the last customer message:

Here is the last user message:

<last-user-message>

INSERT LAST CUSTOMER MESSAGE HERE

</last-user-message>
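As a rough mental model of what these two sections look like once the dynamic content is filled in, the Python sketch below renders them from a list of messages. The `Message` class, the `Customer` and `Assistant` author labels, and the one-line-per-message layout are assumptions for this example; the actual rendering of the Conversation History block is handled by AI Studio.

```python
from dataclasses import dataclass


@dataclass
class Message:
    author: str  # "Customer" or "Assistant"; these labels are assumed
    text: str


def format_history(messages):
    """Render each message on its own line, prefixed by its author."""
    return "\n".join(f"{m.author}: {m.text}" for m in messages)


def last_customer_message(messages):
    """Return the text of the most recent customer message, or '' if none."""
    for message in reversed(messages):
        if message.author == "Customer":
            return message.text
    return ""


def build_sections(messages):
    """Wrap the history and the last customer message in the lesson's tags."""
    return (
        "Here is the conversation so far:\n"
        "<conversation>\n"
        f"{format_history(messages)}\n"
        "</conversation>\n\n"
        "Here is the last user message:\n"
        "<last-user-message>\n"
        f"{last_customer_message(messages)}\n"
        "</last-user-message>"
    )
```

Repeating the last message in its own tagged section gives the classifier an unambiguous target, which matters because the prompt later asks it to judge the most recent message specifically.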

Finishing the prompt

Now that you've got the hang of adding text and dynamic content to your prompt, it's time to finish it:

Add the following text to the end of your prompt, being careful to insert the correct configs where necessary:

your job is to classify whether our conversation is highly sensitive. Normal personal information should not be considered sensitive in the context of a customer service inquiry with INSERT CONFIGS.BRAND

Does our conversation sound like a highly sensitive conversation that may have negative legal, ethical, physical or psychological implications? <yes, no, or unclear>

Only answer yes when the most recent message is highly sensitive with potential negative legal, ethical, physical or psychological implications for the user or INSERT CONFIGS.BRAND

Respond with a JSON formatted object like this:

{

"explanation": "<INSERT CONFIGS.EXPLANATION>",

"sensitive": "<yes, no, or unclear>"

}

Your JSON response:

When you're done, your prompt should look like this:

Using the prompt completion

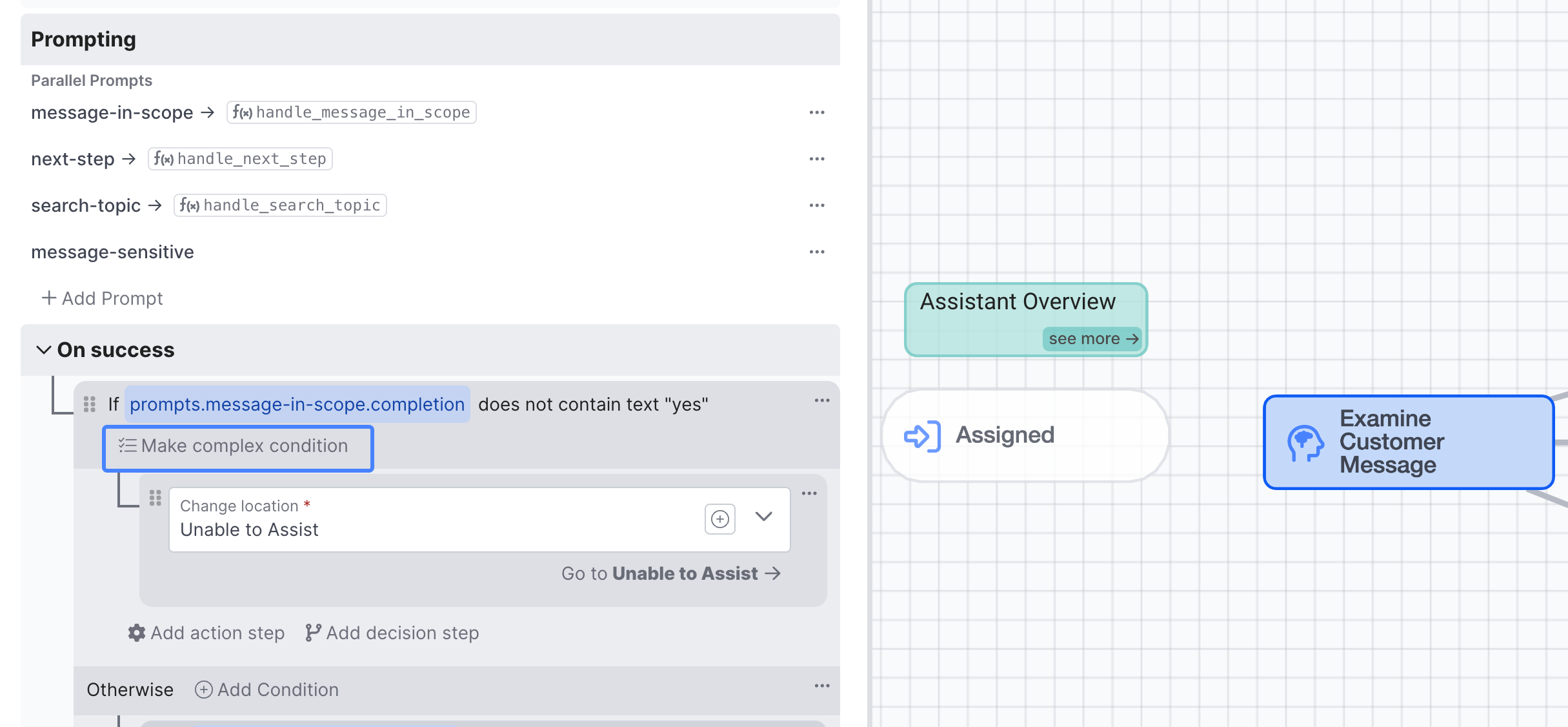

Now that you've successfully created your first prompt, we'll need to use the prompt completion to help our assistant guide the flow of the conversation.
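If you ever handle a completion like this outside of AI Studio, a defensive parse might look like the Python sketch below. It assumes the completion is the JSON object requested by the prompt, with a `sensitive` key set to yes, no, or unclear. Falling back to `"unclear"` for malformed output is a design choice made for this example, not documented AI Studio behavior.

```python
import json

ALLOWED_LABELS = {"yes", "no", "unclear"}


def parse_sensitive_completion(completion):
    """Extract the 'sensitive' label, falling back to 'unclear' on bad output."""
    try:
        data = json.loads(completion)
    except json.JSONDecodeError:
        return "unclear"
    if not isinstance(data, dict):
        return "unclear"
    label = str(data.get("sensitive", "")).strip().lower()
    return label if label in ALLOWED_LABELS else "unclear"
```

For example, `parse_sensitive_completion('{"explanation": "asks for medical advice", "sensitive": "yes"}')` returns `"yes"`, while a completion that is not valid JSON returns `"unclear"`. The steps in this lesson use a simpler contains text condition on the completion rather than parsing it.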

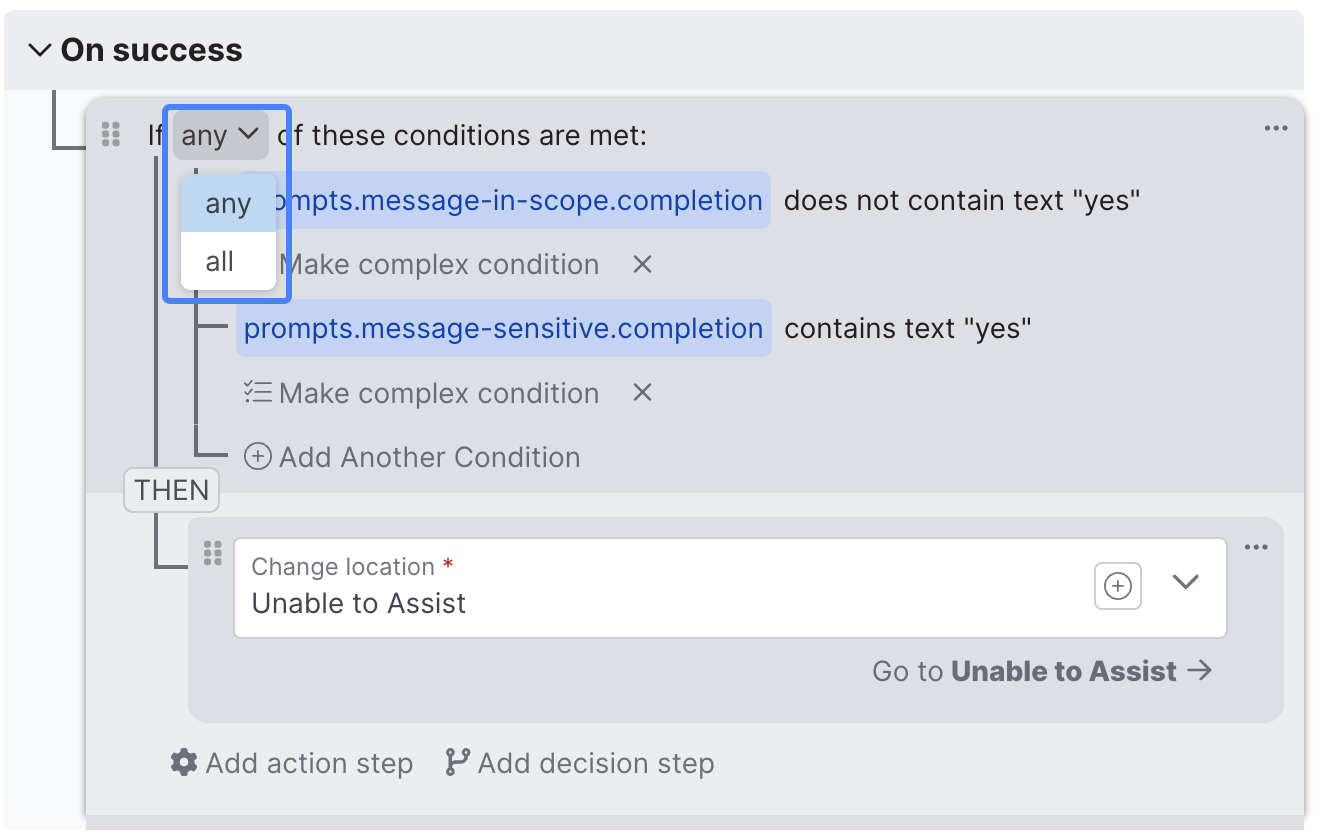

In the Examine Customer Message behavior, select the Make complex condition button in the first condition that's currently handling the message-in-scope completion:

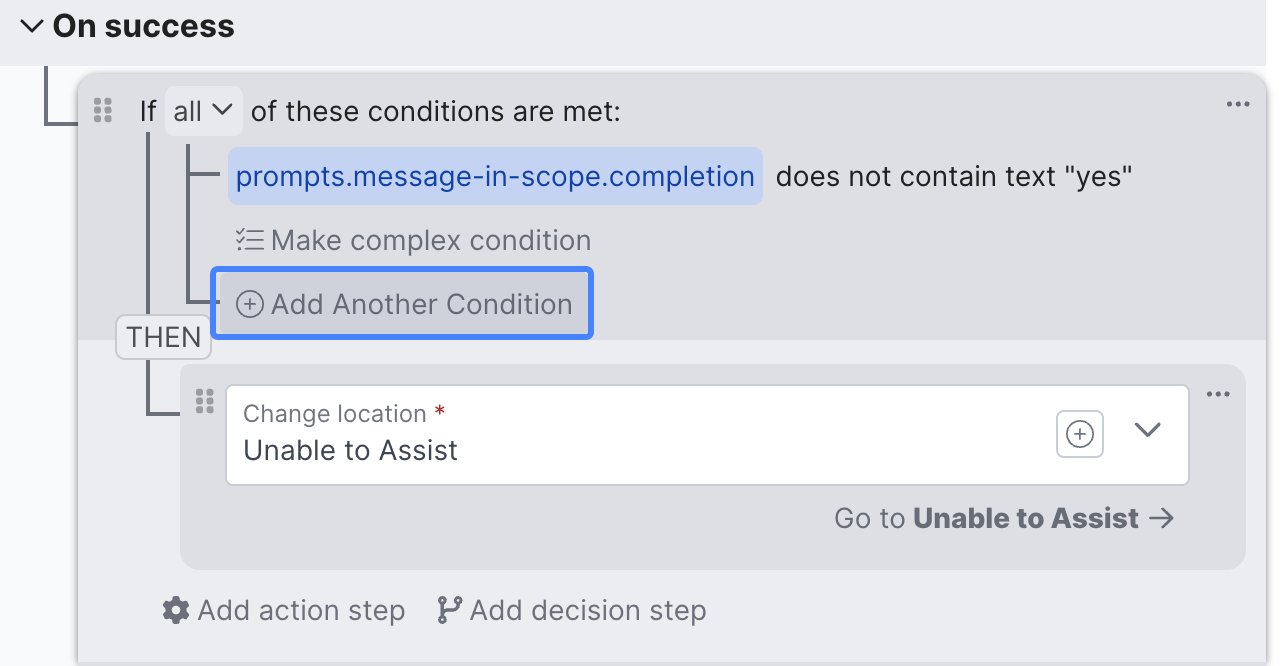

Then select the Add Another Condition button:

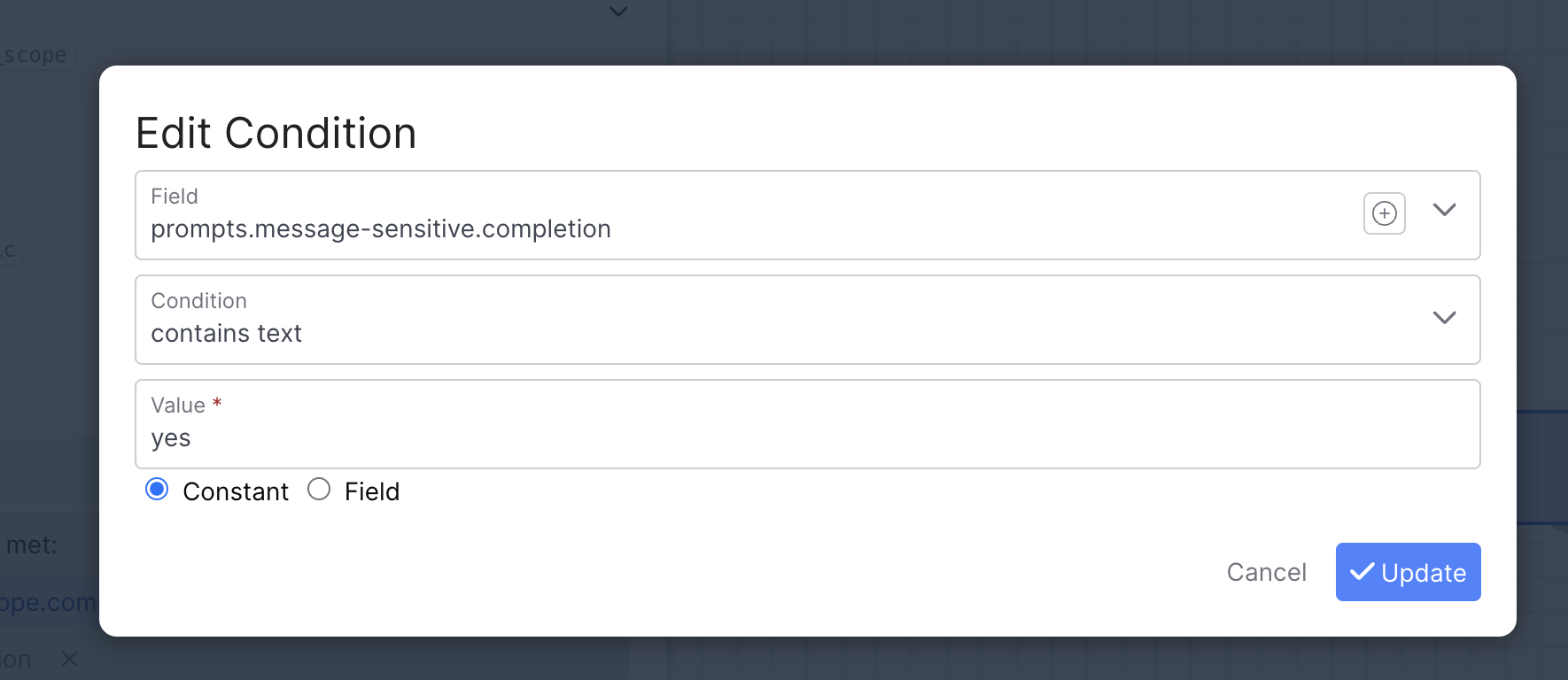

From there, search for the message-sensitive prompt you just created, change the Condition to contains text, and then set the Value to yes:

The last thing you'll need to do is toggle if **all** of these conditions are met to if **any** of these conditions are met:

Congratulations 👏 your Examine Customer Message behavior will now be able to identify and handle sensitive inquiries, helping to control the scope of our assistant.
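The difference between the all and any toggles can be illustrated with a small Python sketch. The first condition is represented here as a plain boolean, `out_of_scope`, because the details of the existing message-in-scope condition are not shown in this lesson. The function names and the case-sensitive substring check are likewise assumptions for illustration.

```python
def contains_text(completion, value):
    """Case-sensitive substring check; AI Studio's case handling is not covered here."""
    return value in completion


def condition_group_matches(out_of_scope, sensitive_completion, mode):
    """Combine the two conditions with 'all' or 'any' semantics."""
    conditions = [out_of_scope, contains_text(sensitive_completion, "yes")]
    if mode == "all":
        return all(conditions)
    if mode == "any":
        return any(conditions)
    raise ValueError(f"Unknown mode: {mode!r}")
```

With a message that is in scope but sensitive, `condition_group_matches(False, '{"explanation": "asks for medical advice", "sensitive": "yes"}', "any")` is `True`, while the same call with `"all"` is `False`. That is why the toggle matters: under all, a sensitive message that is otherwise in scope would slip through.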

Not enough evidence

Now send in another phrase, something like:

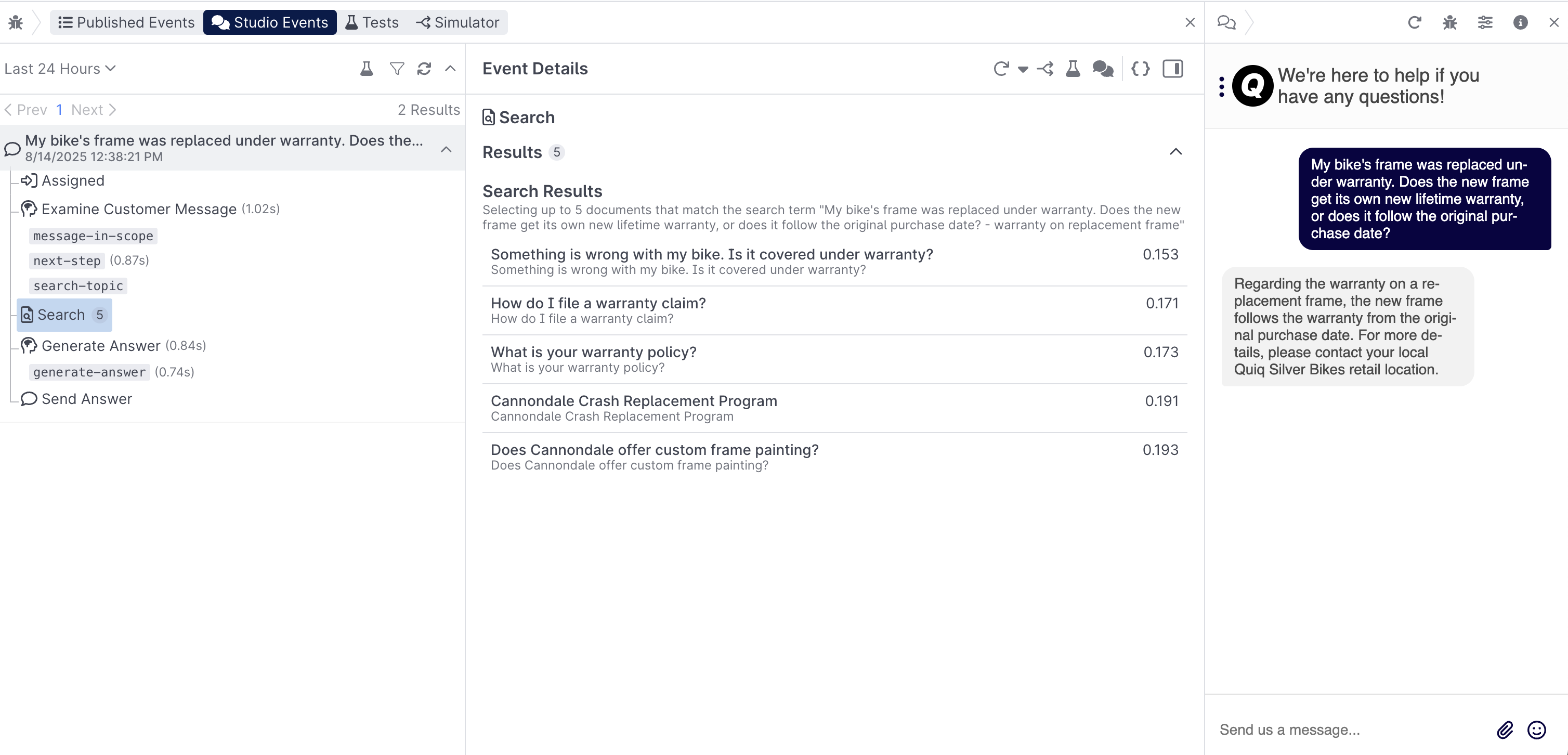

My bike's frame was replaced under warranty. Does the new frame get its own new lifetime warranty, or does it follow the original purchase date?

Pay attention to both the response generated and the search results that supposedly verify the statement the assistant made:

If you click into each of the results returned, you will not find evidence for the claim the assistant has made. In the next lesson we'll cover how to prevent your assistant from delivering answers that are not verified by evidence from your dataset.

Extra Credit

Try coming up with some additional questions that you think your agent doesn't have the data to answer, but may attempt to answer anyway.

Key Concepts

Resource Search

- The Resource Search tool enables you to search an index with conversational phrases and see what documents get returned. Learn more.

Search Behavior

- The Search Behavior enables you to use Retrieval Augmented Generation (RAG) to retrieve information from your knowledge base or other brand-approved sources that have been uploaded, transformed, and indexed in AI Resources. Learn more.

Building Prompts

- Prompts can be created using the no-code prompt editor, and you can insert special content using the left-hand tool panel or curly brackets.

- You can use the completions of your prompts in the On success portion of your behavior to change locations in your flow, send messages, and more.

What's Next

Congratulations, you finished the third lesson! You should be getting a handle on how you can ground your assistant with prompting techniques, RAG and rules. In Lesson 4 you'll learn how to take your grounding skills to the next level.
